Gamma correction or gamma is a nonlinear operation used to encode and decode luminance or tristimulus values in video or still image systems. Gamma correction is, in the simplest cases, defined by the following power-law expression:

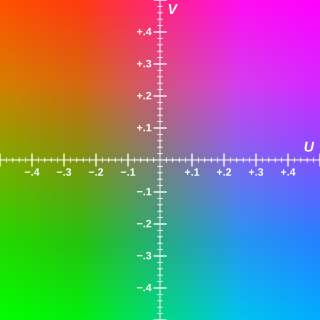

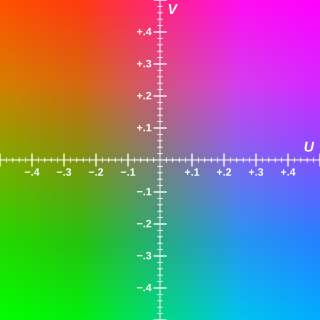

Y′UV, also written YUV, is the color model found in the PAL analogue color TV standard. A color is described as a Y′ component (luma) and two chroma components U and V. The prime symbol (') denotes that the luma is calculated from gamma-corrected RGB input and that it is different from true luminance. Today, the term YUV is commonly used in the computer industry to describe colorspaces that are encoded using YCbCr.

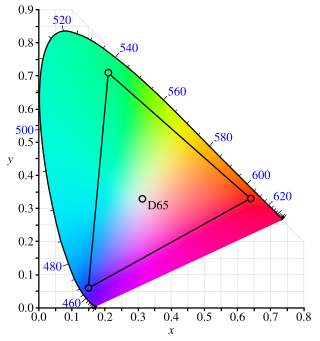

An RGB color space is one of many specific additive colorimetric color spaces based on the RGB color model.

Chroma subsampling is the practice of encoding images by implementing less resolution for chroma information than for luma information, taking advantage of the human visual system's lower acuity for color differences than for luminance.

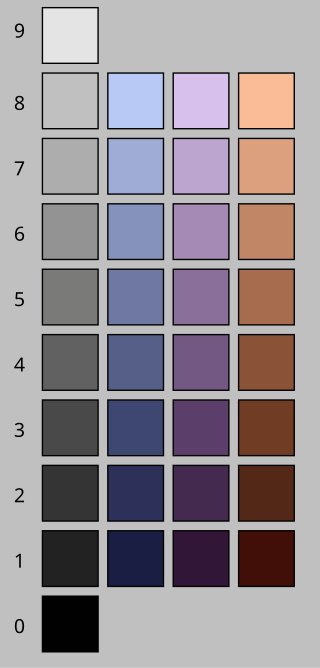

HSL and HSV are the two most common cylindrical-coordinate representations of points in an RGB color model. The two representations rearrange the geometry of RGB in an attempt to be more intuitive and perceptually relevant than the cartesian (cube) representation. Developed in the 1970s for computer graphics applications, HSL and HSV are used today in color pickers, in image editing software, and less commonly in image analysis and computer vision.

The CIELAB color space, also referred to as L*a*b*, is a color space defined by the International Commission on Illumination in 1976. It expresses color as three values: L* for perceptual lightness and a* and b* for the four unique colors of human vision: red, green, blue and yellow. CIELAB was intended as a perceptually uniform space, where a given numerical change corresponds to a similar perceived change in color. While the LAB space is not truly perceptually uniform, it nevertheless is useful in industry for detecting small differences in color.

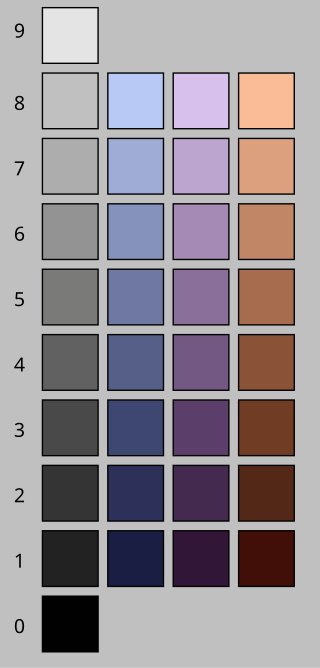

In digital photography, computer-generated imagery, and colorimetry, a grayscale image is one in which the value of each pixel is a single sample representing only an amount of light; that is, it carries only intensity information. Grayscale images, a kind of black-and-white or gray monochrome, are composed exclusively of shades of gray. The contrast ranges from black at the weakest intensity to white at the strongest.

The RGB chromaticity space, two dimensions of the normalized RGB space, is a chromaticity space, a two-dimensional color space in which there is no intensity information.

YCbCr, Y′CbCr, or Y Pb/Cb Pr/Cr, also written as YCBCR or Y′CBCR, is a family of color spaces used as a part of the color image pipeline in video and digital photography systems. Y′ is the luma component and CB and CR are the blue-difference and red-difference chroma components. Y′ is distinguished from Y, which is luminance, meaning that light intensity is nonlinearly encoded based on gamma corrected RGB primaries.

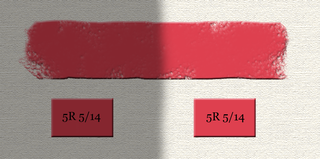

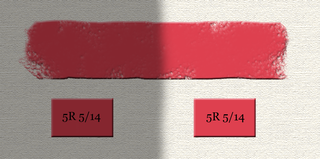

Colorfulness, chroma and saturation are attributes of perceived color relating to chromatic intensity. As defined formally by the International Commission on Illumination (CIE) they respectively describe three different aspects of chromatic intensity, but the terms are often used loosely and interchangeably in contexts where these aspects are not clearly distinguished. The precise meanings of the terms vary by what other functions they are dependent on.

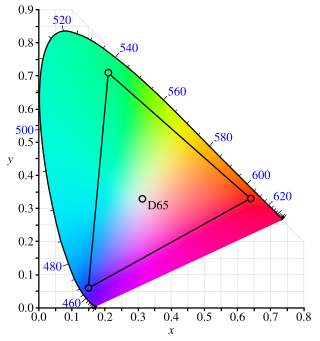

sRGB is a standard RGB color space that HP and Microsoft created cooperatively in 1996 to use on monitors, printers, and the World Wide Web. It was subsequently standardized by the International Electrotechnical Commission (IEC) as IEC 61966-2-1:1999. sRGB is the current defined standard colorspace for the web, and it is usually the assumed colorspace for images that are neither tagged for a colorspace nor have an embedded color profile.

The Adobe RGB (1998) color space or opRGB is a color space developed by Adobe Inc. in 1998. It was designed to encompass most of the colors achievable on CMYK color printers, but by using RGB primary colors on a device such as a computer display. The Adobe RGB (1998) color space encompasses roughly 50% of the visible colors specified by the CIELAB color space – improving upon the gamut of the sRGB color space, primarily in cyan-green hues. It was subsequently standardized by the IEC as IEC 61966-2-5:1999 with a name opRGB and is used in HDMI.

The CIE 1931 color spaces are the first defined quantitative links between distributions of wavelengths in the electromagnetic visible spectrum, and physiologically perceived colors in human color vision. The mathematical relationships that define these color spaces are essential tools for color management, important when dealing with color inks, illuminated displays, and recording devices such as digital cameras. The system was designed in 1931 by the "Commission Internationale de l'éclairage", known in English as the International Commission on Illumination.

LMS, is a color space which represents the response of the three types of cones of the human eye, named for their responsivity (sensitivity) peaks at long, medium, and short wavelengths.

In video, luma represents the brightness in an image. Luma is typically paired with chrominance. Luma represents the achromatic image, while the chroma components represent the color information. Converting R′G′B′ sources into luma and chroma allows for chroma subsampling: because human vision has finer spatial sensitivity to luminance differences than chromatic differences, video systems can store and transmit chromatic information at lower resolution, optimizing perceived detail at a particular bandwidth.

Lightness is a visual perception of the luminance of an object. It is often judged relative to a similarly lit object. In colorimetry and color appearance models, lightness is a prediction of how an illuminated color will appear to a standard observer. While luminance is a linear measurement of light, lightness is a linear prediction of the human perception of that light.

In colorimetry, the CIE 1976L*, u*, v*color space, commonly known by its abbreviation CIELUV, is a color space adopted by the International Commission on Illumination (CIE) in 1976, as a simple-to-compute transformation of the 1931 CIE XYZ color space, but which attempted perceptual uniformity. It is extensively used for applications such as computer graphics which deal with colored lights. Although additive mixtures of different colored lights will fall on a line in CIELUV's uniform chromaticity diagram, such additive mixtures will not, contrary to popular belief, fall along a line in the CIELUV color space unless the mixtures are constant in lightness.

Rec. 709, also known as Rec.709, BT.709, and ITU 709, is a standard developed by ITU-R for image encoding and signal characteristics of high-definition television.

ITU-R Recommendation BT.2100, more commonly known by the abbreviations Rec. 2100 or BT.2100, introduced high-dynamic-range television (HDR-TV) by recommending the use of the perceptual quantizer or hybrid log–gamma (HLG) transfer functions instead of the traditional "gamma" previously used for SDR-TV.