Grand ensemble

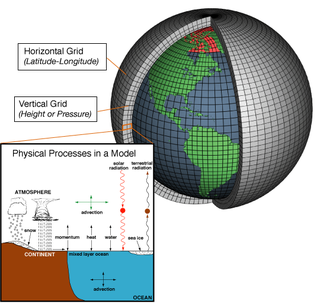

A grand ensemble is an ensemble of ensembles: at least two ensembles must be nested. This is best illustrated in the diagram above.

A climate ensemble involves slightly different models of the climate system. The ensemble average is expected to perform better than individual model runs. [1] There are at least five different types, to be described below.
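The claim that an ensemble average tends to outperform individual runs can be illustrated with a minimal sketch. It assumes, purely for illustration, that each member equals a known "truth" plus independent random error; real climate models share errors, so the gain in practice is smaller. The function names and the synthetic signal are hypothetical.

```python
import math
import random


def rmse(predicted, truth):
    """Root-mean-square error between two equally long sequences."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(predicted, truth)) / len(truth))


def demo_ensemble_mean(n_members=10, n_points=500, seed=0):
    """Compare the error of each member with the error of the ensemble mean."""
    rng = random.Random(seed)
    # Synthetic "truth": a smooth periodic signal (illustrative only).
    truth = [math.sin(2 * math.pi * i / 50) for i in range(n_points)]
    # Assumption: every member is the truth plus independent Gaussian error.
    members = [[t + rng.gauss(0.0, 1.0) for t in truth] for _ in range(n_members)]
    # Average across members at each point.
    ensemble_mean = [sum(column) / n_members for column in zip(*members)]
    member_errors = [rmse(member, truth) for member in members]
    return member_errors, rmse(ensemble_mean, truth)


member_errors, mean_error = demo_ensemble_mean()
print("best single member RMSE:", min(member_errors))
print("ensemble mean RMSE:", mean_error)
```

With independent errors, the error of the mean shrinks roughly as one over the square root of the number of members; correlated errors weaken this effect.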

An ensemble is usually run in order to deal with uncertainties in the system. An ultimate aim may be to produce policy-relevant information, such as a probability distribution function of different outcomes. This is proving to be very difficult due to a number of problems.

Multi-model ensembles (MMEs) are widely used in IPCC assessments, and a comprehensive collection of climate models can be accessed in the Coupled Model Intercomparison Project. Members of a multi-model ensemble are developed by different organisations involved in climate change research and can differ substantially in their software design and programming approach, their handling of spatial discretisation and exact formulation of physical, chemical and biological processes. The benefits of using a multi-model ensemble are seen in "the consistently better performance of the multi-model when considering all aspects of the predictions". [2]

Perturbed physics ensembles (PPEs) form the main scientific focus of the Climateprediction.net project. Modern climate models do a good job of simulating many large-scale features of present-day climate. However, these models contain large numbers of adjustable parameters which are known, individually, to have a significant impact on simulated climate. While many of these are well constrained by observations, many others are subject to considerable uncertainty. We do not know the extent to which different choices of parameter settings or schemes may provide equally realistic simulations of 20th-century climate but different forecasts for the 21st century. The most thorough way to investigate this uncertainty is to run a massive ensemble experiment in which each relevant parameter combination is investigated. A more general term is "perturbed parameter ensemble" (also abbreviated as PPE), since parameters other than physical ones, such as those relating to the carbon cycle, atmospheric chemistry or land use, can also be perturbed.
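As a rough sketch of what "each relevant parameter combination" means, the following enumerates every combination of a few candidate values. The parameter names and values are hypothetical placeholders, not the settings of any actual experiment.

```python
import itertools

# Hypothetical parameters and candidate values (illustrative only).
PARAMETER_VALUES = {
    "entrainment_coefficient": [0.6, 3.0, 9.0],
    "ice_fall_speed": [0.5, 1.0, 2.0],
    "critical_relative_humidity": [0.6, 0.7, 0.9],
}


def build_ppe(parameter_values):
    """Return one parameter dictionary per ensemble member (full factorial design)."""
    names = list(parameter_values)
    value_lists = [parameter_values[name] for name in names]
    return [dict(zip(names, combo)) for combo in itertools.product(*value_lists)]


members = build_ppe(PARAMETER_VALUES)
print(len(members), "members; first member:", members[0])
```

The member count is the product of the number of values per parameter, so it grows very quickly as parameters are added, which is one reason such experiments need very large numbers of runs.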

Initial condition ensembles use the same model, with the same atmospheric physics parameters and forcings, but are run from a variety of different starting states. Because the climate system is chaotic, tiny changes in quantities such as temperature, wind, and humidity in one place can lead to very different paths for the system as a whole. This can be addressed by starting several runs from slightly different initial conditions and then looking at the evolution of the group as a whole. Weather forecasting uses a similar approach.

Having an initial condition ensemble can help to identify natural variability in the system and deal with it.
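The sensitivity to starting states can be sketched with the Lorenz 1963 system, a standard three-variable chaotic toy model used here as a stand-in for a climate model (an assumption of this example, not something the text prescribes). Members differ only by a tiny perturbation to one variable, and the spread across members is tracked over time.

```python
import random
import statistics


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivative of the Lorenz 1963 system with its classic parameters."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, h):
        return tuple(b + h * s for b, s in zip(base, slope))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run_initial_condition_ensemble(n_members=5, n_steps=3000, dt=0.01, seed=1):
    """Return the spread (standard deviation of x across members) at every step."""
    rng = random.Random(seed)
    # Same model and parameters; only the initial x differs, by about 1e-6.
    members = [(1.0 + rng.gauss(0.0, 1e-6), 1.0, 1.0) for _ in range(n_members)]
    spread = [statistics.pstdev([m[0] for m in members])]
    for _ in range(n_steps):
        members = [rk4_step(m, dt) for m in members]
        spread.append(statistics.pstdev([m[0] for m in members]))
    return spread


spread = run_initial_condition_ensemble()
print("initial spread:", spread[0])
print("final spread:", spread[-1])
```

The spread starts near the size of the perturbation and grows until the members are effectively unrelated; the range they cover at that point is a measure of the internal variability of this toy system.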

In a forcing ensemble, a model is subjected to different forcings. These may correspond to different scenarios, such as those described in the Special Report on Emissions Scenarios and, more recently, the Representative Concentration Pathways.

Grand ensembles combine these approaches: as noted above, at least two ensembles are nested, for example an initial condition ensemble run for each member of a perturbed physics ensemble.
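The nesting can be sketched as an outer loop over perturbed parameters and an inner loop over initial conditions. The model below is a hypothetical zero-dimensional energy balance relaxation chosen only to keep the example short; the forcing, feedback and heat capacity values are illustrative assumptions.

```python
from statistics import mean


def toy_energy_balance(feedback, initial_anomaly, forcing=3.7, heat_capacity=8.0, n_years=200):
    """Step a toy temperature anomaly forward one year at a time (illustrative model)."""
    temperature = initial_anomaly
    for _ in range(n_years):
        # Net heating divided by heat capacity gives the yearly temperature change.
        temperature += (forcing - feedback * temperature) / heat_capacity
    return temperature


def run_grand_ensemble(feedbacks, initial_anomalies):
    """Outer ensemble: perturbed feedback parameter. Inner ensemble: initial conditions."""
    return {
        feedback: [toy_energy_balance(feedback, t0) for t0 in initial_anomalies]
        for feedback in feedbacks
    }


grand = run_grand_ensemble(feedbacks=[0.8, 1.2, 1.6], initial_anomalies=[-0.1, 0.0, 0.1])
inner_means = {feedback: mean(runs) for feedback, runs in grand.items()}
print(inner_means)
```

Keeping the two levels separate makes it possible to compare variation within each inner ensemble against variation between them. This toy model is not chaotic, so its inner ensembles converge; in a real climate model they would not.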

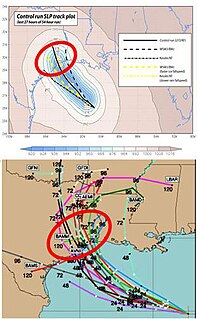

Weather forecasting uses initial condition ensembles.

Climate ensembles were used to project future changes in the occurrence of selected pests of crops. [3]

The European Centre for Medium-Range Weather Forecasts (ECMWF) is an independent intergovernmental organisation supported by most of the nations of Europe. It is based at three sites: Shinfield Park, Reading, United Kingdom; Bologna, Italy; and Bonn, Germany. It operates one of the largest supercomputer complexes in Europe and the world's largest archive of numerical weather prediction data.

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. It does not assume or postulate any natural laws, but explains the macroscopic behavior of nature from the behavior of such ensembles.

A general circulation model (GCM) is a type of climate model. It employs a mathematical model of the general circulation of a planetary atmosphere or ocean. It uses the Navier–Stokes equations on a rotating sphere with thermodynamic terms for various energy sources. These equations are the basis for computer programs used to simulate the Earth's atmosphere or oceans. Atmospheric and oceanic GCMs are key components along with sea ice and land-surface components.

Scenario planning, scenario thinking, scenario analysis, scenario prediction and the scenario method all describe a strategic planning method that some organizations use to make flexible long-term plans. It is in large part an adaptation and generalization of classic methods used by military intelligence.

Climateprediction.net (CPDN) is a volunteer computing project to investigate and reduce uncertainties in climate modelling. It aims to do this by running hundreds of thousands of different models using the donated idle time of ordinary personal computers, thereby leading to a better understanding of how models are affected by small changes in the many parameters known to influence the global climate.

Numerical weather prediction (NWP) uses mathematical models of the atmosphere and oceans to predict the weather based on current weather conditions. Though first attempted in the 1920s, it was not until the advent of computer simulation in the 1950s that numerical weather predictions produced realistic results. A number of global and regional forecast models are run in different countries worldwide, using current weather observations relayed from radiosondes, weather satellites and other observing systems as inputs.

Ensemble forecasting is a method used in numerical weather prediction. Instead of making a single forecast of the most likely weather, a set of forecasts is produced. This set of forecasts aims to give an indication of the range of possible future states of the atmosphere. Ensemble forecasting is a form of Monte Carlo analysis. The multiple simulations are conducted to account for the two usual sources of uncertainty in forecast models: (1) the errors introduced by the use of imperfect initial conditions, amplified by the chaotic nature of the evolution equations of the atmosphere, which is often referred to as sensitive dependence on initial conditions; and (2) errors introduced because of imperfections in the model formulation, such as the approximate mathematical methods used to solve the equations. Ideally, the verified future atmospheric state should fall within the predicted ensemble spread, and the amount of spread should be related to the uncertainty (error) of the forecast. In general, this approach can be used to make probabilistic forecasts of any dynamical system, and not just for weather prediction.
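A minimal sketch of how a set of forecasts might be summarised: the ensemble mean, the spread, whether a verifying observation falls inside the ensemble range, and the fraction of members exceeding a threshold as a simple event probability. The member values, observation and threshold are made up for illustration.

```python
import statistics


def summarise_ensemble(members, observation, threshold):
    """Summarise one ensemble forecast of a scalar quantity."""
    return {
        "mean": statistics.mean(members),
        "spread": statistics.stdev(members),
        # Did the verifying observation fall inside the range spanned by the members?
        "observation_within_range": min(members) <= observation <= max(members),
        # Fraction of members above the threshold, read as an event probability.
        "probability_above_threshold": sum(m > threshold for m in members) / len(members),
    }


# Hypothetical eight-member temperature forecast in degrees Celsius.
forecast = [14.2, 15.1, 15.8, 16.4, 17.0, 13.9, 15.5, 16.1]
summary = summarise_ensemble(forecast, observation=15.0, threshold=16.0)
print(summary)
```

Treating the member fraction as a probability assumes that members are equally likely, which operational systems usually refine through calibration.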

Data assimilation is a mathematical discipline that seeks to optimally combine theory with observations. There may be a number of different goals sought – for example, to determine the optimal state estimate of a system, to determine initial conditions for a numerical forecast model, to interpolate sparse observation data using knowledge of the system being observed, to set numerical parameters based on training a model from observed data. Depending on the goal, different solution methods may be used. Data assimilation is distinguished from other forms of machine learning, image analysis, and statistical methods in that it utilizes a dynamical model of the system being analyzed.
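The simplest instance of combining a model estimate with an observation is the scalar case sketched below. It assumes a single model background value and a single observation, each with unbiased Gaussian errors of known variance that are uncorrelated with each other; the function and argument names are hypothetical.

```python
def scalar_analysis(background, background_var, observation, observation_var):
    """Variance-weighted combination of a model background and one observation."""
    # The gain weights the observation by the relative uncertainty of the background.
    gain = background_var / (background_var + observation_var)
    analysis = background + gain * (observation - background)
    # The combined estimate is more certain than the background alone.
    analysis_var = (1.0 - gain) * background_var
    return analysis, analysis_var


analysis, analysis_var = scalar_analysis(
    background=10.0, background_var=4.0, observation=12.0, observation_var=1.0
)
print(analysis, analysis_var)
```

Because the observation is more certain than the background in this example, the analysis lands closer to the observation, and its variance is smaller than either input variance.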

In physics, maximum entropy thermodynamics views equilibrium thermodynamics and statistical mechanics as inference processes. More specifically, MaxEnt applies inference techniques rooted in Shannon information theory, Bayesian probability, and the principle of maximum entropy. These techniques are relevant to any situation requiring prediction from incomplete or insufficient data. MaxEnt thermodynamics began with two papers by Edwin T. Jaynes published in the 1957 Physical Review.

Probabilistic forecasting summarizes what is known about, or opinions about, future events. In contrast to single-valued forecasts, probabilistic forecasts assign a probability to each of a number of different outcomes, and the complete set of probabilities represents a probability forecast. Thus, probabilistic forecasting is a type of probabilistic classification.

Uncertainty quantification (UQ) is the science of quantitative characterization and reduction of uncertainties in both computational and real world applications. It tries to determine how likely certain outcomes are if some aspects of the system are not exactly known. An example would be to predict the acceleration of a human body in a head-on crash with another car: even if the speed was exactly known, small differences in the manufacturing of individual cars, how tightly every bolt has been tightened, etc., will lead to different results that can only be predicted in a statistical sense.

Ecological forecasting uses knowledge of physics, ecology and physiology to predict how ecological populations, communities, or ecosystems will change in the future in response to environmental factors such as climate change. The goal of the approach is to provide natural resource managers with information to anticipate and respond to short and long-term climate conditions.

Species distribution modelling (SDM), also known as environmental (or ecological) niche modelling (ENM), habitat modelling, predictive habitat distribution modelling, and range mapping, uses computer algorithms to predict the distribution of a species across geographic space and time using environmental data. The environmental data are most often climate data, but can include other variables such as soil type, water depth, and land cover. SDMs are used in several research areas in conservation biology, ecology and evolution. These models can be used to understand how environmental conditions influence the occurrence or abundance of a species, and for predictive purposes. Predictions from an SDM may be of a species’ future distribution under climate change, a species’ past distribution in order to assess evolutionary relationships, or the potential future distribution of an invasive species. Predictions of current and/or future habitat suitability can be useful for management applications.

Robust decision-making (RDM) is an iterative decision analytic framework that aims to help identify potential robust strategies, characterize the vulnerabilities of such strategies, and evaluate the tradeoffs among them. RDM focuses on informing decisions under conditions of what is called "deep uncertainty", that is, conditions where the parties to a decision do not know or do not agree on the system model(s) relating actions to consequences or the prior probability distributions for the key input parameters to those model(s).

Climate change scenarios or socioeconomic scenarios are projections of future greenhouse gas (GHG) emissions used by analysts to assess future vulnerability to climate change. Scientists create scenarios and pathways to survey possible long-term routes and to explore the effectiveness of mitigation. They help us understand what the future may hold and envision the future of the human environment system. Producing scenarios requires estimates of future population levels, economic activity, the structure of governance, social values, and patterns of technological change. Economic and energy modelling can be used to analyze and quantify the effects of such drivers.

Edward Epstein was an American meteorologist who pioneered the use of statistical methods in weather forecasting and the development of ensemble forecasting techniques.

Bayesian operational modal analysis (BAYOMA) adopts a Bayesian system identification approach for operational modal analysis (OMA). Operational modal analysis aims at identifying the modal properties of a constructed structure using only its (output) vibration response measured under operating conditions. The (input) excitations to the structure are not measured but are assumed to be 'ambient'. In a Bayesian context, the modal parameters are viewed as uncertain parameters or random variables whose probability distribution is updated from the prior distribution to the posterior distribution. The peak(s) of the posterior distribution represent the most probable value(s) (MPV) suggested by the data, while the spread of the distribution around the MPV reflects the remaining uncertainty of the parameters.

The North American Ensemble Forecast System (NAEFS) is a joint project involving the Meteorological Service of Canada (MSC) in Canada, the National Weather Service (NWS) in the United States, and the National Meteorological Service of Mexico (NMSM) in Mexico providing numerical weather prediction ensemble guidance for the 1- to 16-day forecast period. The NAEFS combines the Canadian MSC and the US NWS global ensemble prediction systems, improving probabilistic operational guidance over what can be built from any individual country's ensemble. Model guidance from the NAEFS is incorporated into the forecasts of the respective national agencies.

Strategic planning and uncertainty intertwine in a realistic framework where companies and organizations are bound to develop and compete in a world dominated by complexity, ambiguity, and uncertainty, in which unpredictable, unstoppable and, sometimes, meaningless circumstances may have a direct impact on the expected outcomes. In this setting, formal planning systems are criticized by a number of academics, who argue that conventional methods, based on classic analytical tools, fail to shape a strategy that can adjust to the changing market and enhance the competitiveness of each business unit, which is the basic principle of a competitive business strategy. Strategy planning systems are supposed to produce the best approaches to achieving long-term objectives. However, since strategy deals with the future, the strategic context of an organization will always be uncertain. The first choice an organisation has to make is therefore when to act: now, or once the uncertainty has been resolved.

Non-homogeneous Gaussian regression (NGR) is a type of statistical regression analysis used in the atmospheric sciences as a way to convert ensemble forecasts into probabilistic forecasts. Relative to simple linear regression, NGR uses the ensemble spread as an additional predictor, which improves the prediction of uncertainty and allows the predicted uncertainty to vary from case to case. The prediction of uncertainty in NGR is derived from both past forecast error statistics and the ensemble spread. NGR was originally developed for site-specific medium-range temperature forecasting, but has since also been applied to site-specific medium-range wind forecasting and to seasonal forecasts, and has been adapted for precipitation forecasting. The introduction of NGR was the first demonstration that probabilistic forecasts that take account of the varying ensemble spread could achieve better skill scores than forecasts based on standard model output statistics approaches applied to the ensemble mean.
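The idea can be sketched as a Gaussian predictive distribution whose mean is a linear function of the ensemble mean and whose variance is a linear function of the ensemble variance. In the example below the coefficients are fitted with two ordinary least squares steps, which is a deliberate simplification for illustration: NGR coefficients are normally estimated by optimising a likelihood or a probabilistic score. The training data are synthetic and all names are hypothetical.

```python
import math
import random


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def fit_ngr(ens_mean, ens_var, obs):
    """Simplified two-step fit: mean = a + b * ens_mean, variance = c + d * ens_var."""
    a, b = fit_line(ens_mean, obs)
    # Squared residuals of the mean fit act as noisy samples of the error variance.
    squared_residuals = [(o - (a + b * m)) ** 2 for m, o in zip(ens_mean, obs)]
    c, d = fit_line(ens_var, squared_residuals)
    return a, b, c, d


def predict_ngr(params, ens_mean, ens_var, floor=1e-6):
    """Return the predictive mean and standard deviation for one forecast case."""
    a, b, c, d = params
    return a + b * ens_mean, math.sqrt(max(c + d * ens_var, floor))


# Synthetic training set in which the error variance truly depends on the spread.
rng = random.Random(42)
ens_mean = [rng.gauss(0.0, 3.0) for _ in range(5000)]
ens_var = [rng.uniform(0.1, 2.0) for _ in range(5000)]
obs = [
    2.0 + 1.5 * m + rng.gauss(0.0, math.sqrt(0.5 + 2.0 * v))
    for m, v in zip(ens_mean, ens_var)
]
params = fit_ngr(ens_mean, ens_var, obs)
print(predict_ngr(params, ens_mean=1.0, ens_var=0.1))
print(predict_ngr(params, ens_mean=1.0, ens_var=2.0))
```

The two predictions share the same mean but differ in standard deviation, which is the case-to-case variation in predicted uncertainty that distinguishes this approach from a regression on the ensemble mean alone.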