The European Centre for Medium-Range Weather Forecasts (ECMWF) is an independent intergovernmental organisation supported by most of the nations of Europe. It is based at three sites: Shinfield Park, Reading, United Kingdom; Bologna, Italy; and Bonn, Germany. It operates one of the largest supercomputer complexes in Europe and the world's largest archive of numerical weather prediction data.

Weather forecasting is the application of science and technology to predict the conditions of the atmosphere for a given location and time. People have attempted to predict the weather informally for millennia and formally since the 19th century.

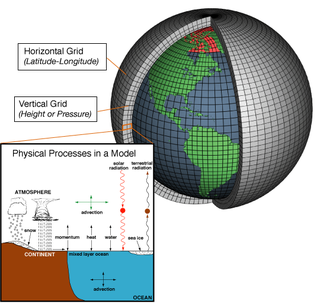

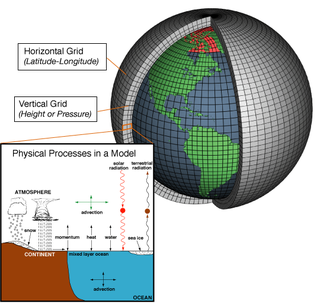

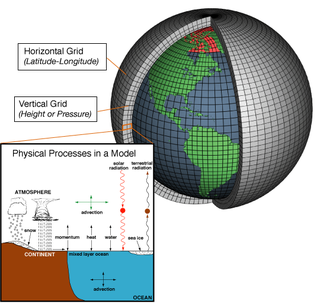

A general circulation model (GCM) is a type of climate model. It employs a mathematical model of the general circulation of a planetary atmosphere or ocean. It uses the Navier–Stokes equations on a rotating sphere with thermodynamic terms for various energy sources. These equations are the basis for computer programs used to simulate the Earth's atmosphere or oceans. Atmospheric and oceanic GCMs are key components of global climate models, along with sea ice and land-surface components.

Numerical weather prediction (NWP) uses mathematical models of the atmosphere and oceans to predict the weather based on current weather conditions. Though first attempted in the 1920s, it was not until the advent of computer simulation in the 1950s that numerical weather predictions produced realistic results. A number of global and regional forecast models are run in different countries worldwide, using current weather observations relayed from radiosondes, weather satellites and other observing systems as inputs.

Data assimilation is a mathematical discipline that seeks to optimally combine theory with observations. Several different goals may be sought: for example, to determine the optimal state estimate of a system, to determine initial conditions for a numerical forecast model, to interpolate sparse observation data using knowledge of the system being observed, or to set numerical parameters by training a model on observed data. Depending on the goal, different solution methods may be used. Data assimilation is distinguished from other forms of machine learning, image analysis, and statistical methods in that it utilizes a dynamical model of the system being analyzed.
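As a rough illustration of what "optimally combine" can mean, the sketch below blends a single model background value with a single observation of the same quantity, weighting each by its error variance. It assumes unbiased, independent, Gaussian errors, and the function and parameter names are hypothetical. Operational systems use far more elaborate schemes; this is only the simplest scalar case.

```python
def scalar_analysis(background, obs, var_background, var_obs):
    """Minimum-variance blend of a background value and one observation.

    Assumes (for illustration only) that both errors are unbiased,
    independent, and described by the given variances.
    """
    # Weight given to the observation: larger when the background is less certain.
    gain = var_background / (var_background + var_obs)
    # Move the background toward the observation by that weight.
    analysis = background + gain * (obs - background)
    # The blended estimate is more certain than the background alone.
    var_analysis = (1.0 - gain) * var_background
    return analysis, var_analysis


# Hypothetical numbers: a 280.0 K model background and a 282.0 K observation,
# each with an error variance of 1.0 K^2.
analysis, var_analysis = scalar_analysis(
    background=280.0, obs=282.0, var_background=1.0, var_obs=1.0
)
print(analysis, var_analysis)
```

With equal error variances the analysis falls halfway between the two inputs, and its variance is half that of either one.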

The Brier Score is a strictly proper score function or strictly proper scoring rule that measures the accuracy of probabilistic predictions. For unidimensional predictions, it is strictly equivalent to the mean squared error as applied to predicted probabilities.
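For binary events, the score can be computed as the mean squared difference between the forecast probabilities and the observed outcomes (1 if the event occurred, 0 otherwise). The following is a minimal sketch with made-up forecasts; the function name and sample data are illustrative only.

```python
def brier_score(probabilities, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    if len(probabilities) != len(outcomes):
        raise ValueError("probabilities and outcomes must have the same length")
    if not probabilities:
        raise ValueError("at least one forecast is required")
    squared_errors = [(p - o) ** 2 for p, o in zip(probabilities, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical forecasts of rain on four days, and whether it rained.
forecast_probs = [0.9, 0.1, 0.5, 0.0]
observed = [1, 0, 1, 0]
score = brier_score(forecast_probs, observed)
print(score)  # approximately 0.0675
```

Lower values are better: a perfect set of forecasts scores 0, and the worst possible set scores 1.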

In decision theory, a scoring rule provides evaluation metrics for probabilistic predictions or forecasts. While "regular" loss functions assign a goodness-of-fit score to a predicted value and an observed value, scoring rules assign such a score to a predicted probability distribution and an observed value. A scoring function, on the other hand, provides a summary measure for the evaluation of point predictions, i.e. one predicts a property or functional of the distribution, such as the expectation or the median.

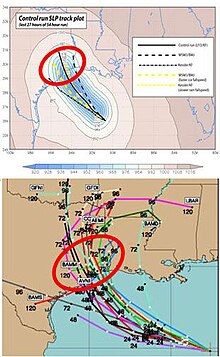

A tropical cyclone forecast model is a computer program that uses meteorological data to forecast aspects of the future state of tropical cyclones. There are three types of models: statistical, dynamical, and combined statistical-dynamical. Dynamical models utilize powerful supercomputers with sophisticated mathematical modeling software and meteorological data to calculate future weather conditions. Statistical models forecast the evolution of a tropical cyclone in a simpler manner, by extrapolating from historical datasets, and thus can be run quickly on platforms such as personal computers. Statistical-dynamical models use aspects of both types of forecasting. Four primary types of forecasts exist for tropical cyclones: track, intensity, storm surge, and rainfall. Dynamical models were not developed until the 1970s and the 1980s, with earlier efforts focused on the storm surge problem.

In atmospheric science, an atmospheric model is a mathematical model constructed around the full set of primitive, dynamical equations which govern atmospheric motions. It can supplement these equations with parameterizations for turbulent diffusion, radiation, moist processes, heat exchange, soil, vegetation, surface water, the kinematic effects of terrain, and convection. Most atmospheric models are numerical, i.e. they discretize equations of motion. They can predict microscale phenomena such as tornadoes and boundary layer eddies, sub-microscale turbulent flow over buildings, as well as synoptic and global flows. The horizontal domain of a model is either global, covering the entire Earth, or regional (limited-area), covering only part of the Earth. The different types of models run are thermotropic, barotropic, hydrostatic, and nonhydrostatic. Some of these model types make assumptions about the atmosphere that lengthen the time steps used and increase computational speed.

In weather forecasting, model output statistics (MOS) is a multiple linear regression technique in which predictands, often near-surface quantities, are related statistically to one or more predictors. The predictors are typically forecasts from a numerical weather prediction (NWP) model, climatic data, and, if applicable, recent surface observations. Thus, output from NWP models can be transformed by the MOS technique into sensible weather parameters that are familiar to a layperson.
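The regression step can be sketched as an ordinary least-squares fit of an observed quantity on model-derived predictors. The example below uses plain Python and solves the normal equations directly; the predictor choice (model 2 m temperature and model wind speed), the data, and all function names are assumptions made for illustration, not the procedure of any particular forecasting centre.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("predictors are linearly dependent")
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(n):
            if row != col:
                factor = m[row][col] / m[col][col]
                m[row] = [x - factor * y for x, y in zip(m[row], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_mos(predictor_rows, observations):
    """Least-squares coefficients [intercept, b1, b2, ...] via normal equations."""
    design = [[1.0] + list(row) for row in predictor_rows]
    k = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(design, observations)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def apply_mos(coeffs, predictor_row):
    """Turn raw model output into a statistically adjusted forecast."""
    return coeffs[0] + sum(c * x for c, x in zip(coeffs[1:], predictor_row))


# Hypothetical training set: (model temperature in deg C, model wind in m/s).
predictors = [(10.0, 3.0), (12.0, 5.0), (8.0, 2.0), (15.0, 7.0), (11.0, 1.0)]
# Synthetic "observations" built from a known relation so the fit can be checked.
observed = [1.5 + 0.9 * t - 0.2 * w for t, w in predictors]

coeffs = fit_mos(predictors, observed)
corrected = apply_mos(coeffs, (13.0, 4.0))
print(coeffs, corrected)
```

In practice a library least-squares routine would normally replace the hand-written solver, and the training set would span many past forecast and observation pairs.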

The quantitative precipitation forecast (QPF) is the expected amount of melted precipitation accumulated over a specified time period over a specified area. A QPF is created when precipitation amounts reaching a minimum threshold are expected during the forecast's valid period. Valid periods of precipitation forecasts are normally synoptic hours such as 00:00, 06:00, 12:00 and 18:00 GMT. Terrain is accounted for in QPFs either through the use of topography or through climatological precipitation patterns derived from finely detailed observations. Starting in the mid-to-late 1990s, QPFs were used within hydrologic forecast models to simulate the impact on rivers throughout the United States. Forecast models show significant sensitivity to humidity levels within the planetary boundary layer, or in the lowest levels of the atmosphere, which decreases with height. A QPF can be generated on a quantitative basis, forecasting amounts, or on a qualitative basis, forecasting the probability of a specific amount. Radar imagery forecasting techniques show higher skill than model forecasts within 6 to 7 hours of the time of the radar image. The forecasts can be verified using rain gauge measurements, weather radar estimates, or a combination of both. Various skill scores can be determined to measure the value of the rainfall forecast.

The history of numerical weather prediction considers how the use of current weather conditions as input to mathematical models of the atmosphere and oceans, in order to predict the weather and future sea state, has changed over the years. Though first attempted manually in the 1920s, it was not until the advent of the computer and computer simulation that computation time was reduced to less than the forecast period itself. ENIAC was used to create the first forecasts via computer in 1950, and over the years more powerful computers have been used to increase the size of initial datasets and to use more complicated versions of the equations of motion. The development of global forecasting models led to the first climate models. The development of limited-area (regional) models facilitated advances in forecasting the tracks of tropical cyclones as well as air quality in the 1970s and 1980s.

A prognostic chart is a map displaying the likely weather forecast for a future time. Such charts are generated by atmospheric models as output from numerical weather prediction and contain a variety of information such as temperature, wind, precipitation and weather fronts. They can also indicate derived atmospheric fields such as vorticity, stability indices, or frontogenesis. Forecast errors need to be taken into account and can be determined either via absolute error, or by considering persistence and absolute error combined.

Edward Epstein was an American meteorologist who pioneered the use of statistical methods in weather forecasting and the development of ensemble forecasting techniques.

Timothy Noel Palmer is a mathematical physicist by training. He has spent most of his career working on the dynamics and predictability of weather and climate. Among various research achievements, he pioneered the development of probabilistic ensemble forecasting techniques for weather and climate prediction. These techniques are now standard in operational weather and climate prediction around the world, and are central for reliable decision making for many commercial and humanitarian applications.

The THORPEX Interactive Grand Global Ensemble (TIGGE) is an implementation of ensemble forecasting for global weather forecasting and is part of THORPEX, an international research programme established in 2003 by the World Meteorological Organization to accelerate improvements in the utility and accuracy of weather forecasts up to two weeks ahead.

The North American Ensemble Forecast System (NAEFS) is a joint project involving the Meteorological Service of Canada (MSC) in Canada, the National Weather Service (NWS) in the United States, and the National Meteorological Service of Mexico (NMSM) in Mexico providing numerical weather prediction ensemble guidance for the 1- to 16-day forecast period. The NAEFS combines the Canadian MSC and the US NWS global ensemble prediction systems, improving probabilistic operational guidance over what can be built from any individual country's ensemble. Model guidance from the NAEFS is incorporated into the forecasts of the respective national agencies.

The cost-loss model, also called the cost/loss model or the cost-loss decision model, is a model used to understand how the predicted probability of adverse events affects the decision of whether to take a costly precautionary measure to protect oneself against losses from that event. The threshold probability above which it makes sense to take the precautionary measure equals the ratio of the cost of the preventative measure to the loss averted, and this threshold is termed the cost/loss ratio or cost-loss ratio. The model is typically used in the context of using prediction about weather conditions to decide whether to take a precautionary measure or not.
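The decision rule described above can be sketched in a few lines: protect when the forecast probability exceeds the cost/loss ratio. The function names and monetary figures below are hypothetical, and the sketch assumes that the precaution fully averts the loss.

```python
def should_protect(probability, cost, loss):
    """Return True when the forecast probability exceeds the cost/loss ratio."""
    if cost < 0 or loss <= 0:
        raise ValueError("cost must be non-negative and loss must be positive")
    return probability > cost / loss


def expected_expense(probability, cost, loss, protect):
    """Expected expense of one decision, assuming protection averts the whole loss."""
    return cost if protect else probability * loss


# Hypothetical case: protection costs 100 and averts a loss of 1000,
# so the threshold probability is 100 / 1000 = 0.1.
cost, loss = 100.0, 1000.0
decide_high = should_protect(0.3, cost, loss)   # 0.3 is above the threshold
decide_low = should_protect(0.05, cost, loss)   # 0.05 is below the threshold
print(decide_high, decide_low)
```

At a forecast probability of 0.3, protecting costs 100 while not protecting costs 300 on average, which is why the rule favours protection in that case.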

Non-homogeneous Gaussian regression (NGR) is a type of statistical regression analysis used in the atmospheric sciences as a way to convert ensemble forecasts into probabilistic forecasts. Relative to simple linear regression, NGR uses the ensemble spread as an additional predictor, which improves the prediction of uncertainty and allows the predicted uncertainty to vary from case to case. The prediction of uncertainty in NGR is derived from both past forecast error statistics and the ensemble spread. NGR was originally developed for site-specific medium-range temperature forecasting, but has since also been applied to site-specific medium-range wind forecasting and to seasonal forecasts, and has been adapted for precipitation forecasting. The introduction of NGR was the first demonstration that probabilistic forecasts that take account of the varying ensemble spread could achieve better skill scores than forecasts based on standard model output statistics approaches applied to the ensemble mean.
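One common way to write such a model, assumed here for illustration, is a Gaussian predictive distribution whose mean is linear in the ensemble mean and whose variance is linear in the ensemble variance. The sketch below applies that form with hypothetical coefficients `a`, `b`, `c` and `d`. Fitting them to past forecast and observation pairs is the regression step itself and is not shown.

```python
import math
import statistics


def ngr_predict(ens_mean, ens_var, a, b, c, d):
    """Predictive mean and standard deviation under the assumed NGR form."""
    mu = a + b * ens_mean        # bias-corrected ensemble mean
    variance = c + d * ens_var   # uncertainty that varies with ensemble spread
    if variance <= 0:
        raise ValueError("predicted variance must be positive")
    return mu, math.sqrt(variance)


def prob_exceed(threshold, mu, sigma):
    """Probability that a Gaussian N(mu, sigma^2) variable exceeds threshold."""
    z = (threshold - mu) / (sigma * math.sqrt(2.0))
    return 0.5 * (1.0 - math.erf(z))


# Hypothetical four-member temperature ensemble (deg C).
members = [14.0, 15.0, 16.0, 15.0]
ens_mean = statistics.mean(members)
ens_var = statistics.pvariance(members)

# Hypothetical coefficients; real ones come from fitting to past forecasts.
mu, sigma = ngr_predict(ens_mean, ens_var, a=0.5, b=1.0, c=1.0, d=1.0)
p_warm = prob_exceed(15.5, mu, sigma)
print(mu, sigma, p_warm)
```

Because the predicted variance depends on `ens_var`, a tightly clustered ensemble yields a sharper forecast distribution than a widely spread one.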