In quantum mechanics, indistinguishable particles are particles that cannot be distinguished from one another, even in principle. Species of identical particles include, but are not limited to, elementary particles, composite subatomic particles, as well as atoms and molecules. Although all known indistinguishable particles only exist at the quantum scale, there is no exhaustive list of all possible sorts of particles nor a clear-cut limit of applicability, as explored in quantum statistics. They were first discussed by Werner Heisenberg and Paul Dirac in 1926.

The mathematical formulations of quantum mechanics are those mathematical formalisms that permit a rigorous description of quantum mechanics. They draw mainly on a part of functional analysis, especially Hilbert spaces, which are a kind of linear space. These formalisms are distinguished from those of physical theories developed prior to the early 1900s by their use of abstract mathematical structures, such as infinite-dimensional Hilbert spaces, and operators on these spaces. In brief, values of physical observables such as energy and momentum were no longer considered as values of functions on phase space, but as eigenvalues; more precisely, as spectral values of linear operators in Hilbert space.

In quantum mechanics, the Pauli exclusion principle states that two or more identical particles with half-integer spins cannot simultaneously occupy the same quantum state within a system that obeys the laws of quantum mechanics. This principle was formulated by Austrian physicist Wolfgang Pauli in 1925 for electrons, and later extended to all fermions with his spin–statistics theorem of 1940.

In abstract algebra, group theory studies the algebraic structures known as groups. The concept of a group is central to abstract algebra: other well-known algebraic structures, such as rings, fields, and vector spaces, can all be seen as groups endowed with additional operations and axioms. Groups recur throughout mathematics, and the methods of group theory have influenced many parts of algebra. Linear algebraic groups and Lie groups are two branches of group theory that have experienced advances and have become subject areas in their own right.

In quantum mechanics, a density matrix is a matrix that describes an ensemble of physical systems as quantum states. It allows for the calculation of the probabilities of the outcomes of any measurements performed upon the systems of the ensemble using the Born rule. It is a generalization of the more usual state vectors or wavefunctions: while those can only represent pure states, density matrices can also represent mixed ensembles. Mixed ensembles arise in quantum mechanics in two different situations:

- when the preparation of the systems leads to numerous pure states in the ensemble, and thus one must deal with the statistics of possible preparations, and

- when one wants to describe a physical system that is entangled with another, without describing their combined state; this case is typical for a system interacting with some environment. In this case, the density matrix of an entangled system differs from that of an ensemble of pure states that, combined, would give the same statistical results upon measurement.
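The two situations above can be illustrated with a minimal sketch, assuming single qubits represented as plain Python lists of complex numbers. The helper names (`outer`, `mix`, `reduced_first_qubit`, `purity`, `born_probability`) are hypothetical and chosen only for this illustration. The purity Tr(ρ²) equals 1 for a pure state and is smaller than 1 for a mixed one.

```python
def outer(psi):
    """Return the projector |psi><psi| for a state vector psi."""
    return [[a * b.conjugate() for b in psi] for a in psi]

def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def trace(m):
    return sum(m[i][i] for i in range(len(m)))

def purity(rho):
    """Tr(rho^2): 1 for a pure state, below 1 for a mixed state."""
    return trace(matmul(rho, rho)).real

def mix(weights, states):
    """Statistical mixture sum_k p_k |psi_k><psi_k| (first situation)."""
    n = len(states[0])
    rho = [[0j] * n for _ in range(n)]
    for p, psi in zip(weights, states):
        proj = outer(psi)
        for i in range(n):
            for j in range(n):
                rho[i][j] += p * proj[i][j]
    return rho

def reduced_first_qubit(psi):
    """Partial trace over the second qubit of a two-qubit pure state
    (second situation). Amplitudes are ordered |00>, |01>, |10>, |11>."""
    return [[sum(psi[2 * i + k] * psi[2 * j + k].conjugate() for k in range(2))
             for j in range(2)] for i in range(2)]

def born_probability(rho, psi):
    """Born rule: probability <psi|rho|psi> of finding the state psi."""
    n = len(psi)
    return sum(psi[i].conjugate() * rho[i][j] * psi[j]
               for i in range(n) for j in range(n)).real

s = 2 ** -0.5
plus = [s + 0j, s + 0j]                     # (|0> + |1>)/sqrt(2)
pure = outer(plus)                          # a pure state
mixed = mix([0.5, 0.5], [[1 + 0j, 0j], [0j, 1 + 0j]])  # 50/50 mixture of |0>, |1>
bell = [s + 0j, 0j, 0j, s + 0j]             # (|00> + |11>)/sqrt(2)
reduced = reduced_first_qubit(bell)         # one half of an entangled pair

print(purity(pure), purity(mixed), purity(reduced))
print(born_probability(pure, plus), born_probability(mixed, plus))
```

In this sketch the pure state has purity close to 1, while both the statistical mixture and the reduced state of the entangled pair have purity close to 1/2.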

In quantum physics, a wave function is a mathematical description of the quantum state of an isolated quantum system. The most common symbols for a wave function are the Greek letters ψ and Ψ. Wave functions are complex-valued. For example, a wave function might assign a complex number to each point in a region of space. The Born rule provides the means to turn these complex probability amplitudes into actual probabilities. In one common form, it says that the squared modulus of a wave function that depends upon position is the probability density of measuring a particle as being at a given place. The integral of a wavefunction's squared modulus over all the system's degrees of freedom must be equal to 1, a condition called normalization. Since the wave function is complex-valued, only its relative phase and relative magnitude can be measured; its value does not, in isolation, tell anything about the magnitudes or directions of measurable observables. One has to apply quantum operators, whose eigenvalues correspond to sets of possible results of measurements, to the wave function ψ and calculate the statistical distributions for measurable quantities.
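As a rough numerical illustration of normalization and the Born rule, the sketch below assumes a one-dimensional Gaussian wave packet; the function names and the parameters `sigma` and `k` are hypothetical choices for this example. It integrates the squared modulus with a simple midpoint rule.

```python
import cmath
import math

def gaussian_packet(x, sigma=1.0, k=2.0):
    """Assumed example wave function: a normalized Gaussian with a plane-wave phase."""
    norm = (math.pi * sigma ** 2) ** -0.25
    return norm * math.exp(-x ** 2 / (2 * sigma ** 2)) * cmath.exp(1j * k * x)

def probability(psi, a, b, steps=20000):
    """Midpoint-rule integral of |psi(x)|^2 over the interval [a, b]."""
    dx = (b - a) / steps
    return sum(abs(psi(a + (i + 0.5) * dx)) ** 2 for i in range(steps)) * dx

# Normalization: the total probability should be (numerically) 1.
total = probability(gaussian_packet, -10.0, 10.0)

# Born rule: probability of finding the particle within one sigma of the origin.
inside = probability(gaussian_packet, -1.0, 1.0)

# A global phase factor leaves the position probabilities unchanged.
rotated = lambda x: cmath.exp(0.8j) * gaussian_packet(x)
inside_rotated = probability(rotated, -1.0, 1.0)

print(total, inside, inside_rotated)
```

For this particular packet with `sigma = 1`, the probability inside [-1, 1] agrees with erf(1), roughly 0.84, and multiplying the wave function by a global phase does not change it.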

In mathematics, the unitary group of degree n, denoted U(n), is the group of n × n unitary matrices, with the group operation of matrix multiplication. The unitary group is a subgroup of the general linear group GL(n, C), and it has as a subgroup the special unitary group, consisting of those unitary matrices with determinant 1.
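A small numerical check of these statements for n = 2 might look as follows. This is only a sketch: the matrices `phase` and `rotation` are example elements chosen for illustration, and the helper names are hypothetical.

```python
import cmath
import math

def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def dagger(m):
    """Conjugate transpose of a square matrix."""
    n = len(m)
    return [[m[j][i].conjugate() for j in range(n)] for i in range(n)]

def is_unitary(m, tol=1e-12):
    """Check that (m^dagger) m equals the identity up to a tolerance."""
    n = len(m)
    prod = matmul(dagger(m), m)
    return all(abs(prod[i][j] - (1 if i == j else 0)) < tol
               for i in range(n) for j in range(n))

def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def phase(phi):
    """Example element of U(2) whose determinant is exp(i*phi)."""
    return [[cmath.exp(1j * phi), 0j], [0j, 1 + 0j]]

def rotation(theta):
    """Example element of SU(2): a real rotation with determinant 1."""
    c, s = complex(math.cos(theta)), complex(math.sin(theta))
    return [[c, -s], [s, c]]

u = phase(0.7)
v = rotation(0.3)
w = matmul(u, v)  # the product of two unitary matrices is again unitary

print(is_unitary(u), is_unitary(v), is_unitary(w))
print(abs(det2(w)), det2(v))
```

Every element of U(n) has a determinant of modulus 1; the rotation additionally has determinant exactly 1, so it lies in the special unitary subgroup, while the phase matrix does not.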

In mathematics, a self-adjoint operator on a complex vector space V with inner product ⟨·,·⟩ is a linear map A (from V to itself) that is its own adjoint. That is, ⟨Ax, y⟩ = ⟨x, Ay⟩ for all x, y ∈ V. If V is finite-dimensional with a given orthonormal basis, this is equivalent to the condition that the matrix of A is a Hermitian matrix, i.e., equal to its conjugate transpose A∗. By the finite-dimensional spectral theorem, V has an orthonormal basis such that the matrix of A relative to this basis is a diagonal matrix with entries in the real numbers. This article deals with applying generalizations of this concept to operators on Hilbert spaces of arbitrary dimension.
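The finite-dimensional case can be checked numerically. The sketch below assumes V = C² with the standard inner product (taken here to be conjugate-linear in the first argument, which is a convention choice) and an example Hermitian matrix `A`; all names are hypothetical. It verifies the defining identity ⟨Ax, y⟩ = ⟨x, Ay⟩ and that the eigenvalues are real.

```python
import math

def inner(x, y):
    """Standard inner product on C^n, conjugate-linear in the first argument."""
    return sum(a.conjugate() * b for a, b in zip(x, y))

def apply(m, x):
    """Matrix-vector product m x."""
    return [sum(m[i][j] * x[j] for j in range(len(x))) for i in range(len(m))]

def is_hermitian(m, tol=1e-12):
    """Check that m equals its conjugate transpose."""
    n = len(m)
    return all(abs(m[i][j] - m[j][i].conjugate()) < tol
               for i in range(n) for j in range(n))

def eigenvalues_2x2_hermitian(m):
    """Closed-form (real) eigenvalues of a 2x2 Hermitian matrix."""
    a, d = m[0][0].real, m[1][1].real
    b = m[0][1]
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return mean - radius, mean + radius

A = [[2 + 0j, 1 - 1j],
     [1 + 1j, 3 + 0j]]          # example Hermitian matrix
x = [1 + 2j, -1j]
y = [0.5 - 1j, 2 + 1j]

lhs = inner(apply(A, x), y)     # <Ax, y>
rhs = inner(x, apply(A, y))     # <x, Ay>

print(is_hermitian(A), abs(lhs - rhs))
print(eigenvalues_2x2_hermitian(A))
```

For this example matrix the trace is 5 and the determinant is 4, so the two real eigenvalues are 1 and 4.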

The Fock space is an algebraic construction used in quantum mechanics to construct the quantum states space of a variable or unknown number of identical particles from a single particle Hilbert space H. It is named after V. A. Fock who first introduced it in his 1932 paper "Konfigurationsraum und zweite Quantelung".
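To make the construction concrete, the following sketch enumerates occupation-number basis states, assuming a finite-dimensional single-particle space H with `n_modes` basis states and truncating at a maximum particle number. The function names are hypothetical; the full Fock space is the direct sum of all n-particle sectors.

```python
from itertools import combinations, combinations_with_replacement
from math import comb

def occupation_basis(n_modes, n_particles, fermions):
    """Occupation-number tuples for n_particles identical particles in n_modes.

    Fermions occupy each mode at most once (antisymmetric sector);
    bosons may share modes (symmetric sector).
    """
    chooser = combinations if fermions else combinations_with_replacement
    basis = []
    for modes in chooser(range(n_modes), n_particles):
        occupation = [0] * n_modes
        for m in modes:
            occupation[m] += 1
        basis.append(tuple(occupation))
    return basis

def fock_sector_dimensions(n_modes, max_particles, fermions):
    """Dimension of each n-particle sector for n = 0 .. max_particles."""
    return [len(occupation_basis(n_modes, n, fermions))
            for n in range(max_particles + 1)]

fermionic = fock_sector_dimensions(3, 3, fermions=True)
bosonic = fock_sector_dimensions(3, 3, fermions=False)

print(fermionic, sum(fermionic))   # sector sizes C(3, n); total 2**3
print(bosonic)                     # sector sizes C(n + 2, n)
print(occupation_basis(3, 2, fermions=True))
```

With three modes the fermionic sectors have dimensions 1, 3, 3, 1 (the fermionic Fock space is finite-dimensional), while the bosonic sectors keep growing with the particle number.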

An operator is a function over a space of physical states onto another space of states. The simplest example of the utility of operators is the study of symmetry. Because of this, they are useful tools in classical mechanics. Operators are even more important in quantum mechanics, where they form an intrinsic part of the formulation of the theory.

In quantum mechanics, a Slater determinant is an expression that describes the wave function of a multi-fermionic system. It satisfies anti-symmetry requirements, and consequently the Pauli principle, by changing sign upon exchange of two fermions. Only a small subset of all possible fermionic wave functions can be written as a single Slater determinant, but those form an important and useful subset because of their simplicity.
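The sign change and its connection to the Pauli principle can be seen in a minimal sketch. It assumes three spinless fermions in one dimension and three unnormalized example orbitals, both chosen only for illustration, and evaluates the determinant through the permutation (Leibniz) expansion.

```python
import math
from itertools import permutations

def permutation_sign(perm):
    """Sign of a permutation, computed by counting inversions."""
    sign = 1
    for i in range(len(perm)):
        for j in range(i + 1, len(perm)):
            if perm[i] > perm[j]:
                sign = -sign
    return sign

def slater(orbitals, coords):
    """Slater determinant det[phi_j(x_i)] / sqrt(N!) via the Leibniz formula."""
    n = len(orbitals)
    total = 0.0
    for perm in permutations(range(n)):
        term = permutation_sign(perm)
        for particle, orbital in enumerate(perm):
            term *= orbitals[orbital](coords[particle])
        total += term
    return total / math.sqrt(math.factorial(n))

# Hypothetical, unnormalized single-particle orbitals used only for illustration.
orbitals = [
    lambda x: math.exp(-x * x),
    lambda x: x * math.exp(-x * x),
    lambda x: (2 * x * x - 1) * math.exp(-x * x),
]

psi = slater(orbitals, [0.1, 0.5, -0.3])
swapped = slater(orbitals, [0.5, 0.1, -0.3])      # exchange particles 1 and 2
same_place = slater(orbitals, [0.1, 0.1, -0.3])   # two particles at one point
repeated = slater([orbitals[0], orbitals[0], orbitals[2]], [0.1, 0.5, -0.3])

print(psi, swapped, same_place, repeated)
```

Exchanging two coordinates flips the sign of the wave function, and the determinant vanishes both when two particles sit at the same point and when the same orbital is used twice, which is the Pauli principle in this setting.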

In mathematics, spectral theory is an inclusive term for theories extending the eigenvector and eigenvalue theory of a single square matrix to a much broader theory of the structure of operators in a variety of mathematical spaces. It is a result of studies of linear algebra and the solutions of systems of linear equations and their generalizations. The theory is connected to that of analytic functions because the spectral properties of an operator are related to analytic functions of the spectral parameter.

In physics, the S-matrix or scattering matrix is a matrix which relates the initial state and the final state of a physical system undergoing a scattering process. It is used in quantum mechanics, scattering theory and quantum field theory (QFT).

In mathematics, a sesquilinear form is a generalization of a bilinear form that, in turn, is a generalization of the concept of the dot product of Euclidean space. A bilinear form is linear in each of its arguments, but a sesquilinear form allows one of the arguments to be "twisted" in a semilinear manner; hence the name, which originates from the Latin numerical prefix sesqui-, meaning "one and a half". The basic concept of the dot product – producing a scalar from a pair of vectors – can be generalized by allowing a broader range of scalar values and, perhaps simultaneously, by widening the definition of a vector.
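As an illustration, the sketch below takes the standard Hermitian form on Cⁿ, with the "twist" assumed to be complex conjugation acting on the first argument. That placement is a convention choice (common in physics); the other convention puts the twist on the second argument. The helper names are hypothetical.

```python
def form(x, y):
    """Standard sesquilinear form on C^n: conjugate-linear in x, linear in y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))

def scale(c, v):
    return [c * a for a in v]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

x = [1 + 2j, 3 - 1j]
y = [0.5j, 2 + 0j]
z = [-1 + 1j, 4j]
c = 2 - 3j

# Linear in the second argument: the scalar comes out unchanged.
linear_gap = abs(form(x, scale(c, y)) - c * form(x, y))

# Semilinear in the first argument: the scalar comes out conjugated.
twisted_gap = abs(form(scale(c, x), y) - c.conjugate() * form(x, y))

# Additive in each argument separately.
additive_gap = abs(form(add(x, z), y) - (form(x, y) + form(z, y)))

print(linear_gap, twisted_gap, additive_gap)
```

All three gaps are zero up to rounding, whereas pulling the scalar out of the first argument without conjugating it would fail for any non-real `c`.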

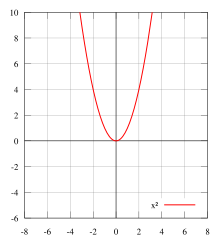

In functional analysis, a branch of mathematics, the Borel functional calculus is a functional calculus, which has particularly broad scope. Thus for instance if T is an operator, applying the squaring function s → s² to T yields the operator T². Using the functional calculus for larger classes of functions, we can for example define rigorously the "square root" of the (negative) Laplacian operator −Δ or the exponential

In mathematics, a symmetric space is a Riemannian manifold whose group of isometries contains an inversion symmetry about every point. This can be studied with the tools of Riemannian geometry, leading to consequences in the theory of holonomy; or algebraically through Lie theory, which allowed Cartan to give a complete classification. Symmetric spaces commonly occur in differential geometry, representation theory and harmonic analysis.

In differential geometry, a field of mathematics, a Courant algebroid is a vector bundle together with an inner product and a compatible bracket more general than that of a Lie algebroid.

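In linear algebra, particularly projective geometry, a semilinear map between vector spaces V and W over a field K is a function that is a linear map "up to a twist", hence semi-linear, where "twist" means "field automorphism of K". Explicitly, it is a function T : V → W that is:

- additive with respect to vector addition: T(v + v′) = T(v) + T(v′), and

- semilinear with respect to scalar multiplication: there exists a field automorphism θ of K such that T(λv) = θ(λ)T(v).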

Symmetries in quantum mechanics describe features of spacetime and particles which are unchanged under some transformation, in the context of quantum mechanics, relativistic quantum mechanics and quantum field theory, and with applications in the mathematical formulation of the standard model and condensed matter physics. In general, symmetry in physics, invariance, and conservation laws are fundamentally important constraints for formulating physical theories and models. In practice, they are powerful methods for solving problems and predicting what can happen. While conservation laws do not always give the answer to the problem directly, they form the correct constraints and the first steps to solving a multitude of problems. In application, understanding symmetries can also provide insights into the eigenstates that can be expected. For example, the existence of degenerate states can be inferred from the presence of non-commuting symmetry operators, and non-degenerate states are also eigenvectors of the symmetry operators.

Tau functions are an important ingredient in the modern mathematical theory of integrable systems, and have numerous applications in a variety of other domains. They were originally introduced by Ryogo Hirota in his direct method approach to soliton equations, based on expressing them in an equivalent bilinear form.