In mathematics, specifically in measure theory, a Borel measure on a topological space is a measure that is defined on all open sets. Some authors require additional restrictions on the measure, as described below.

In mathematics, the concept of a measure is a generalization and formalization of geometrical measures (length, area, volume) and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and they can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure are widely used in quantum physics and physics in general.

In mathematical analysis and in probability theory, a σ-algebra on a set X is a nonempty collection Σ of subsets of X closed under complement, countable unions, and countable intersections. The ordered pair (X, Σ) is called a measurable space.
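On a finite set the closure conditions can be checked mechanically, because countable unions reduce to finite ones. The following is a minimal sketch, not a canonical algorithm: the function name `generate_sigma_algebra` and the example sets are assumptions chosen for illustration. It builds the smallest σ-algebra containing a given family of subsets by repeatedly adding complements and pairwise unions until nothing changes.

```python
from itertools import combinations


def generate_sigma_algebra(universe, generators):
    """Smallest sigma-algebra on a finite `universe` containing `generators`.

    On a finite set, closing under complement and pairwise union is enough;
    closure under intersection then follows from De Morgan's laws.
    """
    universe = frozenset(universe)
    family = {frozenset(), universe} | {frozenset(g) for g in generators}
    changed = True
    while changed:
        changed = False
        current = list(family)  # snapshot, so we can add to `family` safely
        for a in current:
            comp = universe - a
            if comp not in family:
                family.add(comp)
                changed = True
        for a, b in combinations(current, 2):
            union = a | b
            if union not in family:
                family.add(union)
                changed = True
    return family


# Illustrative example: X = {1, 2, 3, 4}, generated by {1} and {2}.
X = {1, 2, 3, 4}
sigma = generate_sigma_algebra(X, [{1}, {2}])
```

In this example the atoms are {1}, {2} and {3, 4}, so the resulting family contains {3, 4} but not {3} on its own.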

In mathematics, a measurable space or Borel space is a basic object in measure theory. It consists of a set and a σ-algebra, which defines the subsets that will be measured.

A measure space is a basic object of measure theory, a branch of mathematics that studies generalized notions of volumes. It consists of an underlying set, the subsets of this set that are feasible for measuring (the σ-algebra), and the method that is used for measuring (the measure). One important example of a measure space is a probability space.
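As a small illustration of the three ingredients, a finite probability space can be written down directly. The sketch below assumes a fair six-sided die, takes every subset of the outcomes as measurable, and defines the measure by summing point masses; the names `outcomes`, `weights` and `mu` are hypothetical.

```python
from fractions import Fraction

# Underlying set: the faces of a fair die (an assumed example).
outcomes = frozenset(range(1, 7))
# Point masses; every subset of `outcomes` is treated as measurable.
weights = {x: Fraction(1, 6) for x in outcomes}


def mu(event):
    """Measure of an event: the sum of the point masses it contains."""
    return sum((weights[x] for x in event), Fraction(0))


even = frozenset({2, 4, 6})
low = frozenset({1, 3})  # disjoint from `even`

total = mu(outcomes)  # equals 1 for a probability space
additive = mu(even | low) == mu(even) + mu(low)  # additivity on disjoint sets
```

Exact fractions are used instead of floats so that the additivity check is not affected by rounding.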

In mathematics, mixing is an abstract concept originating from physics: the attempt to describe the irreversible thermodynamic process of mixing in the everyday world, such as mixing paint, mixing drinks, or industrial mixing.

In functional analysis, an abelian von Neumann algebra is a von Neumann algebra of operators on a Hilbert space in which all elements commute.

In measure theory, Carathéodory's extension theorem states that any pre-measure defined on a given ring of subsets R of a given set Ω can be extended to a measure on the σ-algebra generated by R, and this extension is unique if the pre-measure is σ-finite. Consequently, any pre-measure on a ring containing all intervals of real numbers can be extended to the Borel algebra of the set of real numbers. This is an extremely powerful result of measure theory, and leads, for example, to the Lebesgue measure.

In mathematics, a positive (or signed) measure μ defined on a σ-algebra Σ of subsets of a set X is called a finite measure if μ(X) is a finite real number (rather than ∞), and a set A in Σ is of finite measure if μ(A) < ∞. The measure μ is called σ-finite if X is a countable union of measurable sets each with finite measure. A set in a measure space is said to have σ-finite measure if it is a countable union of measurable sets with finite measure. A measure being σ-finite is a weaker condition than being finite, i.e. all finite measures are σ-finite but there are (many) σ-finite measures that are not finite.

In mathematics, and specifically in measure theory, equivalence is a notion of two measures being qualitatively similar. Specifically, the two measures agree on which events have measure zero.
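For non-negative measures on a finite set in which every subset is measurable, a set has measure zero exactly when each of its points carries zero mass, so equivalence can be tested pointwise. The sketch below relies on that assumption; the function names and the three example measures are illustrative only.

```python
def null_points(weights):
    """Points carrying zero mass under a discrete measure given by `weights`."""
    return {x for x, w in weights.items() if w == 0}


def are_equivalent(mu, nu):
    """Two non-negative discrete measures on the same finite set are
    equivalent exactly when they vanish on the same points."""
    return mu.keys() == nu.keys() and null_points(mu) == null_points(nu)


mu = {"a": 0.5, "b": 0.5, "c": 0.0}
nu = {"a": 0.9, "b": 0.1, "c": 0.0}   # different values, same null sets as mu
rho = {"a": 1.0, "b": 0.0, "c": 0.0}  # assigns zero to {"b"}, unlike mu
```

Here `mu` and `nu` are equivalent although they disagree on every non-null set, while `mu` and `rho` are not, since `rho` gives measure zero to a set that `mu` does not.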

In mathematics, a locally finite measure is a measure for which every point of the measure space has a neighbourhood of finite measure.

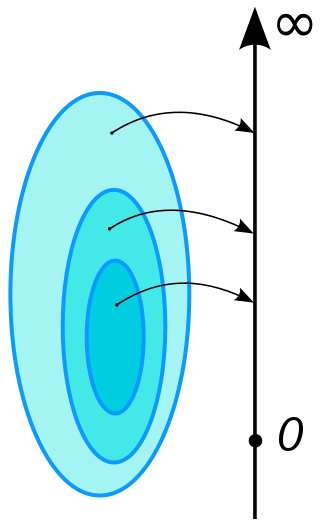

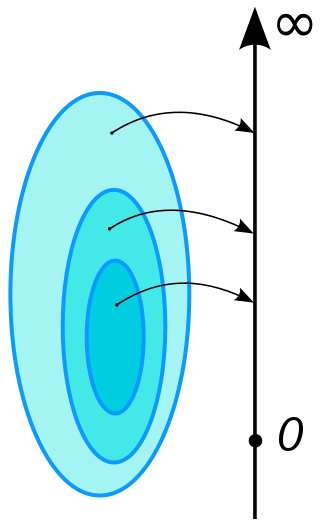

In mathematics, more specifically measure theory, there are various notions of the convergence of measures. For an intuitive general sense of what is meant by convergence of measures, consider a sequence of measures μn on a space, sharing a common collection of measurable sets. Such a sequence might represent an attempt to construct 'better and better' approximations to a desired measure μ that is difficult to obtain directly. The meaning of 'better and better' is subject to all the usual caveats for taking limits; for any error tolerance ε > 0 we require there be N sufficiently large for n ≥ N to ensure the 'difference' between μn and μ is smaller than ε. Various notions of convergence specify precisely what the word 'difference' should mean in that description; these notions are not equivalent to one another, and vary in strength.
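A standard example of two notions disagreeing is the sequence of Dirac measures at 1/n. It converges weakly to the Dirac measure at 0, yet its total variation distance to the limit stays equal to 1 for every n. The sketch below illustrates this numerically with a single bounded continuous test function; that is suggestive rather than a proof, and the helper names are assumptions.

```python
import math


def integrate_dirac(f, point):
    """Integral of f against the Dirac measure at `point` is just f(point)."""
    return f(point)


def tv_distance_dirac(p, q):
    """Total variation distance sup_A |delta_p(A) - delta_q(A)| between two
    Dirac measures: 0 if the points coincide, otherwise 1."""
    return 0.0 if p == q else 1.0


f = math.cos  # one bounded continuous test function
ns = (1, 10, 100, 1000)

# Gap between the integrals of f against delta_{1/n} and delta_0.
weak_gaps = [abs(integrate_dirac(f, 1 / n) - integrate_dirac(f, 0.0)) for n in ns]
# Total variation distance between delta_{1/n} and delta_0.
tv_gaps = [tv_distance_dirac(1 / n, 0.0) for n in ns]
```

The entries of `weak_gaps` shrink towards zero as n grows, while every entry of `tv_gaps` equals 1, which shows that total variation convergence is the stronger requirement.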

In probability theory, a random measure is a measure-valued random element. Random measures are used, for example, in the theory of random processes, where they form many important point processes such as Poisson point processes and Cox processes.

In measure theory, a branch of mathematics that studies generalized notions of volumes, an s-finite measure is a special type of measure. An s-finite measure is more general than a finite measure, but allows one to generalize certain proofs for finite measures.

In mathematics, lifting theory was first introduced by John von Neumann in a pioneering paper from 1931, in which he answered a question raised by Alfréd Haar. The theory was further developed by Dorothy Maharam (1958) and by Alexandra Ionescu Tulcea and Cassius Ionescu Tulcea (1961). Lifting theory was motivated to a large extent by its striking applications. Its development up to 1969 was described in a monograph of the Ionescu Tulceas. Lifting theory has continued to develop since then, yielding new results and applications.

In probability theory, an intensity measure is a measure that is derived from a random measure. The intensity measure is a non-random measure and is defined as the expectation value of the random measure of a set, hence it corresponds to the average volume the random measure assigns to a set. The intensity measure contains important information about the properties of the random measure. A Poisson point process, interpreted as a random measure, is for example uniquely determined by its intensity measure.
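The definition as an expectation can be checked by simulation. The sketch below assumes a homogeneous Poisson point process on an interval of the real line, sampled through exponential gaps between points; the parameter values, the seed and the function names are illustrative choices. For such a process the intensity measure of an interval is the rate times its length, and the Monte Carlo average of the point counts should be close to that value.

```python
import random


def poisson_points(rate, horizon, rng):
    """Sample a homogeneous Poisson point process on [0, horizon]
    by accumulating independent exponential gaps."""
    points = []
    t = rng.expovariate(rate)
    while t <= horizon:
        points.append(t)
        t += rng.expovariate(rate)
    return points


def count_in(points, a, b):
    """Random measure of [a, b): the number of points falling in it."""
    return sum(1 for p in points if a <= p < b)


rng = random.Random(0)      # fixed seed so the run is reproducible
rate, horizon = 3.0, 2.0    # assumed parameters for illustration
a, b = 0.5, 1.0
runs = 20000

# Monte Carlo estimate of the expected number of points in [a, b).
estimate = sum(
    count_in(poisson_points(rate, horizon, rng), a, b) for _ in range(runs)
) / runs
expected = rate * (b - a)   # intensity measure of [a, b)
```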

In probability theory, a transition kernel (or simply kernel) is a function of a point and a measurable set that is measurable in its first argument and a measure in its second. Kernels can, for example, be used to define random measures or stochastic processes. The most important examples of kernels are the Markov kernels.
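On a finite state space a Markov kernel is simply a row-stochastic matrix: each state is mapped to a probability measure on the states. The following sketch uses a hypothetical two-state weather model; the state names, the probabilities and the helpers `is_markov` and `push_forward` are assumptions made for illustration.

```python
# kernel[x][y] is the probability of moving from state x to state y.
kernel = {
    "sun": {"sun": 0.9, "rain": 0.1},
    "rain": {"sun": 0.5, "rain": 0.5},
}


def is_markov(kernel, tol=1e-12):
    """Check that every row is a probability measure on the target space."""
    return all(
        all(p >= 0 for p in row.values()) and abs(sum(row.values()) - 1.0) <= tol
        for row in kernel.values()
    )


def push_forward(mu, kernel):
    """Apply the kernel to a measure: (mu K)(y) = sum over x of mu(x) * K(x, y)."""
    out = {}
    for x, mass in mu.items():
        for y, p in kernel[x].items():
            out[y] = out.get(y, 0.0) + mass * p
    return out


start = {"sun": 1.0, "rain": 0.0}
after_two_steps = push_forward(push_forward(start, kernel), kernel)
```

Starting from a sunny day, two applications of the kernel give probability 0.86 of sun and 0.14 of rain, which is the two-step distribution of the associated Markov chain.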

In the mathematical theory of probability and measure, a sub-probability measure is a measure that is closely related to probability measures. While probability measures always assign the value 1 to the underlying set, sub-probability measures assign a value less than or equal to 1 to the underlying set.

In the theory of stochastic processes, a subdiscipline of probability theory, filtrations are totally ordered collections of sub-σ-algebras that are used to model the information that is available at a given point in time, and they therefore play an important role in the formalization of random (stochastic) processes.
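A small finite example may make the idea of growing information concrete. The sketch below assumes two coin tosses and represents the information available after 0, 1 and 2 tosses as σ-algebras generated by partitions of the sample space; the names `omega`, `sigma_from_partition`, `F0`, `F1` and `F2` are hypothetical.

```python
from itertools import combinations

omega = ["HH", "HT", "TH", "TT"]  # outcomes of two coin tosses


def sigma_from_partition(blocks):
    """All unions of blocks: the sigma-algebra generated by a finite partition."""
    blocks = [frozenset(b) for b in blocks]
    sets = set()
    for r in range(len(blocks) + 1):
        for chosen in combinations(blocks, r):
            sets.add(frozenset().union(*chosen))
    return sets


# Information after 0, 1 and 2 tosses, described by what can be told apart.
F0 = sigma_from_partition([omega])                       # nothing known yet
F1 = sigma_from_partition([["HH", "HT"], ["TH", "TT"]])  # first toss known
F2 = sigma_from_partition([[w] for w in omega])          # both tosses known

filtration = [F0, F1, F2]
# A filtration must be increasing: each sigma-algebra contains the previous one.
is_increasing = all(s <= t for s, t in zip(filtration, filtration[1:]))
```

The event "first toss is heads" belongs to `F1` but not to `F0`, while the single outcome HH only becomes measurable in `F2`.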

The σ-algebra of τ-past is a σ-algebra associated with a stopping time τ in the theory of stochastic processes, a branch of probability theory.
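With the usual definition, the σ-algebra of τ-past consists of the events A for which A ∩ {τ ≤ t} belongs to the σ-algebra at time t, for every t. The sketch below computes it by brute force in an assumed two-coin-toss model, where τ is the first toss showing heads, capped at 2; all names are hypothetical and the approach only makes sense for small finite examples.

```python
from itertools import combinations

omega = ["HH", "HT", "TH", "TT"]  # outcomes of two coin tosses


def sigma_from_partition(blocks):
    """All unions of blocks: the sigma-algebra generated by a finite partition."""
    blocks = [frozenset(b) for b in blocks]
    return {
        frozenset().union(*chosen)
        for r in range(len(blocks) + 1)
        for chosen in combinations(blocks, r)
    }


# Filtration indexed by time: information after 0, 1 and 2 tosses.
filtration = {
    0: sigma_from_partition([omega]),
    1: sigma_from_partition([["HH", "HT"], ["TH", "TT"]]),
    2: sigma_from_partition([[w] for w in omega]),
}

# tau = time of the first head, capped at 2 (assumed example).
tau = {"HH": 1, "HT": 1, "TH": 2, "TT": 2}


def before(t):
    """The event {tau <= t}."""
    return frozenset(w for w in omega if tau[w] <= t)


# tau is a stopping time if {tau <= t} is in the sigma-algebra at time t.
is_stopping_time = all(before(t) in F_t for t, F_t in filtration.items())

# Events A in the final sigma-algebra with A ∩ {tau <= t} in F_t for all t.
final = filtration[max(filtration)]
F_tau = {
    A for A in final
    if all((A & before(t)) in F_t for t, F_t in filtration.items())
}
```

In this model the result has the atoms {HH, HT}, {TH} and {TT}: when the first toss is heads the process stops at time 1, so the outcome of the second toss is not part of the information available at τ.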