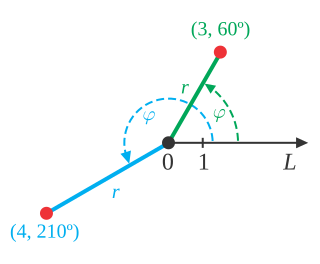

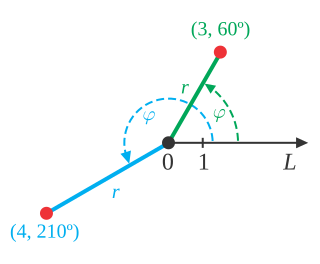

In mathematics, the polar coordinate system is a two-dimensional coordinate system in which each point on a plane is determined by a distance from a reference point and an angle from a reference direction. The reference point is called the pole, and the ray from the pole in the reference direction is the polar axis. The distance from the pole is called the radial coordinate, radial distance or simply radius, and the angle is called the angular coordinate, polar angle, or azimuth. Angles in polar notation are generally expressed in either degrees or radians.
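As a small illustration of the definition above, the following sketch converts between polar and Cartesian coordinates. It assumes the pole sits at the Cartesian origin, the polar axis lies along the positive x-axis, and angles are in radians; the function names are illustrative only.

```python
import math

def polar_to_cartesian(r, theta):
    """Map (radial coordinate, polar angle in radians) to (x, y)."""
    return r * math.cos(theta), r * math.sin(theta)

def cartesian_to_polar(x, y):
    """Map (x, y) to (r, theta), with theta in the interval (-pi, pi]."""
    return math.hypot(x, y), math.atan2(y, x)

# A point at distance 2 from the pole, 90 degrees from the polar axis.
x, y = polar_to_cartesian(2.0, math.radians(90.0))
r, theta = cartesian_to_polar(x, y)
```

Using `math.atan2` rather than `math.atan(y / x)` keeps the angle in the correct quadrant and avoids division by zero when `x` is zero.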

The Navier–Stokes equations are partial differential equations which describe the motion of viscous fluid substances. They were named after French engineer and physicist Claude-Louis Navier and the Irish physicist and mathematician George Gabriel Stokes. They were developed over several decades of progressively building the theories, from 1822 (Navier) to 1842–1850 (Stokes).

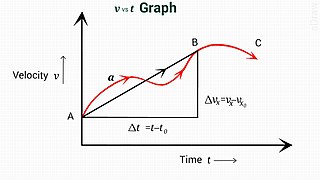

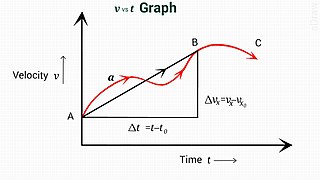

In physics, equations of motion are equations that describe the behavior of a physical system in terms of its motion as a function of time. More specifically, the equations of motion describe the behavior of a physical system as a set of mathematical functions in terms of dynamic variables. These variables are usually spatial coordinates and time, but may include momentum components. The most general choice is generalized coordinates, which can be any convenient variables characteristic of the physical system. The functions are defined in a Euclidean space in classical mechanics, but are replaced by curved spaces in relativity. If the dynamics of a system is known, the equations of motion are the solutions of the differential equations describing that dynamics.

A tautochrone curve or isochrone curve is the curve for which the time taken by an object sliding without friction in uniform gravity to its lowest point is independent of its starting point on the curve. The curve is a cycloid, and the time is equal to π times the square root of the radius of the circle that generates the cycloid divided by the acceleration of gravity. The tautochrone curve is related to the brachistochrone curve, which is also a cycloid.
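The independence of the descent time from the starting point can be checked numerically. The sketch below assumes an inverted cycloid parametrized by x = r(θ − sin θ) and depth y = r(1 − cos θ), so that the lowest point is at θ = π. Energy conservation then gives the descent time from θ₀ as √(r/g) times the integral of √((1 − cos θ)/(cos θ₀ − cos θ)) over [θ₀, π]. The function name, the substitution θ = θ₀ + (π − θ₀)s² (used to tame the integrable singularity at θ₀), and the midpoint rule are choices made for this illustration.

```python
import math

def descent_time(theta0, r=1.0, g=9.81, n=20000):
    """Time to slide from parameter theta0 to the bottom (theta = pi) of a cycloid.

    Uses the midpoint rule in the variable s, where theta = theta0 + span * s**2,
    so that the 1/sqrt singularity at theta0 is removed.
    """
    span = math.pi - theta0
    total = 0.0
    for i in range(n):
        s = (i + 0.5) / n
        theta = theta0 + span * s * s
        ratio = (1.0 - math.cos(theta)) / (math.cos(theta0) - math.cos(theta))
        # d(theta) = 2 * span * s * ds, with ds = 1 / n
        total += math.sqrt(ratio) * 2.0 * span * s / n
    return math.sqrt(r / g) * total

expected = math.pi * math.sqrt(1.0 / 9.81)
times = [descent_time(t0) for t0 in (0.5, 1.5, 2.5)]
```

Each entry of `times` should agree with `expected` up to the quadrature error, whatever starting parameter is chosen.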

The primitive equations are a set of nonlinear partial differential equations that are used to approximate global atmospheric flow and are used in most atmospheric models. They consist of three main sets of balance equations:

- A continuity equation: Representing the conservation of mass.

- Conservation of momentum: Consisting of a form of the Navier–Stokes equations that describes hydrodynamical flow on the surface of a sphere under the assumption that vertical motion is much smaller than horizontal motion (hydrostasis) and that the fluid layer depth is small compared to the radius of the sphere.

- A thermal energy equation: Relating the overall temperature of the system to heat sources and sinks.

In probability theory, the Borel–Kolmogorov paradox is a paradox relating to conditional probability with respect to an event of probability zero. It is named after Émile Borel and Andrey Kolmogorov.

In physics, the Hamilton–Jacobi equation, named after William Rowan Hamilton and Carl Gustav Jacob Jacobi, is an alternative formulation of classical mechanics, equivalent to other formulations such as Newton's laws of motion, Lagrangian mechanics and Hamiltonian mechanics.

In mathematics, a Killing vector field, named after Wilhelm Killing, is a vector field on a Riemannian manifold that preserves the metric. Killing fields are the infinitesimal generators of isometries; that is, flows generated by Killing fields are continuous isometries of the manifold. More simply, the flow generates a symmetry, in the sense that moving each point of an object the same distance in the direction of the Killing vector will not distort distances on the object.

In rotordynamics, the rigid rotor is a mechanical model of rotating systems. An arbitrary rigid rotor is a 3-dimensional rigid object, such as a top. To orient such an object in space requires three angles, known as Euler angles. A special rigid rotor is the linear rotor, which requires only two angles to describe its orientation; a diatomic molecule is an example. More general molecules are 3-dimensional, such as water, ammonia, or methane.

In physics, spherically symmetric spacetimes are commonly used to obtain analytic and numerical solutions to Einstein's field equations in the presence of radially moving matter or energy. Because spherically symmetric spacetimes are by definition irrotational, they are not realistic models of black holes in nature. However, their metrics are considerably simpler than those of rotating spacetimes, making them much easier to analyze.

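In calculus, the Leibniz integral rule for differentiation under the integral sign, named after Gottfried Wilhelm Leibniz, states that for an integral of the form

$$\int_{a(x)}^{b(x)} f(x,t)\,dt,$$

where $-\infty < a(x), b(x) < \infty$ and the integrands are functions dependent on $x$, the derivative of this integral is expressible as

$$\frac{d}{dx}\left(\int_{a(x)}^{b(x)} f(x,t)\,dt\right) = f\big(x,b(x)\big)\,\frac{d}{dx}b(x) - f\big(x,a(x)\big)\,\frac{d}{dx}a(x) + \int_{a(x)}^{b(x)} \frac{\partial}{\partial x} f(x,t)\,dt,$$

where the partial derivative $\tfrac{\partial}{\partial x}$ indicates that inside the integral, only the variation of $f(x,t)$ with $x$ is considered in taking the derivative.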
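A numerical sanity check of the rule is sketched below. The integrand f(x, t) = sin(xt) and the limits a(x) = x and b(x) = x² are arbitrary choices made for this illustration, as are all function names. The sketch compares two boundary terms plus the integral of ∂f/∂x against a central finite difference of the integral itself.

```python
import math

def simpson(func, lo, hi, n=2000):
    """Composite Simpson rule with n (even) subintervals."""
    h = (hi - lo) / n
    total = func(lo) + func(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * func(lo + i * h)
    return total * h / 3.0

# Illustrative choices (assumptions, not taken from the text).
def f(x, t):      return math.sin(x * t)
def df_dx(x, t):  return t * math.cos(x * t)   # partial derivative of f in x
def a(x):         return x
def da_dx(x):     return 1.0
def b(x):         return x * x
def db_dx(x):     return 2.0 * x

def integral(x):
    """F(x): the integral of f(x, t) dt from a(x) to b(x)."""
    return simpson(lambda t: f(x, t), a(x), b(x))

def leibniz_derivative(x):
    """dF/dx assembled from the boundary terms and the interior integral."""
    boundary = f(x, b(x)) * db_dx(x) - f(x, a(x)) * da_dx(x)
    interior = simpson(lambda t: df_dx(x, t), a(x), b(x))
    return boundary + interior

def finite_difference(x, h=1e-5):
    """Central-difference approximation of dF/dx."""
    return (integral(x + h) - integral(x - h)) / (2.0 * h)

gap = abs(leibniz_derivative(1.5) - finite_difference(1.5))
```

The value of `gap` should be small, limited only by the finite-difference and quadrature errors.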

In the mathematical theory of bifurcations, a Hopf bifurcation is a critical point where, as a parameter changes, a system's stability switches and a periodic solution arises. More accurately, it is a local bifurcation in which a fixed point of a dynamical system loses stability, as a pair of complex conjugate eigenvalues of the linearization around the fixed point crosses the imaginary axis of the complex plane when a parameter crosses a threshold value. Under reasonably generic assumptions about the dynamical system, a small-amplitude limit cycle branches from the fixed point as the parameter changes.
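The behavior can be seen in a minimal sketch that integrates the supercritical Hopf normal form, x' = μx − ωy − (x² + y²)x and y' = ωx + μy − (x² + y²)y. This system is chosen here as an assumption, not taken from the text. Its linearization at the origin has eigenvalues μ ± iω, which cross the imaginary axis at μ = 0. For μ < 0 trajectories spiral into the fixed point, while for μ > 0 they settle onto a limit cycle of radius √μ. The function names, the step size, and the fourth-order Runge–Kutta integrator are illustrative choices.

```python
import math

def hopf_rhs(x, y, mu, omega=1.0):
    """Right-hand side of the supercritical Hopf normal form."""
    r2 = x * x + y * y
    return (mu * x - omega * y - r2 * x,
            omega * x + mu * y - r2 * y)

def final_radius(mu, x0=0.1, y0=0.0, dt=0.01, steps=6000):
    """Integrate with classical RK4 and return the distance from the origin."""
    x, y = x0, y0
    for _ in range(steps):
        k1x, k1y = hopf_rhs(x, y, mu)
        k2x, k2y = hopf_rhs(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y, mu)
        k3x, k3y = hopf_rhs(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y, mu)
        k4x, k4y = hopf_rhs(x + dt * k3x, y + dt * k3y, mu)
        x += dt * (k1x + 2.0 * k2x + 2.0 * k3x + k4x) / 6.0
        y += dt * (k1y + 2.0 * k2y + 2.0 * k3y + k4y) / 6.0
    return math.hypot(x, y)

radius_below = final_radius(-0.25)  # decays toward the fixed point
radius_above = final_radius(0.25)   # approaches sqrt(0.25) = 0.5
```

Because the limit-cycle radius grows like √μ, the cycle has small amplitude just past the threshold.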

A theoretical motivation for general relativity, including the motivation for the geodesic equation and the Einstein field equation, can be obtained from special relativity by examining the dynamics of particles in circular orbits about the Earth. A key advantage of examining circular orbits is that the solution of the Einstein field equation can be known a priori. This provides a means to inform and verify the formalism.

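In classical mechanics, a Liouville dynamical system is an exactly solvable dynamical system in which the kinetic energy $T$ and potential energy $V$ can be expressed in terms of the $s$ generalized coordinates $q$ as follows:

$$T = \frac{1}{2}\left\{u_1(q_1) + u_2(q_2) + \cdots + u_s(q_s)\right\}\left\{v_1(q_1)\,\dot{q}_1^2 + v_2(q_2)\,\dot{q}_2^2 + \cdots + v_s(q_s)\,\dot{q}_s^2\right\}$$

$$V = \frac{w_1(q_1) + w_2(q_2) + \cdots + w_s(q_s)}{u_1(q_1) + u_2(q_2) + \cdots + u_s(q_s)}$$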

Photon transport in biological tissue can be equivalently modeled numerically with Monte Carlo simulations or analytically by the radiative transfer equation (RTE). However, the RTE is difficult to solve without introducing approximations. A common approximation summarized here is the diffusion approximation. Overall, solutions to the diffusion equation for photon transport are more computationally efficient, but less accurate than Monte Carlo simulations.

In mathematics, vector spherical harmonics (VSH) are an extension of the scalar spherical harmonics for use with vector fields. The components of the VSH are complex-valued functions expressed in the spherical coordinate basis vectors.

In mathematics, the spectral theory of ordinary differential equations is the part of spectral theory concerned with the determination of the spectrum and eigenfunction expansion associated with a linear ordinary differential equation. In his dissertation, Hermann Weyl generalized the classical Sturm–Liouville theory on a finite closed interval to second order differential operators with singularities at the endpoints of the interval, possibly semi-infinite or infinite. Unlike the classical case, the spectrum may no longer consist of just a countable set of eigenvalues, but may also contain a continuous part. In this case the eigenfunction expansion involves an integral over the continuous part with respect to a spectral measure, given by the Titchmarsh–Kodaira formula. The theory was put in its final simplified form for singular differential equations of even degree by Kodaira and others, using von Neumann's spectral theorem. It has had important applications in quantum mechanics, operator theory and harmonic analysis on semisimple Lie groups.

In fluid dynamics, the Oseen equations describe the flow of a viscous and incompressible fluid at small Reynolds numbers, as formulated by Carl Wilhelm Oseen in 1910. Oseen flow is an improved description of these flows, as compared to Stokes flow, with the (partial) inclusion of convective acceleration.

In the theory of Lorentzian manifolds, spherically symmetric spacetimes admit a family of nested round spheres. In such a spacetime, a particularly important kind of coordinate chart is the Schwarzschild chart, a kind of polar spherical coordinate chart on a static and spherically symmetric spacetime, which is adapted to these nested round spheres. The defining characteristic of the Schwarzschild chart is that the radial coordinate possesses a natural geometric interpretation in terms of the surface area and Gaussian curvature of each sphere. However, radial distances and angles are not accurately represented.

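Calculations in the Newman–Penrose (NP) formalism of general relativity normally begin with the construction of a complex null tetrad $\{l^a, n^a, m^a, \bar{m}^a\}$, where $\{l^a, n^a\}$ is a pair of real null vectors and $\{m^a, \bar{m}^a\}$ is a pair of complex null vectors. These tetrad vectors respect the following normalization and metric conditions, assuming the spacetime signature $(-,+,+,+)$:

$$l_a l^a = n_a n^a = m_a m^a = \bar{m}_a \bar{m}^a = 0,$$

$$l_a m^a = l_a \bar{m}^a = n_a m^a = n_a \bar{m}^a = 0,$$

$$l_a n^a = -1, \qquad m_a \bar{m}^a = 1,$$

$$g_{ab} = -l_a n_b - n_a l_b + m_a \bar{m}_b + \bar{m}_a m_b.$$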
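As a concrete check, the sketch below builds one possible null tetrad in flat Minkowski spacetime and tests it numerically. It assumes the signature (−,+,+,+) and the conventional conditions l·n = −1 and m·m̄ = 1, with all other inner products vanishing. The particular vectors, the coordinate ordering (t, x, y, z), and the helper names are assumptions made for this illustration.

```python
import math

ETA = [-1.0, 1.0, 1.0, 1.0]  # diagonal of the Minkowski metric, signature (-,+,+,+)

def dot(u, v):
    """Inner product g_ab u^a v^b for the diagonal metric ETA (no conjugation)."""
    return sum(g * p * q for g, p, q in zip(ETA, u, v))

def lower(v):
    """Lower the index of a vector: v_a = g_ab v^b."""
    return [g * c for g, c in zip(ETA, v)]

s = 1.0 / math.sqrt(2.0)
l = [s, 0.0, 0.0, s]                      # real null vector
n = [s, 0.0, 0.0, -s]                     # real null vector
m = [0.0, s, 1j * s, 0.0]                 # complex null vector
mbar = [complex(c).conjugate() for c in m]

def reconstructed_metric():
    """g_ab = -l_a n_b - n_a l_b + m_a mbar_b + mbar_a m_b."""
    l_, n_, m_, mb_ = lower(l), lower(n), lower(m), lower(mbar)
    return [[-l_[a] * n_[b] - n_[a] * l_[b] + m_[a] * mb_[b] + mb_[a] * m_[b]
             for b in range(4)] for a in range(4)]

g = reconstructed_metric()
```

Under these assumptions `dot(l, n)` evaluates to −1, `dot(m, mbar)` to 1, and `g` reproduces the diagonal matrix with entries −1, 1, 1, 1 up to rounding.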