Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both hardware and software. Computing has scientific, engineering, mathematical, technological, and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology, and software engineering.

Computer science is the study of computation, information, and automation. Computer science spans theoretical disciplines to applied disciplines.

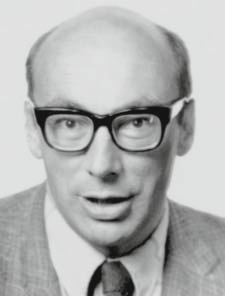

Niklaus Emil Wirth was a Swiss computer scientist. He designed several programming languages, including Pascal, and pioneered several classic topics in software engineering. In 1984, he won the Turing Award, generally recognized as the highest distinction in computer science, "for developing a sequence of innovative computer languages".

In computer science, an abstract machine is a theoretical model that allows for a detailed and precise analysis of how a computer system functions. It is similar to a mathematical function in that it receives inputs and produces outputs based on predefined rules. Abstract machines vary from literal machines in that they are expected to perform correctly and independently of hardware. Abstract machines are "machines" because they allow step-by-step execution of programmes; they are "abstract" because they ignore many aspects of actual (hardware) machines. A typical abstract machine consists of a definition in terms of input, output, and the set of allowable operations used to turn the former into the latter. They can be used for purely theoretical reasons as well as models for real-world computer systems. In the theory of computation, abstract machines are often used in thought experiments regarding computability or to analyse the complexity of algorithms. This use of abstract machines is fundamental to the field of computational complexity theory, such as finite state machines, Mealy machines, push-down automata, and Turing machines.

Theoretical computer science is a subfield of computer science and mathematics that focuses on the abstract and mathematical foundations of computation, such as the theory of computation, formal language theory, the lambda calculus and type theory.

Algorithmic learning theory is a mathematical framework for analyzing machine learning problems and algorithms. Synonyms include formal learning theory and algorithmic inductive inference. Algorithmic learning theory is different from statistical learning theory in that it does not make use of statistical assumptions and analysis. Both algorithmic and statistical learning theory are concerned with machine learning and can thus be viewed as branches of computational learning theory.

In computer science, computational learning theory is a subfield of artificial intelligence devoted to studying the design and analysis of machine learning algorithms.

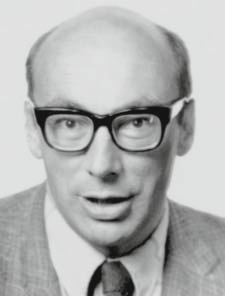

Peter John Landin was a British computer scientist. He was one of the first to realise that the lambda calculus could be used to model a programming language, an insight that is essential to the development of both functional programming and denotational semantics.

ACM SIGACT or SIGACT is the Association for Computing Machinery Special Interest Group on Algorithms and Computation Theory, whose purpose is support of research in theoretical computer science. It was founded in 1968 by Patrick C. Fischer.

Computational thinking (CT) refers to the thought processes involved in formulating problems so their solutions can be represented as computational steps and algorithms. In education, CT is a set of problem-solving methods that involve expressing problems and their solutions in ways that a computer could also execute. It involves automation of processes, but also using computing to explore, analyze, and understand processes.

In computing, a compiler is a computer program that transforms source code written in a programming language or computer language, into another computer language. The most common reason for transforming source code is to create an executable program.

Informatics is the study of computational systems. According to the ACM Europe Council and Informatics Europe, informatics is synonymous with computer science and computing as a profession, in which the central notion is transformation of information. In some cases, the term "informatics" may also be used with different meanings, e.g. in the context of social computing, or in context of library science.

Dana Angluin is a professor emeritus of computer science at Yale University. She is known for foundational work in computational learning theory and distributed computing.

Data-intensive computing is a class of parallel computing applications which use a data parallel approach to process large volumes of data typically terabytes or petabytes in size and typically referred to as big data. Computing applications that devote most of their execution time to computational requirements are deemed compute-intensive, whereas applications are deemed data-intensive require large volumes of data and devote most of their processing time to I/O and manipulation of data.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.