Computer animation is the process used for digitally generating animations. The more general term computer-generated imagery (CGI) encompasses both static scenes and dynamic images, while computer animation only refers to moving images. Modern computer animation usually uses 3D computer graphics. The animation's target is sometimes the computer itself, while other times it is film.

Autodesk 3ds Max, formerly 3D Studio and 3D Studio Max, is a professional 3D computer graphics program for making 3D animations, models, games and images. It is developed and produced by Autodesk Media and Entertainment. It has modeling capabilities and a flexible plugin architecture and must be used on the Microsoft Windows platform. It is frequently used by video game developers, many TV commercial studios, and architectural visualization studios. It is also used for movie effects and movie pre-visualization. 3ds Max features shaders, dynamic simulation, particle systems, radiosity, normal map creation and rendering, global illumination, a customizable user interface, and its own scripting language.

Poser is a figure posing and rendering 3D computer graphics program distributed by Bondware. Poser is optimized for the 3D modeling of human figures. It has become popular because it enables beginners to produce basic animations and digital images, and because third-party digital 3D models for it are widely available.

Motion capture is the process of recording the movement of objects or people. It is used in military, entertainment, sports, medical applications, and for validation of computer vision and robots. In filmmaking and video game development, it refers to recording actions of human actors and using that information to animate digital character models in 2D or 3D computer animation. When it includes face and fingers or captures subtle expressions, it is often referred to as performance capture. In many fields, motion capture is sometimes called motion tracking, but in filmmaking and games, motion tracking usually refers more to match moving.

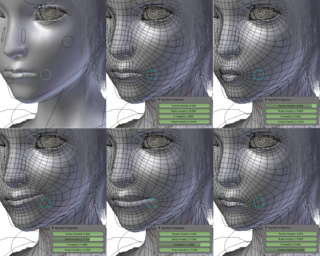

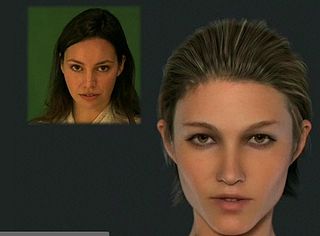

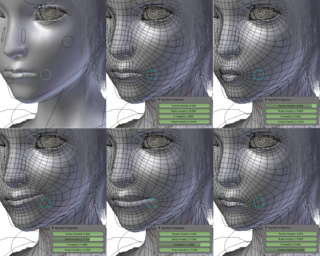

Skeletal animation or rigging is a technique in computer animation in which a character is represented in two parts: a surface representation used to draw the character and a hierarchical set of interconnected parts, a virtual armature used to animate the mesh. While this technique is often used to animate humans and other organic figures, it only serves to make the animation process more intuitive, and the same technique can be used to control the deformation of any object—such as a door, a spoon, a building, or a galaxy. When the animated object is more general than, for example, a humanoid character, the set of "bones" may not be hierarchical or interconnected, but simply represent a higher-level description of the motion of the part of mesh it is influencing.
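The two-part representation described above can be illustrated with a minimal 2D sketch. It is not taken from any particular animation package: the `Bone` class, `world_transforms`, and `skin_vertex` are hypothetical names, and each vertex is rigidly attached to a single bone for simplicity. Production systems typically blend the influence of several bones per vertex.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Bone:
    """One element of the armature (hypothetical structure for illustration)."""
    name: str
    parent: Optional[int]  # index of the parent bone, or None for the root
    length: float          # bone length along its own local x axis
    angle: float           # rotation relative to the parent, in radians


def world_transforms(bones: List[Bone]) -> List[Tuple[float, float, float]]:
    """Return (origin_x, origin_y, world_angle) for each bone.

    Assumes parents appear before their children in the list.
    """
    result: List[Tuple[float, float, float]] = []
    for bone in bones:
        if bone.parent is None:
            origin_x, origin_y, parent_angle = 0.0, 0.0, 0.0
        else:
            px, py, parent_angle = result[bone.parent]
            parent_length = bones[bone.parent].length
            # A child bone starts at the tip of its parent.
            origin_x = px + parent_length * math.cos(parent_angle)
            origin_y = py + parent_length * math.sin(parent_angle)
        result.append((origin_x, origin_y, parent_angle + bone.angle))
    return result


def skin_vertex(local: Tuple[float, float], bone_index: int,
                transforms: List[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Map a vertex given in a bone's local frame to world space (rigid binding)."""
    origin_x, origin_y, angle = transforms[bone_index]
    x, y = local
    return (origin_x + x * math.cos(angle) - y * math.sin(angle),
            origin_y + x * math.sin(angle) + y * math.cos(angle))


# Usage: a two-bone "arm" whose forearm is bent 90 degrees at the elbow.
arm = [
    Bone("upper_arm", parent=None, length=2.0, angle=0.0),
    Bone("forearm", parent=0, length=1.0, angle=math.pi / 2),
]
transforms = world_transforms(arm)
wrist = skin_vertex((1.0, 0.0), 1, transforms)  # mesh vertex at the forearm tip
```

Changing only the `angle` of `upper_arm` moves the forearm and every vertex bound to it, which is the sense in which the armature gives a higher-level handle on the mesh.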

Real-time computer graphics or real-time rendering is the sub-field of computer graphics focused on producing and analyzing images in real time. The term can refer to anything from rendering an application's graphical user interface (GUI) to real-time image analysis, but is most often used in reference to interactive 3D computer graphics, typically using a graphics processing unit (GPU). One example of this concept is a video game that rapidly renders changing 3D environments to produce an illusion of motion.

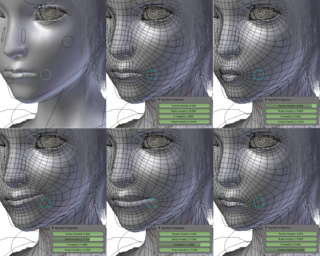

Facial motion capture is the process of electronically converting the movements of a person's face into a digital database using cameras or laser scanners. This database may then be used to produce computer graphics (CG), computer animation for movies, games, or real-time avatars. Because the motion of CG characters is derived from the movements of real people, it results in a more realistic and nuanced computer character animation than if the animation were created manually.

Digital puppetry is the manipulation and performance of digitally animated 2D or 3D figures and objects in a virtual environment that are rendered in real-time by computers. It is most commonly used in filmmaking and television production but has also been used in interactive theme park attractions and live theatre.

Human image synthesis is technology that can be applied to make believable and even photorealistic renditions of human likenesses, moving or still. It has effectively existed since the early 2000s. Many films using computer-generated imagery have featured synthetic images of human-like characters digitally composited onto real or other simulated film material. Towards the end of the 2010s, deep learning artificial intelligence was applied to synthesize images and video that look like humans without the need for human assistance once the training phase has been completed, whereas the old-school 7D route required massive amounts of human work.

Interactive skeleton-driven simulation is a scientific computer simulation technique used to approximate realistic physical deformations of dynamic bodies in real-time. It involves using elastic dynamics and mathematical optimizations to decide the body-shapes during motion and interaction with forces. It has various applications within realistic simulations for medicine, 3D computer animation and virtual reality.

Image Metrics is a 3D facial animation and Virtual Try-on company headquartered in El Segundo, with offices in Las Vegas, and research facilities in Manchester. Image Metrics are the makers of the Live Driver and Portable You SDKs for software developers and are providers of facial animation software and services to the visual effects industries.

Computer graphics deals with generating images and art with the aid of computers. Today, computer graphics is a core technology in digital photography, film, video games, digital art, cell phone and computer displays, and many specialized applications. A great deal of specialized hardware and software has been developed, with the displays of most devices being driven by computer graphics hardware. It is a vast and recently developed area of computer science. The phrase was coined in 1960 by computer graphics researchers Verne Hudson and William Fetter of Boeing. It is often abbreviated as CG or, typically in the context of film, as computer-generated imagery (CGI). The non-artistic aspects of computer graphics are the subject of computer science research.

Messiah is a 3D animation and rendering application developed by pmG Worldwide. It runs on the Win32 and Win64 platforms. It is marketed to run on Mac OS X and Linux via Wine. Messiah's fourth version, messiah:studio, was released in April 2009, and version 5.5b, as messiah:animate, was released in November 2006. messiahStudio6 was released in April 2013. Messiah appears to have been unmaintained since 2013.

iClone is a real-time 3D animation and rendering software program. Real-time playback is enabled by using a 3D videogame engine for instant on-screen rendering.

The history of computer animation began as early as the 1940s and 1950s, when people began to experiment with computer graphics, most notably John Whitney. It was only in the early 1960s, when digital computers had become widely established, that new avenues for innovative computer graphics blossomed. Initially, uses were mainly for scientific, engineering and other research purposes, but artistic experimentation began to make its appearance by the mid-1960s, most notably by Dr Thomas Calvert. By the mid-1970s, many such efforts were beginning to enter public media. Much computer graphics at this time involved 2-dimensional imagery, though increasingly, as computer power improved, efforts to achieve 3-dimensional realism became the emphasis. By the late 1980s, photo-realistic 3D was beginning to appear in films, and by the mid-1990s it had developed to the point where 3D animation could be used for entire feature film production.

Morph target animation, per-vertex animation, shape interpolation, shape keys, or blend shapes is a method of 3D computer animation used together with techniques such as skeletal animation. In a morph target animation, a "deformed" version of a mesh is stored as a series of vertex positions. In each key frame of an animation, the vertices are then interpolated between these stored positions.
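The interpolation step can be sketched in a few lines. This is a minimal illustration assuming plain linear interpolation between a base mesh and a single stored target; the function name `blend_morph_target` and the `weight` parameter are hypothetical and not drawn from any specific tool.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def blend_morph_target(base: List[Vertex], target: List[Vertex],
                       weight: float) -> List[Vertex]:
    """Linearly interpolate each vertex from `base` toward `target`.

    weight = 0.0 returns the base shape, weight = 1.0 the stored target.
    Both meshes must list the same vertices in the same order.
    """
    if len(base) != len(target):
        raise ValueError("base and target must have the same vertex count")
    blended: List[Vertex] = []
    for (bx, by, bz), (tx, ty, tz) in zip(base, target):
        blended.append((bx + weight * (tx - bx),
                        by + weight * (ty - by),
                        bz + weight * (tz - bz)))
    return blended


# Usage: an edge whose "deformed" version is raised by 2 units along y.
base_mesh = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
raised_mesh = [(0.0, 2.0, 0.0), (1.0, 2.0, 0.0)]
halfway = blend_morph_target(base_mesh, raised_mesh, 0.5)
```

An animation would then store only the `weight` value per key frame rather than a full copy of the mesh.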

Mixamo is a 3D computer graphics technology company. Based in San Francisco, the company develops and sells web-based services for 3D character animation. Mixamo's technologies use machine learning methods to automate the steps of the character animation process, from 3D modeling to rigging and 3D animation.

Faceware Technologies is a facial animation and motion capture development company in America. The company was established under Image Metrics and became its own company at the beginning of 2012.

A virtual human is a software representation of a fictional character or human being. Virtual humans have been created as tools and artificial companions in simulation, video games, film production, human factors, ergonomic and usability studies in various industries, the clothing industry, telecommunications (avatars), medicine, and other fields. These applications require domain-dependent simulation fidelity. A medical application might require an exact simulation of specific internal organs; the film industry requires the highest aesthetic standards, natural movements, and facial expressions; ergonomic studies require faithful body proportions for a particular population segment and realistic locomotion with constraints.