In algorithmic information theory, the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963 and is a generalization of classical information theory.

The P versus NP problem is a major unsolved problem in theoretical computer science. In informal terms, it asks whether every problem whose solution can be quickly verified can also be quickly solved.

In theoretical computer science and mathematics, computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computation problem is solvable by mechanical application of mathematical steps, such as an algorithm.

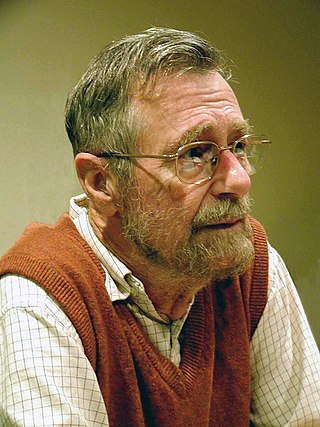

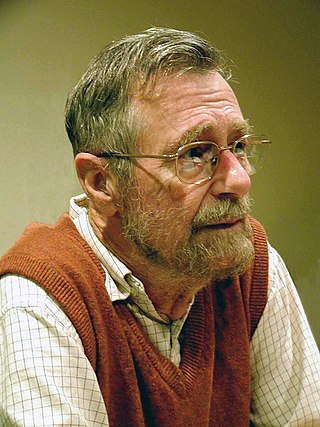

Edsger Wybe Dijkstra was a Dutch computer scientist, programmer, software engineer, systems scientist, and science essayist. He received the 1972 Turing Award for fundamental contributions to developing structured programming languages, and was the Schlumberger Centennial Chair of Computer Sciences at The University of Texas at Austin from 1984 until 2000.

Stephen Arthur Cook is an American-Canadian computer scientist and mathematician who has made significant contributions to the fields of complexity theory and proof complexity. He is a university professor at the University of Toronto, Department of Computer Science and Department of Mathematics.

Sir Charles Antony Richard Hoare is a British computer scientist who has made foundational contributions to programming languages, algorithms, operating systems, formal verification, and concurrent computing. His work earned him the Turing Award, usually regarded as the highest distinction in computer science, in 1980.

In computer science, the clique problem is the computational problem of finding cliques in a graph. It has several different formulations depending on which cliques, and what information about the cliques, should be found. Common formulations of the clique problem include finding a maximum clique, finding a maximum weight clique in a weighted graph, listing all maximal cliques, and solving the decision problem of testing whether a graph contains a clique larger than a given size.

In theoretical computer science, an algorithm is correct with respect to a specification if it behaves as specified. Best explored is functional correctness, which refers to the input-output behavior of the algorithm.

In programming language theory, semantics is the rigorous mathematical study of the meaning of programming languages. Semantics assigns computational meaning to valid strings in a programming language syntax.

The Gödel Prize is an annual prize for outstanding papers in the area of theoretical computer science, given jointly by the European Association for Theoretical Computer Science (EATCS) and the Association for Computing Machinery Special Interest Group on Algorithms and Computational Theory. The award is named in honor of Kurt Gödel. Gödel's connection to theoretical computer science is that he was the first to mention the "P versus NP" question, in a 1956 letter to John von Neumann in which Gödel asked whether a certain NP-complete problem could be solved in quadratic or linear time.

In computational complexity theory, the Cook–Levin theorem, also known as Cook's theorem, states that the Boolean satisfiability problem is NP-complete. That is, it is in NP, and any problem in NP can be reduced in polynomial time by a deterministic Turing machine to the Boolean satisfiability problem.

The graph isomorphism problem is the computational problem of determining whether two finite graphs are isomorphic.

Algorithmic information theory (AIT) is a branch of theoretical computer science that concerns itself with the relationship between computation and information of computably generated objects, such as strings or any other data structure. In other words, it is shown within algorithmic information theory that computational incompressibility "mimics" the relations or inequalities found in information theory. According to Gregory Chaitin, it is "the result of putting Shannon's information theory and Turing's computability theory into a cocktail shaker and shaking vigorously."

In computational complexity theory, the PCP theorem states that every decision problem in the NP complexity class has probabilistically checkable proofs of constant query complexity and logarithmic randomness complexity.

Aleksandr Aleksandrovich Razborov, sometimes known as Sasha Razborov, is a Soviet and Russian mathematician and computational theorist. He is Andrew McLeish Distinguished Service Professor at the University of Chicago.

In computational complexity, problems that are in the complexity class NP but are neither in the class P nor NP-complete are called NP-intermediate, and the class of such problems is called NPI. Ladner's theorem, shown in 1975 by Richard E. Ladner, is a result asserting that, if P ≠ NP, then NPI is not empty; that is, NP contains problems that are neither in P nor NP-complete. Since it is also true that if NPI problems exist, then P ≠ NP, it follows that P = NP if and only if NPI is empty.

Larry Joseph Stockmeyer was an American computer scientist. He was one of the pioneers in the field of computational complexity theory, and he also worked in the field of distributed computing. He died of pancreatic cancer.

The Annual ACM Symposium on Theory of Computing (STOC) is an academic conference in the field of theoretical computer science. STOC has been organized annually since 1969, typically in May or June; the conference is sponsored by the Association for Computing Machinery special interest group SIGACT. Acceptance rate of STOC, averaged from 1970 to 2012, is 31%, with the rate of 29% in 2012.

In computational complexity theory, a problem is NP-complete when:

- it is a problem for which the correctness of each solution can be verified quickly and a brute-force search algorithm can find a solution by trying all possible solutions.

- the problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. In this sense, NP-complete problems are the hardest of the problems to which solutions can be verified quickly. If we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified.

Geometric complexity theory (GCT), is a research program in computational complexity theory proposed by Ketan Mulmuley and Milind Sohoni. The goal of the program is to answer the most famous open problem in computer science – whether P = NP – by showing that the complexity class P is not equal to the complexity class NP.