This article needs additional citations for verification. (December 2011)

The following are common definitions related to the machine vision field.

General related fields

In optics, the Q factor of a resonant cavity is given by

$$Q = \frac{2\pi f_o E}{P},$$

where $f_o$ is the resonant frequency, $E$ is the stored energy in the cavity, and $P$ is the power dissipated. The optical Q is equal to the ratio of the resonant frequency to the bandwidth of the cavity resonance. The average lifetime of a resonant photon in the cavity is proportional to the cavity's Q. If the Q factor of a laser's cavity is abruptly changed from a low value to a high one, the laser will emit a pulse of light that is much more intense than the laser's normal continuous output. This technique is known as Q-switching.
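The relations in this entry can be checked numerically. The following is a minimal sketch, assuming the standard energy-based definition Q = 2π·f_o·E/P and the common proportionality constant τ = Q/(2π·f_o) for the photon lifetime; the function names and the example values are hypothetical and chosen only for illustration.

```python
import math


def q_from_energy(f0_hz, stored_energy_j, dissipated_power_w):
    """Q = 2*pi*f0*E/P, the energy-based definition (assumed here)."""
    return 2.0 * math.pi * f0_hz * stored_energy_j / dissipated_power_w


def q_from_bandwidth(f0_hz, bandwidth_hz):
    """Q as the ratio of resonant frequency to resonance bandwidth."""
    return f0_hz / bandwidth_hz


def photon_lifetime_s(q, f0_hz):
    """Photon lifetime, assuming the constant of proportionality 1/(2*pi*f0)."""
    return q / (2.0 * math.pi * f0_hz)


if __name__ == "__main__":
    f0 = 3.0e14  # hypothetical optical resonance (about 1 micrometre wavelength)
    bw = 1.0e6   # hypothetical 1 MHz resonance bandwidth
    q = q_from_bandwidth(f0, bw)
    print(f"Q = {q:.3g}, photon lifetime = {photon_lifetime_s(q, f0):.3g} s")
```

Under these assumptions, a narrower resonance at the same frequency gives a higher Q and a proportionally longer photon lifetime.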

In digital imaging, a pixel, pel, or picture element is the smallest addressable element in a raster image, or the smallest addressable element in a dot matrix display device. In most digital display devices, pixels are the smallest element that can be manipulated through software.
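To illustrate what "smallest addressable element" means in practice, here is a minimal sketch that addresses pixels in a raster stored as a flat list. The row-major layout and the helper names (`pixel_index`, `get_pixel`, `set_pixel`) are assumptions for illustration; real image libraries and display devices may use other layouts.

```python
def pixel_index(x, y, width):
    """Flat index of pixel (x, y) in a row-major raster of the given width."""
    return y * width + x


def _check_bounds(x, y, width, height):
    if not (0 <= x < width and 0 <= y < height):
        raise IndexError(f"pixel ({x}, {y}) is outside a {width}x{height} image")


def get_pixel(buffer, x, y, width, height):
    """Read the value stored at column x, row y."""
    _check_bounds(x, y, width, height)
    return buffer[pixel_index(x, y, width)]


def set_pixel(buffer, x, y, width, height, value):
    """Overwrite the value stored at column x, row y."""
    _check_bounds(x, y, width, height)
    buffer[pixel_index(x, y, width)] = value


if __name__ == "__main__":
    width, height = 4, 3
    image = [0] * (width * height)  # grayscale raster, all black
    set_pixel(image, 1, 2, width, height, 255)
    print(image)
```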

A camera is an instrument used to capture and store images and videos, either digitally via an electronic image sensor, or chemically via a light-sensitive material such as photographic film. As a pivotal technology in the fields of photography and videography, cameras have played a significant role in the progression of visual arts, media, entertainment, surveillance, and scientific research. The camera was invented in the 19th century and has since evolved with advances in technology, leading to a vast array of types and models in the 21st century.

A digital camera, also called a digicam, is a camera that captures photographs in digital memory. Most cameras produced today are digital, largely replacing those that capture images on photographic film or film stock. Digital cameras are now widely incorporated into mobile devices such as smartphones, with capabilities and features equal to or greater than those of dedicated cameras. High-end, high-definition dedicated cameras are still commonly used by professionals and those who desire to take higher-quality photographs.

Astrophotography, also known as astronomical imaging, is the photography or imaging of astronomical objects, celestial events, or areas of the night sky. The first photograph of an astronomical object was taken in 1840, but it was not until the late 19th century that advances in technology allowed for detailed stellar photography. Besides recording the details of extended objects such as the Moon, Sun, and planets, modern astrophotography can image objects that are invisible to the human eye, such as dim stars, nebulae, and galaxies. This is accomplished through long exposures, since both film and digital cameras can accumulate and sum photons over long periods of time, or by using specialized optical filters that limit the photons to a certain wavelength.

A camera lens is an optical lens or assembly of lenses used in conjunction with a camera body and mechanism to make images of objects either on photographic film or on other media capable of storing an image chemically or electronically.

A camcorder is a self-contained portable electronic device with video capture and recording as its primary function. It is typically equipped with an articulating screen mounted on the left side, a strap on the right side to facilitate holding, a hot-swappable battery facing the user, hot-swappable recording media, and an internally contained quiet optical zoom lens.

A 3D display is a display device capable of conveying depth to the viewer. Many 3D displays are stereoscopic displays, which produce a basic 3D effect by means of stereopsis but can cause eye strain and visual fatigue. Newer 3D displays, such as holographic and light field displays, produce a more realistic 3D effect by combining stereopsis with accurate focal length for the displayed content, and so cause less visual fatigue than classical stereoscopic displays.

Computational photography refers to digital image capture and processing techniques that use digital computation instead of optical processes. Computational photography can improve the capabilities of a camera, introduce features that were not possible at all with film-based photography, or reduce the cost or size of camera elements. Examples of computational photography include in-camera computation of digital panoramas, high-dynamic-range images, and light field cameras. Light field cameras use novel optical elements to capture three-dimensional scene information, which can then be used to produce 3D images, enhanced depth-of-field, and selective de-focusing. Enhanced depth-of-field reduces the need for mechanical focusing systems. All of these features use computational imaging techniques.

A digital single-lens reflex camera is a digital camera that combines the optics and mechanisms of a single-lens reflex camera with a solid-state image sensor and digitally records the images from the sensor.

In photography and optics, vignetting is a reduction of an image's brightness or saturation toward the periphery compared to the image center. The word vignette, from the same root as vine, originally referred to a decorative border in a book. Later, the word came to be used for a photographic portrait that is clear at the center and fades off toward the edges. A similar effect is visible in photographs of projected images or videos off a projection screen, resulting in a so-called "hotspot" effect.

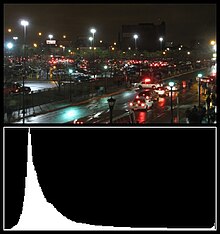

Image noise is random variation of brightness or color information in images, and is usually an aspect of electronic noise. It can be produced by the image sensor and circuitry of a scanner or digital camera. Image noise can also originate in film grain and in the unavoidable shot noise of an ideal photon detector. Image noise is an undesirable by-product of image capture that obscures the desired information. Typically the term “image noise” is used to refer to noise in 2D images, not 3D images.
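The shot noise mentioned above can be simulated to show how it scales. The sketch below assumes that photon arrivals follow a Poisson distribution, so a pixel that expects N photons has a standard deviation of about √N, and it draws samples with Knuth's multiplication method, which is only practical for modest means. The function names and the patch size are hypothetical.

```python
import math
import random


def poisson_sample(mean, rng):
    """Draw one Poisson-distributed count using Knuth's multiplication method."""
    threshold = math.exp(-mean)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def add_shot_noise(expected_photons, rng):
    """Replace each pixel's expected photon count with a noisy Poisson draw."""
    return [[poisson_sample(v, rng) for v in row] for row in expected_photons]


if __name__ == "__main__":
    rng = random.Random(0)              # fixed seed so the run is repeatable
    flat = [[25.0] * 64 for _ in range(64)]  # uniform patch, 25 photons expected
    noisy = add_shot_noise(flat, rng)
    values = [v for row in noisy for v in row]
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    print(f"mean = {mean:.2f}, std = {std:.2f}, SNR = {mean / std:.2f}")
```

Even though the simulated detector is ideal, the uniform patch comes out speckled, which is the sense in which shot noise is unavoidable.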

A light field camera, also known as a plenoptic camera, is a camera that captures information about the light field emanating from a scene; that is, the intensity of light in a scene, and also the precise direction that the light rays are traveling in space. This contrasts with conventional cameras, which record only light intensity at various wavelengths.

A telecentric lens is a special optical lens that has its entrance or exit pupil, or both, at infinity. The size of images produced by a telecentric lens is insensitive to either the distance between an object being imaged and the lens, or the distance between the image plane and the lens, or both, and such an optical property is called telecentricity. Telecentric lenses are used for precision optical two-dimensional measurements, reproduction, and other applications that are sensitive to the image magnification or the angle of incidence of light.
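The practical consequence of telecentricity for measurement can be sketched by comparing two idealized models. The code assumes a pinhole approximation for a conventional (entocentric) lens, where image size scales as focal length over object distance, and an ideal object-space telecentric lens with a fixed magnification. Both models, the function names, and the numbers are illustrative assumptions, not a description of any particular lens.

```python
def entocentric_image_height(object_height_mm, distance_mm, focal_length_mm):
    """Pinhole approximation of a conventional lens: size scales as f / distance."""
    return focal_length_mm * object_height_mm / distance_mm


def telecentric_image_height(object_height_mm, magnification):
    """Ideal object-space telecentric lens: size depends only on magnification."""
    return magnification * object_height_mm


if __name__ == "__main__":
    part_height = 10.0  # hypothetical part height in mm
    for distance in (100.0, 105.0, 110.0):
        conventional = entocentric_image_height(part_height, distance, 25.0)
        telecentric = telecentric_image_height(part_height, 0.25)
        print(f"distance {distance:5.1f} mm: conventional {conventional:.3f} mm, "
              f"telecentric {telecentric:.3f} mm")
```

In the conventional model the measured size shrinks as the part moves away from the lens, while the telecentric value stays fixed, which is why such lenses suit dimensional gauging.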

An image sensor or imager is a sensor that detects and conveys information used to form an image. It does so by converting the variable attenuation of light waves into signals, small bursts of current that convey the information. The waves can be light or other electromagnetic radiation. Image sensors are used in electronic imaging devices of both analog and digital types, which include digital cameras, camera modules, camera phones, optical mouse devices, medical imaging equipment, night vision equipment such as thermal imaging devices, radar, sonar, and others. As technology changes, electronic and digital imaging tends to replace chemical and analog imaging.

Range imaging is the name for a collection of techniques that are used to produce a 2D image showing the distance to points in a scene from a specific point, normally associated with some type of sensor device.
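A range image can be turned back into 3D points once the sensor geometry is known. The sketch below assumes a pinhole sensor model in which each pixel stores depth along the optical axis (not radial distance), with hypothetical intrinsics `fx`, `fy` (focal lengths in pixels) and `cx`, `cy` (principal point); none of these names come from the text above.

```python
def range_image_to_points(depth, fx, fy, cx, cy):
    """Back-project a 2D grid of depths into a list of (x, y, z) points.

    Pixels with a missing or non-positive depth are skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Tiny hypothetical 2x2 range image in metres; 0.0 marks "no return".
    depth = [[2.0, 2.0],
             [0.0, 4.0]]
    for point in range_image_to_points(depth, fx=2.0, fy=2.0, cx=0.0, cy=0.0):
        print(point)
```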

Document cameras, also known as visual presenters, visualizers, digital overheads, or docucams, are real-time image capture devices used to display an object to a large audience, such as in a classroom. They can also serve as replacements for image scanners. Similar to opaque projectors, document cameras can magnify and project the images of actual, three-dimensional objects, as well as transparencies. In essence, they are high-resolution web cams, mounted on arms, allowing them to be positioned over a page. The camera connects to a projector or similar video streaming system, enabling a teacher, lecturer, or presenter to write on a sheet of paper or display a two- or three-dimensional object while the audience watches. Different types of document cameras and visualizers offer flexibility in object placement. Larger objects, for instance, can be positioned in front of the camera, which can then be rotated as needed. Alternatively, a ceiling-mounted document camera can be used to create a larger working area.

A digital microscope is a variation of a traditional optical microscope that uses optics and a digital camera to output an image to a monitor, sometimes by means of software running on a computer. A digital microscope often has its own in-built LED light source, and differs from an optical microscope in that there is no provision to observe the sample directly through an eyepiece. Since the image is focused on the digital circuit, the entire system is designed for the monitor image. The optics for the human eye are omitted.

The study of image formation encompasses the radiometric and geometric processes by which 2D images of 3D objects are formed. In the case of digital images, the image formation process also includes analog to digital conversion and sampling.
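The sampling and analog-to-digital conversion steps can be sketched as follows. This is a simplified illustration that assumes a continuous irradiance function normalized to [0, 1], point sampling on a regular grid, and uniform 8-bit quantization; real sensors integrate light over each pixel's area and add noise, which is omitted here. The function names are hypothetical.

```python
def quantize(value, levels=256):
    """Map a normalized intensity in [0, 1] to an integer code in [0, levels - 1]."""
    clipped = min(max(value, 0.0), 1.0)
    return int(clipped * (levels - 1) + 0.5)


def sample_and_quantize(irradiance, width, height, levels=256):
    """Sample irradiance(u, v) on a width x height grid, then quantize each sample.

    u and v run from 0.0 to 1.0 across the image (assumed normalization).
    """
    image = []
    for row in range(height):
        v = row / (height - 1) if height > 1 else 0.0
        line = []
        for col in range(width):
            u = col / (width - 1) if width > 1 else 0.0
            line.append(quantize(irradiance(u, v), levels))
        image.append(line)
    return image


if __name__ == "__main__":
    # Hypothetical scene: a horizontal brightness ramp.
    print(sample_and_quantize(lambda u, v: u, width=5, height=1))
```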

This glossary defines terms that are used in the document "Defining Video Quality Requirements: A Guide for Public Safety", developed by the Video Quality in Public Safety (VQIPS) Working Group. It contains terminology and explanations of concepts relevant to the video industry. The purpose of the glossary is to inform the reader of commonly used vocabulary terms in the video domain. This glossary was compiled from various industry sources.