A Fourier series is an expansion of a periodic function into a sum of trigonometric functions. The Fourier series is an example of a trigonometric series. By expressing a function as a sum of sines and cosines, many problems involving the function become easier to analyze because trigonometric functions are well understood. For example, Fourier series were first used by Joseph Fourier to find solutions to the heat equation. This application is possible because the derivatives of trigonometric functions fall into simple patterns. Fourier series cannot be used to approximate arbitrary functions, because most functions have infinitely many terms in their Fourier series, and the series do not always converge. Well-behaved functions, for example smooth functions, have Fourier series that converge to the original function. The coefficients of the Fourier series are determined by integrals of the function multiplied by trigonometric functions, described in Common forms of the Fourier series below.
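The coefficient integrals mentioned above can be sketched numerically. The example below is a minimal illustration, not a reference implementation: it assumes the convention f(x) ~ a_0/2 + Σ (a_n cos(nx) + b_n sin(nx)) on [-π, π], and the helper name `fourier_coefficients` and the midpoint-rule sampling are choices made here, not taken from the text.

```python
import math

def fourier_coefficients(f, n_max, samples=4000):
    """Approximate a_n and b_n for n = 0..n_max on [-pi, pi] with the midpoint rule."""
    h = 2 * math.pi / samples
    xs = [-math.pi + (k + 0.5) * h for k in range(samples)]
    a, b = [], []
    for n in range(n_max + 1):
        # a_n = (1/pi) * integral of f(x) cos(nx) dx; b_n uses sin(nx) instead.
        a.append(sum(f(x) * math.cos(n * x) for x in xs) * h / math.pi)
        b.append(sum(f(x) * math.sin(n * x) for x in xs) * h / math.pi)
    return a, b

# Sawtooth f(x) = x on (-pi, pi): exact values are a_n = 0 and b_n = 2 * (-1)**(n + 1) / n.
a, b = fourier_coefficients(lambda x: x, 3)
```

For the sawtooth, the computed `b[1]`, `b[2]`, `b[3]` should land close to 2, -1 and 2/3, while every `a[n]` stays near zero because the function is odd.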

In linear algebra, two vectors in an inner product space are orthonormal if they are orthogonal unit vectors. A unit vector is a vector of length 1, also called a normalized vector. Orthogonal means that the vectors are perpendicular to each other. A set of vectors forms an orthonormal set if all vectors in the set are mutually orthogonal and of unit length. An orthonormal set that forms a basis is called an orthonormal basis.
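As a small illustration of the definition, the sketch below checks orthonormality with the ordinary dot product on real coordinate vectors. The function names `dot` and `is_orthonormal` and the tolerance are assumptions made for this example.

```python
import math

def dot(u, v):
    """Standard dot product of two real vectors of equal length."""
    return sum(ui * vi for ui, vi in zip(u, v))

def is_orthonormal(vectors, tol=1e-12):
    """True if every pair is orthogonal and every vector has unit length."""
    for i, u in enumerate(vectors):
        for j, v in enumerate(vectors):
            expected = 1.0 if i == j else 0.0
            if abs(dot(u, v) - expected) > tol:
                return False
    return True

s = 1 / math.sqrt(2)
rotated_basis = [(s, s), (s, -s)]            # the standard basis rotated by 45 degrees
print(is_orthonormal(rotated_basis))         # True
print(is_orthonormal([(1, 0), (1, 1)]))      # False: the two vectors are not orthogonal
```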

Integration is the basic operation in integral calculus. While differentiation has straightforward rules by which the derivative of a complicated function can be found by differentiating its simpler component functions, integration does not, so tables of known integrals are often useful. This page lists some of the most common antiderivatives.

A generalized Fourier series is the expansion of a square integrable function into a sum of square integrable orthogonal basis functions. The standard Fourier series uses an orthonormal basis of trigonometric functions, and the series expansion is applied to periodic functions. In contrast, a generalized Fourier series uses any set of orthogonal basis functions and can apply to any square integrable function.
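One possible instance of a generalized Fourier series uses the Legendre polynomials, which are orthogonal on [-1, 1] with squared norm 2/(2n + 1). The sketch below is only an illustration under that choice of basis. The helper names and the midpoint-rule integration are assumptions of this example.

```python
def legendre(n, x):
    """Evaluate the Legendre polynomial P_n(x) with Bonnet's recurrence."""
    p_prev, p = 1.0, x
    if n == 0:
        return p_prev
    for k in range(1, n):
        # (k + 1) P_{k+1}(x) = (2k + 1) x P_k(x) - k P_{k-1}(x)
        p_prev, p = p, ((2 * k + 1) * x * p - k * p_prev) / (k + 1)
    return p

def legendre_coefficients(f, n_max, samples=4000):
    """Coefficients c_n = <f, P_n> / <P_n, P_n> on [-1, 1], via the midpoint rule."""
    h = 2.0 / samples
    xs = [-1.0 + (k + 0.5) * h for k in range(samples)]
    coeffs = []
    for n in range(n_max + 1):
        integral = sum(f(x) * legendre(n, x) for x in xs) * h
        coeffs.append((2 * n + 1) / 2 * integral)  # divide by the squared norm 2/(2n+1)
    return coeffs

# f(x) = x^2 equals (1/3) P_0(x) + (2/3) P_2(x) exactly.
coeffs = legendre_coefficients(lambda x: x * x, 3)
```

Because x² is itself a polynomial of degree 2, only the coefficients of P_0 and P_2 are nonzero, which makes the result easy to check by hand.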

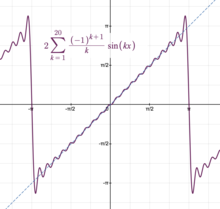

In mathematics, the Gibbs phenomenon is the oscillatory behavior of the Fourier series of a piecewise continuously differentiable periodic function around a jump discontinuity. The Nth partial Fourier series of the function produces large peaks around the jump which overshoot and undershoot the function values. As more sinusoids are used, this approximation error approaches a limit of about 9% of the jump, though the infinite Fourier series does converge to the function at every point of continuity.
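The roughly 9% overshoot can be observed numerically. The sketch below assumes a square wave equal to +1 on (0, π) and -1 on (-π, 0), whose Fourier series is (4/π) Σ sin((2j - 1)x)/(2j - 1). The function names and the grid search for the peak are choices made for this example only.

```python
import math

def square_wave_partial_sum(x, m):
    """Sum of the first m nonzero (odd) harmonics of the square wave."""
    return (4 / math.pi) * sum(
        math.sin((2 * j - 1) * x) / (2 * j - 1) for j in range(1, m + 1)
    )

def overshoot_fraction(m, grid=2000):
    """Peak overshoot above the value 1, as a fraction of the jump height 2."""
    xs = [(k + 0.5) * (math.pi / 2) / grid for k in range(grid)]
    peak = max(square_wave_partial_sum(x, m) for x in xs)
    return (peak - 1.0) / 2.0

# The overshoot does not shrink as more harmonics are added.
fractions = [overshoot_fraction(m) for m in (10, 50, 100)]
```

Each entry of `fractions` stays near 0.09: adding harmonics narrows the peak and moves it toward the jump, but does not reduce its height.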

In mathematics, an almost periodic function is, loosely speaking, a function of a real variable that is periodic to within any desired level of accuracy, given suitably long, well-distributed "almost-periods". The concept was first studied by Harald Bohr and later generalized by Vyacheslav Stepanov, Hermann Weyl and Abram Samoilovitch Besicovitch, amongst others. There is also a notion of almost periodic functions on locally compact abelian groups, first studied by John von Neumann.

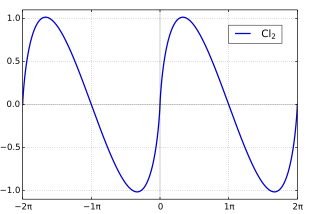

In mathematics, the Clausen function, introduced by Thomas Clausen, is a transcendental, special function of a single variable. It can variously be expressed in the form of a definite integral, a trigonometric series, and various other forms. It is intimately connected with the polylogarithm, inverse tangent integral, polygamma function, Riemann zeta function, Dirichlet eta function, and Dirichlet beta function.

In mathematical analysis, Parseval's identity, named after Marc-Antoine Parseval, is a fundamental result on the summability of the Fourier series of a function. The identity asserts the equality of the energy of a periodic signal and the energy of its frequency domain representation. Geometrically, it is a generalized Pythagorean theorem for inner-product spaces.
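As a hedged numerical check of the identity, consider the sawtooth f(x) = x on (-π, π), whose only nonzero Fourier coefficients are b_n = 2(-1)^(n+1)/n. With the normalization (1/π)∫|f|² dx = a_0²/2 + Σ (a_n² + b_n²), which is one common convention and an assumption of this sketch, both sides should equal 2π²/3.

```python
import math

# Left side: (1/pi) * integral of x^2 over [-pi, pi], evaluated in closed form.
energy_time = 2 * math.pi ** 2 / 3

# Right side: sum of b_n^2 = (2/n)^2, truncated after N terms.
N = 100000
energy_freq = sum((2.0 / n) ** 2 for n in range(1, N + 1))

# The difference is the tail of the series, roughly 4 / N.
gap = energy_time - energy_freq
```

The gap shrinks like 4/N, so the truncated sum approaches the time-domain energy from below.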

In mathematics, orthogonal functions belong to a function space that is a vector space equipped with a bilinear form. When the function space has an interval as the domain, the bilinear form may be the integral of the product of functions over the interval:
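Assuming real-valued functions $f$ and $g$ on an interval $[a, b]$ (these symbols are introduced here for illustration), such a bilinear form can be written as

```latex
\langle f, g \rangle = \int_a^b f(x)\, g(x)\, dx
```

and $f$ and $g$ are called orthogonal when $\langle f, g \rangle = 0$. For example, $\sin x$ and $\cos x$ are orthogonal on $[-\pi, \pi]$, since their product is an odd function.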

In the mathematical subfields of numerical analysis and mathematical analysis, a trigonometric polynomial is a finite linear combination of functions sin(nx) and cos(nx) with n taking on the values of one or more natural numbers. The coefficients may be taken as real numbers, for real-valued functions. For complex coefficients, there is no difference between such a function and a finite Fourier series.

In mathematics, the Fejér kernel is a summability kernel used to express the effect of Cesàro summation on Fourier series. It is a non-negative kernel, giving rise to an approximate identity. It is named after the Hungarian mathematician Lipót Fejér (1880–1959).
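A short sketch can make the link to Cesàro summation concrete. It assumes the unnormalized Dirichlet kernel D_k(x) = 1 + 2 Σ cos(jx) and defines the Fejér kernel as the arithmetic mean of D_0, ..., D_{n-1}. Some texts include an extra factor of 1/(2π), so the normalization and function names here are assumptions.

```python
import math

def dirichlet_kernel(k, x):
    """D_k(x) = 1 + 2 * sum_{j=1..k} cos(j x)."""
    return 1.0 + 2.0 * sum(math.cos(j * x) for j in range(1, k + 1))

def fejer_kernel_mean(n, x):
    """Arithmetic (Cesaro) mean of D_0, ..., D_{n-1}."""
    return sum(dirichlet_kernel(k, x) for k in range(n)) / n

def fejer_kernel_closed(n, x):
    """Closed form (1/n) * (sin(n x / 2) / sin(x / 2))**2, valid when sin(x/2) != 0."""
    return (math.sin(n * x / 2) / math.sin(x / 2)) ** 2 / n

xs = [0.1 + 0.2 * k for k in range(30)]   # sample points in (0, 2*pi), avoiding x = 0
max_diff = max(abs(fejer_kernel_mean(8, x) - fejer_kernel_closed(8, x)) for x in xs)
min_value = min(fejer_kernel_mean(8, x) for x in xs)
```

The two forms agree up to rounding, and the closed form is a square divided by n, which shows why the kernel is non-negative even though the individual Dirichlet kernels change sign.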

In mathematics, the question of whether the Fourier series of a periodic function converges to that function is studied in classical harmonic analysis, a branch of pure mathematics. Convergence is not guaranteed in general, and certain criteria must be met for it to occur.

In mathematics, there are several integrals known as the Dirichlet integral, after the German mathematician Peter Gustav Lejeune Dirichlet, one of which is the improper integral of the sinc function over the positive real number line.
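Written out, the improper integral of the sinc function referred to above has the well-known value

```latex
\int_0^\infty \frac{\sin x}{x}\, dx = \frac{\pi}{2}
```

The integral converges only conditionally: the integrand is not absolutely integrable on the positive real line.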

In mathematics, a series expansion is a technique that expresses a function as an infinite sum, or series, of simpler functions. It is a method for calculating a function that cannot be expressed by just elementary operators.

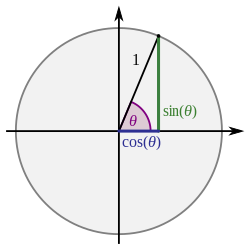

In mathematics, sine and cosine are trigonometric functions of an angle. The sine and cosine of an acute angle are defined in the context of a right triangle: for the specified angle, its sine is the ratio of the length of the side opposite that angle to the length of the longest side of the triangle (the hypotenuse), and the cosine is the ratio of the length of the adjacent leg to that of the hypotenuse. For an angle θ, the sine and cosine functions are denoted as sin(θ) and cos(θ).

Clenshaw–Curtis quadrature and Fejér quadrature are methods for numerical integration, or "quadrature", that are based on an expansion of the integrand in terms of Chebyshev polynomials. Equivalently, they employ a change of variables and use a discrete cosine transform (DCT) approximation for the cosine series. Besides having fast-converging accuracy comparable to Gaussian quadrature rules, Clenshaw–Curtis quadrature naturally leads to nested quadrature rules, which is important for both adaptive quadrature and multidimensional quadrature (cubature).
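The sketch below illustrates Clenshaw–Curtis quadrature on [-1, 1] using an explicit closed-form expression for the weights at the Chebyshev extreme points x_k = cos(kπ/n), for even n. It is a direct O(n²) evaluation rather than the DCT-based approach mentioned above, and the function names are assumptions of this example.

```python
import math

def clenshaw_curtis(n):
    """Nodes and weights on [-1, 1] for even n, using n + 1 Chebyshev extreme points."""
    if n < 2 or n % 2:
        raise ValueError("n must be an even integer >= 2")
    nodes = [math.cos(k * math.pi / n) for k in range(n + 1)]
    weights = []
    for k in range(n + 1):
        c_k = 1.0 if k in (0, n) else 2.0          # endpoints get half the factor
        s = 0.0
        for j in range(1, n // 2 + 1):
            b_j = 1.0 if j == n // 2 else 2.0      # last cosine term is halved
            s += b_j / (4 * j * j - 1) * math.cos(2 * j * k * math.pi / n)
        weights.append(c_k / n * (1.0 - s))
    return nodes, weights

def integrate(f, n=16):
    """Approximate the integral of f over [-1, 1] with an (n + 1)-point rule."""
    nodes, weights = clenshaw_curtis(n)
    return sum(w * f(x) for x, w in zip(nodes, weights))

approx = integrate(math.exp)
exact = math.e - 1 / math.e      # integral of e^x over [-1, 1]
```

With n = 2 the weights reduce to 1/3, 4/3, 1/3, which is Simpson's rule on [-1, 1]. Doubling n reuses all existing nodes, which is the nesting property noted above.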

In mathematics, particularly the field of calculus and Fourier analysis, the Fourier sine and cosine series are two mathematical series named after Joseph Fourier.

In mathematical analysis, the Dirichlet kernel, named after the German mathematician Peter Gustav Lejeune Dirichlet, is the collection of periodic functions defined as
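In one common normalization (the symbols $n$ and $x$ are introduced here, and some authors include an additional factor of $1/(2\pi)$), the kernel reads

```latex
D_n(x) = \sum_{k=-n}^{n} e^{ikx}
       = 1 + 2 \sum_{k=1}^{n} \cos(kx)
       = \frac{\sin\!\left(\left(n + \tfrac{1}{2}\right) x\right)}{\sin(x/2)}
```

where $n$ is any non-negative integer and the closed form on the right holds wherever $\sin(x/2) \neq 0$.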

Schlömilch's series is a Fourier-series-type expansion of a twice continuously differentiable function on the interval (0, π) in terms of the Bessel function of the first kind, named after the German mathematician Oskar Schlömilch, who derived the series in 1857. The real-valued function f(x) has the following expansion:
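Writing the function as $f$ and the zeroth-order Bessel function of the first kind as $J_0$, the general shape of the expansion, as it is usually stated, is

```latex
f(x) = a_0 + \sum_{n=1}^{\infty} a_n J_0(nx)
```

The coefficients $a_0$ and $a_n$ are given by integrals involving the derivative $f'$. Their explicit formulas are not reproduced here.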