Related Research Articles

Audio signal processing is a subfield of signal processing that is concerned with the electronic manipulation of audio signals. Audio signals are electronic representations of sound waves, which are longitudinal waves that travel through air and consist of compressions and rarefactions. The energy contained in audio signals, or sound power level, is typically measured in decibels. As audio signals may be represented in either digital or analog format, processing may occur in either domain. Analog processors operate directly on the electrical signal, while digital processors operate mathematically on its digital representation.

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.
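The encoder/decoder round trip described above can be illustrated with a minimal sketch using Python's standard `zlib` module. The input bytes are a made-up example chosen to be highly redundant; real data will usually compress less well.

```python
import zlib

# Hypothetical input: highly repetitive bytes, i.e. a lot of statistical redundancy.
original = b"speech coding " * 500

compressed = zlib.compress(original)    # encoder: fewer bits than the original
restored = zlib.decompress(compressed)  # decoder: reverses the process

# Lossless: the decoded bytes are identical to the input.
print(len(original), len(compressed), restored == original)
```

A lossy coder would not satisfy `restored == original`; it trades exact reconstruction for a smaller representation.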

Speech processing is the study of speech signals and of the methods used to process them. The signals are usually processed in a digital representation, so speech processing can be regarded as a special case of digital signal processing applied to speech signals. Aspects of speech processing include the acquisition, manipulation, storage, transfer and output of speech signals. Speech processing tasks include speech recognition, speech synthesis, speaker diarization, speech enhancement and speaker recognition.

Speech coding is an application of data compression to digital audio signals containing speech. It combines speech-specific parameter estimation, which uses audio signal processing techniques to model the speech signal, with generic data compression algorithms that represent the resulting model parameters in a compact bitstream.

A vocoder is a category of speech coding that analyzes and synthesizes the human voice signal for audio data compression, multiplexing, voice encryption or voice transformation.

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital signal of speech in compressed form, using the information of a linear predictive model.
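As a rough illustration of how a linear predictive model can be estimated, the sketch below uses the autocorrelation method with the Levinson-Durbin recursion, which is one common approach and not the only one. The function names and the synthetic test signal are assumptions made for this example.

```python
def autocorrelation(x, max_lag):
    """Return r[0..max_lag], the (unnormalized) autocorrelation of x."""
    n = len(x)
    return [sum(x[i] * x[i + lag] for i in range(n - lag))
            for lag in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve the LPC normal equations.

    Returns (a, err) with a[0] == 1, such that the prediction is
    x[n] ~= -sum(a[k] * x[n - k] for k in 1..order),
    and err is the remaining prediction-error energy.
    """
    a = [1.0] + [0.0] * order
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / err                      # reflection coefficient
        new_a = a[:]
        for j in range(1, i):
            new_a[j] = a[j] + k * a[i - j]
        new_a[i] = k
        a = new_a
        err *= 1.0 - k * k
    return a, err


# Hypothetical test signal: impulse response of the all-pole filter
# x[n] = 1.3 * x[n-1] - 0.6 * x[n-2] + impulse[n].
signal = []
for n in range(400):
    sample = 1.0 if n == 0 else 0.0
    if n >= 1:
        sample += 1.3 * signal[n - 1]
    if n >= 2:
        sample -= 0.6 * signal[n - 2]
    signal.append(sample)

coeffs, error = levinson_durbin(autocorrelation(signal, 2), order=2)
print(coeffs)  # close to [1.0, -1.3, 0.6] for this synthetic signal
```

The handful of coefficients in `coeffs` describes the spectral envelope of the whole signal, which is what makes the representation compact.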

Vector quantization (VQ) is a classical quantization technique from signal processing that allows the modeling of probability density functions by the distribution of prototype vectors. Developed in the early 1980s by Robert M. Gray, it was originally used for data compression. It works by dividing a large set of points (vectors) into groups having approximately the same number of points closest to them. Each group is represented by its centroid point, as in k-means and some other clustering algorithms. In simpler terms, vector quantization chooses a set of points to represent a larger set of points.
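The idea of representing many points by a few centroids can be sketched with a plain k-means style (Lloyd) iteration. This is only an illustrative sketch: the function names, the toy data and the choice of initial codebook are assumptions, and practical codebook training uses more careful initialization.

```python
def nearest(codebook, vector):
    """Index of the codeword closest to vector (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vector)),
    )


def train_codebook(vectors, initial_codebook, iterations=20):
    """Alternate between assigning vectors to codewords and recomputing centroids."""
    codebook = [tuple(c) for c in initial_codebook]
    for _ in range(iterations):
        groups = [[] for _ in codebook]
        for v in vectors:
            groups[nearest(codebook, v)].append(v)
        codebook = [
            # Centroid of each group; an empty group keeps its old codeword.
            tuple(sum(column) / len(group) for column in zip(*group)) if group else old
            for group, old in zip(groups, codebook)
        ]
    return codebook


# Toy data: two well-separated clusters of 2-D points.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
codebook = train_codebook(points, initial_codebook=[points[0], points[3]])

# Quantization: each point is replaced by the index of its nearest codeword.
indices = [nearest(codebook, p) for p in points]
print(codebook, indices)
```

Storing or transmitting `indices` plus the small `codebook` instead of the full vectors is where the compression comes from.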

Digital audio is a representation of sound recorded in, or converted into, digital form. In digital audio, the sound wave of the audio signal is typically encoded as numerical samples in a continuous sequence. For example, in CD audio, samples are taken 44,100 times per second, each with 16-bit resolution. Digital audio is also the name for the entire technology of sound recording and reproduction using audio signals that have been encoded in digital form. Following significant advances in digital audio technology during the 1970s and 1980s, it gradually replaced analog audio technology in many areas of audio engineering, record production and telecommunications in the 1990s and 2000s.
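The CD figures quoted above translate directly into a data rate. The short calculation below is a sketch; the helper name is hypothetical, and the two-channel case assumes stereo playback, which the paragraph above does not state explicitly.

```python
def pcm_bit_rate(sample_rate_hz, bits_per_sample, channels=1):
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bits_per_sample * channels


mono = pcm_bit_rate(44_100, 16)        # one channel: 705,600 bit/s
stereo = pcm_bit_rate(44_100, 16, 2)   # assumed two channels: 1,411,200 bit/s
print(mono, stereo)
```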

The mel scale is a perceptual scale of pitches judged by listeners to be equal in distance from one another. The reference point between this scale and normal frequency measurement is defined by assigning a perceptual pitch of 1000 mels to a 1000 Hz tone, 40 dB above the listener's threshold. Above about 500 Hz, increasingly large intervals are judged by listeners to produce equal pitch increments.
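Several closed-form approximations of the mel scale are in use. The sketch below assumes one widely used variant, m = 2595 log10(1 + f / 700), which is not given in the text above; other formulas give slightly different values. The function names are hypothetical.

```python
import math


def hz_to_mel(frequency_hz):
    """Approximate mel value for a frequency in Hz (assumed 2595/700 formula)."""
    return 2595.0 * math.log10(1.0 + frequency_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


# A 1000 Hz tone maps to roughly 1000 mels under this approximation.
print(hz_to_mel(1000.0))

# Equal steps in Hz produce shrinking steps in mels at higher frequencies.
print(hz_to_mel(1000.0) - hz_to_mel(0.0), hz_to_mel(2000.0) - hz_to_mel(1000.0))
```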

Mixed-excitation linear prediction (MELP) is a United States Department of Defense speech coding standard used mainly in military applications and satellite communications, secure voice, and secure radio devices. Its standardization and later development were led and supported by the NSA and NATO. The current "enhanced" version is known as MELPe.

Code-excited linear prediction (CELP) is a linear predictive speech coding algorithm originally proposed by Manfred R. Schroeder and Bishnu S. Atal in 1985. At the time, it provided significantly better quality than existing low bit-rate algorithms, such as residual-excited linear prediction (RELP) and linear predictive coding (LPC) vocoders. Along with its variants, such as algebraic CELP, relaxed CELP, low-delay CELP and vector sum excited linear prediction, it is currently the most widely used speech coding algorithm. It is also used in MPEG-4 Audio speech coding. CELP is commonly used as a generic term for a class of algorithms and not for a particular codec.

Line spectral pairs (LSP) or line spectral frequencies (LSF) are used to represent linear prediction coefficients (LPC) for transmission over a channel. LSPs have several properties that make them superior to direct quantization of LPCs. For this reason, LSPs are very useful in speech coding.

Bishnu S. Atal is an Indian physicist and engineer. He is a noted researcher in acoustics, and is best known for developments in speech coding. He advanced linear predictive coding (LPC) during the late 1960s to 1970s, and developed code-excited linear prediction (CELP) with Manfred R. Schroeder in 1985.

Manfred Robert Schroeder was a German physicist, best known for his contributions to acoustics and computer graphics. He wrote three books and published over 150 articles in his field.

Speaker adaptation fine-tunes either features or speech models to compensate for the mismatch caused by inter-speaker variation. Eigenvoice (EV) speaker adaptation uses prior knowledge of the training speakers to provide a fast adaptation algorithm. Kernel eigenvoice (KEV), inspired by the kernel eigenface idea in face recognition, is a non-linear generalization of EV. It incorporates kernel principal component analysis, a non-linear version of principal component analysis, to capture higher-order correlations, further explore the speaker space and enhance recognition performance.

An audio coding format is a content representation format for storage or transmission of digital audio. Examples of audio coding formats include MP3, AAC, Vorbis, FLAC, and Opus. A specific software or hardware implementation capable of audio compression and decompression to or from a specific audio coding format is called an audio codec; an example is LAME, one of several codecs that implement encoding and decoding of audio in the MP3 audio coding format in software.

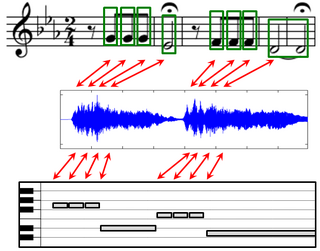

Music can be described and represented in many different ways, including sheet music, symbolic representations, and audio recordings. For each of these representations, there may exist different versions that correspond to the same musical work. The general goal of music alignment is to automatically link the various data streams, thus interrelating the multiple information sets related to a given musical work. More precisely, music alignment is a procedure which, for a given position in one representation of a piece of music, determines the corresponding position within another representation. In the figure above, such an alignment is visualized by the red bidirectional arrows. Such synchronization results form the basis for novel interfaces that allow users to access, search, and browse musical content in a convenient way.
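One way such a position-to-position mapping can be computed is dynamic time warping (DTW). The text above does not name a specific algorithm, so DTW is an assumption made for this sketch, as are the function name and the toy pitch sequences.

```python
def dtw_path(a, b):
    """Align two numeric sequences; return (total_cost, list of (i, j) index pairs)."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])

    # Backtrack from the end to recover which positions were matched.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path


# Toy example: a "score" as MIDI pitches and a "performance" that holds some notes longer.
score = [60, 62, 64, 65]
performance = [60, 60, 62, 64, 64, 65]
total, path = dtw_path(score, performance)
print(total, path)
```

Each pair `(i, j)` in `path` links position `i` in the first representation to position `j` in the second. Real systems typically align feature sequences such as chroma vectors instead of single pitch numbers.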

Multichannel Speaking Automaton (MUSA) was an early prototype of a speech synthesis machine, started in 1975.

John Makhoul is a Lebanese-American computer scientist who works in the field of speech and language processing. Makhoul's work on linear predictive coding was used in the establishment of the Network Voice Protocol, which enabled the transmission of speech signals over the ARPANET. He is recognized for his role in several areas of speech and language processing, including speech analysis, speech coding, speech recognition and speech understanding. He has made a number of significant contributions to the mathematical modeling of speech signals, including his work on linear prediction and vector quantization. His patented work on the direct application of speech recognition techniques to accurate, language-independent optical character recognition (OCR) has had a substantial impact on the ability to create OCR systems in multiple languages relatively quickly.

Matti Antero Karjalainen was a Finnish speech processing researcher and inventor in the fields of speech synthesis, speech analysis, speech technology, audio signal processing and psychoacoustics. He was the head of Acoustics Laboratory at the Helsinki University of Technology from 1980 to 2006.
