Audio signal processing is a subfield of signal processing that is concerned with the electronic manipulation of audio signals. Audio signals are electronic representations of sound waves—longitudinal waves which travel through air, consisting of compressions and rarefactions. The energy contained in audio signals or sound power level is typically measured in decibels. As audio signals may be represented in either digital or analog format, processing may occur in either domain. Analog processors operate directly on the electrical signal, while digital processors operate mathematically on its digital representation.

Speech coding is an application of data compression to digital audio signals containing speech. Speech coding uses speech-specific parameter estimation using audio signal processing techniques to model the speech signal, combined with generic data compression algorithms to represent the resulting modeled parameters in a compact bitstream.

Delta modulation is an analog-to-digital and digital-to-analog signal conversion technique used for transmission of voice information where quality is not of primary importance. DM is the simplest form of differential pulse-code modulation (DPCM) where the difference between successive samples is encoded into n-bit data streams. In delta modulation, the transmitted data are reduced to a 1-bit data stream representing either up (↗) or down (↘). Its main features are:

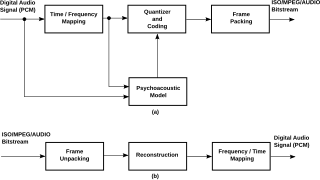

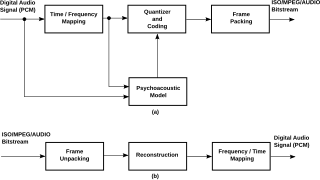

Adaptive Transform Acoustic Coding (ATRAC) is a family of proprietary audio compression algorithms developed by Sony. MiniDisc was the first commercial product to incorporate ATRAC, in 1992. ATRAC allowed a relatively small disc like MiniDisc to have the same running time as CD while storing audio information with minimal perceptible loss in quality. Improvements to the codec in the form of ATRAC3, ATRAC3plus, and ATRAC Advanced Lossless followed in 1999, 2002, and 2006 respectively.

Digital audio is a representation of sound recorded in, or converted into, digital form. In digital audio, the sound wave of the audio signal is typically encoded as numerical samples in a continuous sequence. For example, in CD audio, samples are taken 44,100 times per second, each with 16-bit resolution. Digital audio is also the name for the entire technology of sound recording and reproduction using audio signals that have been encoded in digital form. Following significant advances in digital audio technology during the 1970s and 1980s, it gradually replaced analog audio technology in many areas of audio engineering, record production and telecommunications in the 1990s and 2000s.

G.711 is a narrowband audio codec originally designed for use in telephony that provides toll-quality audio at 64 kbit/s. It is an ITU-T standard (Recommendation) for audio encoding, titled Pulse code modulation (PCM) of voice frequencies released for use in 1972.

H.261 is an ITU-T video compression standard, first ratified in November 1988. It is the first member of the H.26x family of video coding standards in the domain of the ITU-T Study Group 16 Video Coding Experts Group. It was the first video coding standard that was useful in practical terms.

Smacker video is a video file format developed by Epic Games Tools, and primarily used for full-motion video in video games. Smacker uses an adaptive 8-bit RGB palette. RAD's format for video at higher color depths is Bink Video. The Smacker format specifies a container format, a video compression format, and an audio compression format. Since its release in 1994, Smacker has been used in over 2300 games. Blizzard used this format for the cinematic videos seen in its games Warcraft II, StarCraft and Diablo I.

Continuously variable slope delta modulation is a voice coding method. It is a delta modulation with variable step size, first proposed by Greefkes and Riemens in 1970.

G.722 is an ITU-T standard 7 kHz wideband audio codec operating at 48, 56 and 64 kbit/s. It was approved by ITU-T in November 1988. Technology of the codec is based on sub-band ADPCM (SB-ADPCM). The corresponding narrow-band codec based on the same technology is G.726.

G.726 is an ITU-T ADPCM speech codec standard covering the transmission of voice at rates of 16, 24, 32, and 40 kbit/s. It was introduced to supersede both G.721, which covered ADPCM at 32 kbit/s, and G.723, which described ADPCM for 24 and 40 kbit/s. G.726 also introduced a new 16 kbit/s rate. The four bit rates associated with G.726 are often referred to by the bit size of a sample, which are 2, 3, 4, and 5-bits respectively. The corresponding wide-band codec based on the same technology is G.722.

Differential pulse-code modulation (DPCM) is a signal encoder that uses the baseline of pulse-code modulation (PCM) but adds some functionalities based on the prediction of the samples of the signal. The input can be an analog signal or a digital signal.

Dolby Digital Plus, also known as Enhanced AC-3, is a digital audio compression scheme developed by Dolby Labs for the transport and storage of multi-channel digital audio. It is a successor to Dolby Digital (AC-3), and has a number of improvements over that codec, including support for a wider range of data rates, an increased channel count, and multi-program support, as well as additional tools (algorithms) for representing compressed data and counteracting artifacts. Whereas Dolby Digital (AC-3) supports up to five full-bandwidth audio channels at a maximum bitrate of 640 kbit/s, E-AC-3 supports up to 15 full-bandwidth audio channels at a maximum bitrate of 6.144 Mbit/s.

H.120 was the first digital video compression standard. It was developed by the COST 211 European research project and published by the CCITT in 1984, with a revision in 1988 that included contributions proposed by other organizations. The video turned out not to be of adequate quality, there were few implementations, and there are no existing codecs for the format, but it provided important knowledge leading directly to its practical successors, such as H.261. The latest revision was published in March 1993.

SBC, or low-complexity subband codec, is an audio subband codec specified by the Bluetooth Special Interest Group (SIG) for the Advanced Audio Distribution Profile (A2DP). SBC is a digital audio encoder and decoder used to transfer data to Bluetooth audio output devices like headphones or loudspeakers. It can also be used on the Internet. It was designed with Bluetooth bandwidth limitations and processing power in mind to obtain a reasonably good audio quality at medium bit rates with low computational complexity. As of A2DP version 1.3, the Low Complexity Subband Coding remains the default codec and its implementation is mandatory for devices supporting that profile, but vendors are free to add their own codecs to match their needs.

aptX is a family of proprietary audio codec compression algorithms owned by Qualcomm, with a heavy emphasis on wireless audio applications.

In signal processing, sub-band coding (SBC) is any form of transform coding that breaks a signal into a number of different frequency bands, typically by using a fast Fourier transform, and encodes each one independently. This decomposition is often the first step in data compression for audio and video signals.

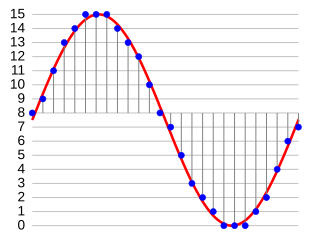

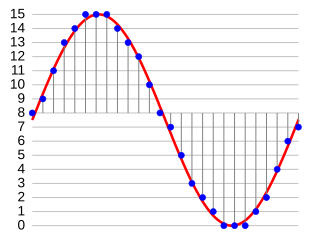

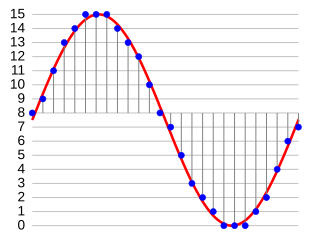

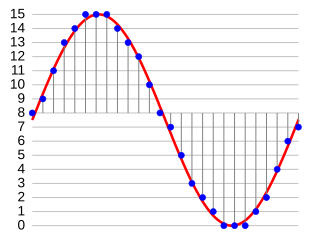

Pulse-code modulation (PCM) is a method used to digitally represent analog signals. It is the standard form of digital audio in computers, compact discs, digital telephony and other digital audio applications. In a PCM stream, the amplitude of the analog signal is sampled at uniform intervals, and each sample is quantized to the nearest value within a range of digital steps. Alec Reeves, Claude Shannon, Barney Oliver and John R. Pierce are credited with its invention.

An audio coding format is a content representation format for storage or transmission of digital audio. Examples of audio coding formats include MP3, AAC, Vorbis, FLAC, and Opus. A specific software or hardware implementation capable of audio compression and decompression to/from a specific audio coding format is called an audio codec; an example of an audio codec is LAME, which is one of several different codecs which implements encoding and decoding audio in the MP3 audio coding format in software.