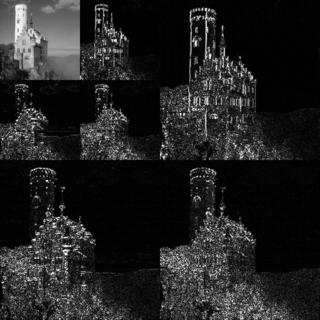

JPEG is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and image quality. JPEG typically achieves 10:1 compression with little perceptible loss in image quality. Since its introduction in 1992, JPEG has been the most widely used image compression standard in the world, and the most widely used digital image format, with several billion JPEG images produced every day as of 2015.

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) practical.

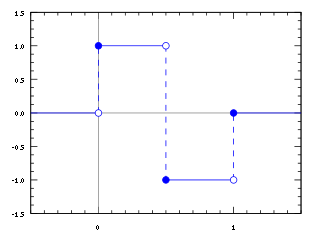

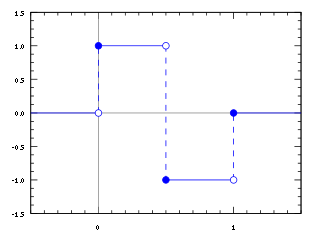

In mathematics, the Haar wavelet is a sequence of rescaled "square-shaped" functions which together form a wavelet family or basis. Wavelet analysis is similar to Fourier analysis in that it allows a target function over an interval to be represented in terms of an orthonormal basis. The Haar sequence is now recognised as the first known wavelet basis and is extensively used as a teaching example.

A discrete cosine transform (DCT) expresses a finite sequence of data points in terms of a sum of cosine functions oscillating at different frequencies. The DCT, first proposed by Nasir Ahmed in 1972, is a widely used transformation technique in signal processing and data compression. It is used in most digital media, including digital images, digital video, digital audio, digital television, digital radio, and speech coding. DCTs are also important to numerous other applications in science and engineering, such as digital signal processing, telecommunication devices, reducing network bandwidth usage, and spectral methods for the numerical solution of partial differential equations.

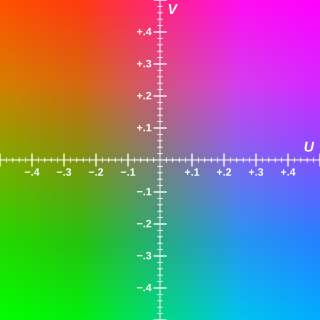

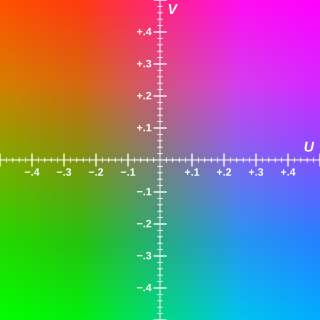

Y′UV, also written YUV, is the color model found in the PAL analogue color TV standard. A color is described as a Y′ component (luma) and two chroma components U and V. The prime symbol (') denotes that the luma is calculated from gamma-corrected RGB input and that it is different from true luminance. Today, the term YUV is commonly used in the computer industry to describe colorspaces that are encoded using YCbCr.

Digital image processing is the use of a digital computer to process digital images through an algorithm. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and distortion during processing. Since images are defined over two dimensions digital image processing may be modeled in the form of multidimensional systems. The generation and development of digital image processing are mainly affected by three factors: first, the development of computers; second, the development of mathematics ; third, the demand for a wide range of applications in environment, agriculture, military, industry and medical science has increased.

In physics and mathematics, the Lorentz group is the group of all Lorentz transformations of Minkowski spacetime, the classical and quantum setting for all (non-gravitational) physical phenomena. The Lorentz group is named for the Dutch physicist Hendrik Lorentz.

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms.

In digital photography, computer-generated imagery, and colorimetry, a greyscale or grayscale image is one in which the value of each pixel is a single sample representing only an amount of light; that is, it carries only intensity information. Grayscale images, are black-and-white or gray monochrome, and composed exclusively of shades of gray. The contrast ranges from black at the weakest intensity to white at the strongest.

YCbCr, Y′CbCr, or Y Pb/Cb Pr/Cr, also written as YCBCR or Y′CBCR, is a family of color spaces used as a part of the color image pipeline in video and digital photography systems. Y′ is the luma component and CB and CR are the blue-difference and red-difference chroma components. Y′ is distinguished from Y, which is luminance, meaning that light intensity is nonlinearly encoded based on gamma corrected RGB primaries.

sRGB is a standard RGB color space that HP and Microsoft created cooperatively in 1996 to use on monitors, printers, and the World Wide Web. It was subsequently standardized by the International Electrotechnical Commission (IEC) as IEC 61966-2-1:1999. sRGB is the current defined standard colorspace for the web, and it is usually the assumed colorspace for images that are neither tagged for a colorspace nor have an embedded color profile.

In numerical analysis and functional analysis, a discrete wavelet transform (DWT) is any wavelet transform for which the wavelets are discretely sampled. As with other wavelet transforms, a key advantage it has over Fourier transforms is temporal resolution: it captures both frequency and location information.

Dilution of precision (DOP), or geometric dilution of precision (GDOP), is a term used in satellite navigation and geomatics engineering to specify the error propagation as a mathematical effect of navigation satellite geometry on positional measurement precision.

The Rabi problem concerns the response of an atom to an applied harmonic electric field, with an applied frequency very close to the atom's natural frequency. It provides a simple and generally solvable example of light–atom interactions and is named after Isidor Isaac Rabi.

PGF is a wavelet-based bitmapped image format that employs lossless and lossy data compression. PGF was created to improve upon and replace the JPEG format. It was developed at the same time as JPEG 2000 but with a focus on speed over compression ratio.

Ordered dithering is any image dithering algorithm which uses a pre-set threshold map tiled across an image. It is commonly used to display a continuous image on a display of smaller color depth. For example, Microsoft Windows uses it in 16-color graphics modes. The algorithm is characterized by noticeable crosshatch patterns in the result.

In numerical analysis, the interval finite element method is a finite element method that uses interval parameters. Interval FEM can be applied in situations where it is not possible to get reliable probabilistic characteristics of the structure. This is important in concrete structures, wood structures, geomechanics, composite structures, biomechanics and in many other areas. The goal of the Interval Finite Element is to find upper and lower bounds of different characteristics of the model and use these results in the design process. This is so called worst case design, which is closely related to the limit state design.

The direct-quadrature-zerotransformation or zero-direct-quadraturetransformation is a tensor that rotates the reference frame of a three-element vector or a three-by-three element matrix in an effort to simplify analysis. The DQZ transform is the product of the Clarke transform and the Park transform, first proposed in 1929 by Robert H. Park.

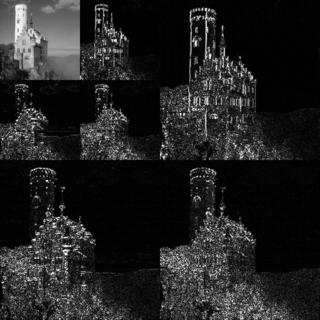

In digital image and video processing, a color layout descriptor (CLD) is designed to capture the spatial distribution of color in an image. The feature extraction process consists of two parts: grid based representative color selection and discrete cosine transform with quantization.

ICTCP, ICtCp, or ITP is a color representation format specified in the Rec. ITU-R BT.2100 standard that is used as a part of the color image pipeline in video and digital photography systems for high dynamic range (HDR) and wide color gamut (WCG) imagery. It was developed by Dolby Laboratories from the IPT color space by Ebner and Fairchild. The format is derived from an associated RGB color space by a coordinate transformation that includes two matrix transformations and an intermediate nonlinear transfer function that is informally known as gamma pre-correction. The transformation produces three signals called I, CT, and CP. The ICTCP transformation can be used with RGB signals derived from either the perceptual quantizer (PQ) or hybrid log–gamma (HLG) nonlinearity functions, but is most commonly associated with the PQ function.