In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. Higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques.

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates.

Image compression is a type of data compression applied to digital images, to reduce their cost for storage or transmission. Algorithms may take advantage of visual perception and the statistical properties of image data to provide superior results compared with generic data compression methods which are used for other digital data.

A video codec is software or hardware that compresses and decompresses digital video. In the context of video compression, codec is a portmanteau of encoder and decoder, while a device that only compresses is typically called an encoder, and one that only decompresses is a decoder.

Fractal compression is a lossy compression method for digital images, based on fractals. The method is best suited for textures and natural images, relying on the fact that parts of an image often resemble other parts of the same image. Fractal algorithms convert these parts into mathematical data called "fractal codes" which are used to recreate the encoded image.

In computing, Deflate is a lossless data compression file format that uses a combination of LZ77 and Huffman coding. It was designed by Phil Katz, for version 2 of his PKZIP archiving tool. Deflate was later specified in RFC 1951 (1996).

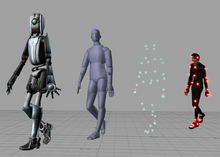

Texture mapping is a method for mapping a texture on a computer-generated graphic. "Texture" in this context can be high frequency detail, surface texture, or color.

A compression artifact is a noticeable distortion of media caused by the application of lossy compression. Lossy data compression involves discarding some of the media's data so that it becomes small enough to be stored within the desired disk space or transmitted (streamed) within the available bandwidth. If the compressor cannot store enough data in the compressed version, the result is a loss of quality, or introduction of artifacts. The compression algorithm may not be intelligent enough to discriminate between distortions of little subjective importance and those objectionable to the user.

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.

In computer graphics, texture filtering or texture smoothing is the method used to determine the texture color for a texture mapped pixel, using the colors of nearby texels.

S3 Texture Compression (S3TC) is a group of related lossy texture compression algorithms originally developed by Iourcha et al. of S3 Graphics, Ltd. for use in their Savage 3D computer graphics accelerator. The method of compression is strikingly similar to the previously published Color Cell Compression, which is in turn an adaptation of Block Truncation Coding published in the late 1970s. Unlike some image compression algorithms, S3TC's fixed-rate data compression coupled with the single memory access made it well-suited for use in compressing textures in hardware-accelerated 3D computer graphics. Its subsequent inclusion in Microsoft's DirectX 6.0 and OpenGL 1.3 led to widespread adoption of the technology among hardware and software makers. While S3 Graphics is no longer a competitor in the graphics accelerator market, license fees have been levied and collected for the use of S3TC technology until October 2017, for example in game consoles and graphics cards. The wide use of S3TC has led to a de facto requirement for OpenGL drivers to support it, but the patent-encumbered status of S3TC presented a major obstacle to open source implementations, while implementation approaches which tried to avoid the patented parts existed.

Generation loss is the loss of quality between subsequent copies or transcodes of data. Anything that reduces the quality of the representation when copying, and would cause further reduction in quality on making a copy of the copy, can be considered a form of generation loss. File size increases are a common result of generation loss, as the introduction of artifacts may actually increase the entropy of the data through each generation.

Digital artifact in information science, is any undesired or unintended alteration in data introduced in a digital process by an involved technique and/or technology.

PVRTC and PVRTC2 are a family of lossy, fixed-rate texture compression formats used in PowerVR's MBX, SGX and Rogue technologies. The PVRTC algorithm is documented in Simon Fenney's paper "Texture Compression using Low-Frequency Signal Modulation" that was presented at Graphics Hardware 2003.

Color Cell Compression is a lossy image compression algorithm developed by Campbell et al., in 1986, which can be considered an early forerunner of modern texture compression algorithms, such as S3 Texture Compression and Adaptive Scalable Texture Compression. It is closely related to Block Truncation Coding, another lossy image compression algorithm, which predates Color Cell Compression, in that it uses the dominant luminance of a block of pixels to partition said pixels into two representative colors. The primary difference between Block Truncation Coding and Color Cell Compression is that the former was designed to compress grayscale images and the latter was designed to compress color images. Also, Block Truncation Coding requires that the standard deviation of the colors of pixels in a block be computed in order to compress an image, whereas Color Cell Compression does not use the standard deviation. Both algorithms, though, can compress an image down to effectively 2 bits per pixel.

Adaptive scalable texture compression (ASTC) is a lossy block-based texture compression algorithm developed by Jørn Nystad et al. of ARM Ltd. and AMD.

A vision processing unit (VPU) is an emerging class of microprocessor; it is a specific type of AI accelerator, designed to accelerate machine vision tasks.

This is a glossary of terms relating to computer graphics.

SMPTE ST 2117-1, informally known as VC-6, is a video coding format.