Related Research Articles

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. The different versions of the photo of the cat on this page show how higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques.

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates.

Portable Network Graphics is a raster-graphics file format that supports lossless data compression. PNG was developed as an improved, non-patented replacement for Graphics Interchange Format (GIF)—unofficially, the initials PNG stood for the recursive acronym "PNG's not GIF".

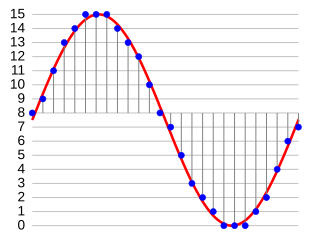

Image compression is a type of data compression applied to digital images, to reduce their cost for storage or transmission. Algorithms may take advantage of visual perception and the statistical properties of image data to provide superior results compared with generic data compression methods which are used for other digital data.

In information theory, an entropy coding is any lossless data compression method that attempts to approach the lower bound declared by Shannon's source coding theorem, which states that any lossless data compression method must have an expected code length greater than or equal to the entropy of the source.

Audio Interchange File Format (AIFF) is an audio file format standard used for storing sound data for personal computers and other electronic audio devices. The format was developed by Apple Inc. in 1988 based on Electronic Arts' Interchange File Format and is most commonly used on Apple Macintosh computer systems.

LZ77 and LZ78 are the two lossless data compression algorithms published in papers by Abraham Lempel and Jacob Ziv in 1977 and 1978. They are also known as LZ1 and LZ2 respectively. These two algorithms form the basis for many variations including LZW, LZSS, LZMA and others. Besides their academic influence, these algorithms formed the basis of several ubiquitous compression schemes, including GIF and the DEFLATE algorithm used in PNG and ZIP.

Tag Image File Format or Tagged Image File Format, commonly known by the abbreviations TIFF or TIF, is an image file format for storing raster graphics images, popular among graphic artists, the publishing industry, and photographers. TIFF is widely supported by scanning, faxing, word processing, optical character recognition, image manipulation, desktop publishing, and page-layout applications. The format was created by the Aldus Corporation for use in desktop publishing. It published the latest version 6.0 in 1992, subsequently updated with an Adobe Systems copyright after the latter acquired Aldus in 1994. Several Aldus or Adobe technical notes have been published with minor extensions to the format, and several specifications have been based on TIFF 6.0, including TIFF/EP, TIFF/IT, TIFF-F and TIFF-FX.

OpenEXR is a high-dynamic range, multi-channel raster file format, released as an open standard along with a set of software tools created by Industrial Light & Magic (ILM), under a free software license similar to the BSD license.

Adaptive coding refers to variants of entropy encoding methods of lossless data compression. They are particularly suited to streaming data, as they adapt to localized changes in the characteristics of the data, and don't require a first pass over the data to calculate a probability model. The cost paid for these advantages is that the encoder and decoder must be more complex to keep their states synchronized, and more computational power is needed to keep adapting the encoder/decoder state.

Prediction by partial matching (PPM) is an adaptive statistical data compression technique based on context modeling and prediction. PPM models use a set of previous symbols in the uncompressed symbol stream to predict the next symbol in the stream. PPM algorithms can also be used to cluster data into predicted groupings in cluster analysis.

ART is a proprietary image file format used mostly by the America Online (AOL) service and client software.

WavPack is a free and open-source lossless audio compression format and application implementing the format. It is unique in the way that it supports hybrid audio compression alongside normal compression which is similar to how FLAC works. It also supports compressing a wide variety of lossless formats, including various variants of PCM and also DSD as used in SACDs, together with its support for surround audio.

G.722 is an ITU-T standard 7 kHz wideband audio codec operating at 48, 56 and 64 kbit/s. It was approved by ITU-T in November 1988. Technology of the codec is based on sub-band ADPCM (SB-ADPCM). The corresponding narrow-band codec based on the same technology is G.726.

An image file format is a file format for a digital image. There are many formats that can be used, such as JPEG, PNG, and GIF. Most formats up until 2022 were for storing 2D images, not 3D ones. The data stored in an image file format may be compressed or uncompressed. If the data is compressed, it may be done so using lossy compression or lossless compression. For graphic design applications, vector formats are often used. Some image file formats support transparency.

Lempel–Ziv–Stac is a lossless data compression algorithm that uses a combination of the LZ77 sliding-window compression algorithm and fixed Huffman coding. It was originally developed by Stac Electronics for tape compression, and subsequently adapted for hard disk compression and sold as the Stacker disk compression software. It was later specified as a compression algorithm for various network protocols. LZS is specified in the Cisco IOS stack.

An audio coding format is a content representation format for storage or transmission of digital audio. Examples of audio coding formats include MP3, AAC, Vorbis, FLAC, and Opus. A specific software or hardware implementation capable of audio compression and decompression to/from a specific audio coding format is called an audio codec; an example of an audio codec is LAME, which is one of several different codecs which implements encoding and decoding audio in the MP3 audio coding format in software.

Zstandard is a lossless data compression algorithm developed by Yann Collet at Facebook. Zstd is the corresponding reference implementation in C, released as open-source software on 31 August 2016.

References

- ↑ "Adaptive compression". PC Magazine Encyclopedia. Archived from the original on 20 October 2007. Retrieved 21 April 2011.