In probability theory and related fields, a stochastic or random process is a mathematical object usually defined as a family of random variables. Many stochastic processes can be represented by time series. However, a stochastic process may be indexed by a continuous parameter such as time, while a time series is a set of observations indexed by integers. A stochastic process may involve several related random variables.

A Markov chain is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. A countably infinite sequence, in which the chain changes state at discrete time steps, gives a discrete-time Markov chain (DTMC). The continuous-time analogue is called a continuous-time Markov chain (CTMC). Markov chains are named after the Russian mathematician Andrey Markov.
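The defining feature of a discrete-time Markov chain, that the next state is drawn using only the current state, can be sketched in a few lines. The two-state "weather" chain below, including its state names and transition probabilities, is a hypothetical example chosen for illustration and is not taken from the text.

```python
import random

# Hypothetical two-state chain; states and probabilities are illustrative
# assumptions. Each row of probabilities sums to 1.
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def step(state, rng):
    """Draw the next state using only the current state."""
    next_states = list(TRANSITIONS[state])
    weights = [TRANSITIONS[state][s] for s in next_states]
    return rng.choices(next_states, weights=weights, k=1)[0]


def simulate(start, n_steps, seed=0):
    """Return a path with n_steps transitions, beginning at `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))
    return path


path = simulate("sunny", 10)
```

For this particular transition matrix the long-run fraction of time spent in the "sunny" state is 5/6, which a long simulated path should approximate.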

In probability theory and statistics, the term Markov property refers to the memoryless property of a stochastic process. It is named after the Russian mathematician Andrey Markov.

The strong Markov property is similar to the Markov property, except that the meaning of "present" is defined in terms of a random variable known as a stopping time.

In probability theory, a Lévy process, named after the French mathematician Paul Lévy, is a stochastic process with independent, stationary increments: it represents the motion of a point whose successive displacements are random, in which displacements in pairwise disjoint time intervals are independent, and displacements in different time intervals of the same length have identical probability distributions. A Lévy process may thus be viewed as the continuous-time analog of a random walk.
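The random-walk analogy can be made concrete: sampling a Lévy process on a regular time grid yields a random walk whose steps are independent and identically distributed. The sketch below uses Brownian motion with drift as the example Lévy process; the function name and the parameters `mu`, `sigma` and `dt` are assumptions introduced for illustration.

```python
import math
import random


def sample_brownian_with_drift(n_steps, dt, mu=0.0, sigma=1.0, seed=0):
    """Sample Brownian motion with drift at times 0, dt, 2*dt, ...

    Increments over disjoint grid intervals are drawn independently
    (independent increments) and all from the same normal distribution
    with mean mu*dt and standard deviation sigma*sqrt(dt)
    (stationary increments).
    """
    rng = random.Random(seed)
    values = [0.0]
    for _ in range(n_steps):
        increment = rng.gauss(mu * dt, sigma * math.sqrt(dt))
        values.append(values[-1] + increment)
    return values


values = sample_brownian_with_drift(n_steps=10_000, dt=0.01, mu=1.0, sigma=1.0)
increments = [b - a for a, b in zip(values, values[1:])]
mean_increment = sum(increments) / len(increments)
```

With these settings the sample mean of the increments should be close to `mu * dt`.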

In mathematics, a Markov decision process (MDP) is a discrete-time stochastic control process. It provides a mathematical framework for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker. MDPs are useful for studying optimization problems solved via dynamic programming. MDPs were known at least as early as the 1950s; a core body of research on Markov decision processes resulted from Ronald Howard's 1960 book, Dynamic Programming and Markov Processes. They are used in many disciplines, including robotics, automatic control, economics and manufacturing. The name of MDPs comes from the Russian mathematician Andrey Markov as they are an extension of Markov chains.
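As an illustration of solving an MDP by dynamic programming, the following is a minimal value iteration sketch. The two-state, two-action MDP, its rewards, and the discount factor `GAMMA` are hypothetical values chosen so that the result can be checked by hand.

```python
# Hypothetical MDP: P[state][action] is a list of
# (probability, next_state, reward) triples. All numbers are assumptions.
P = {
    0: {"stay": [(1.0, 0, 0.0)], "go": [(0.8, 1, 1.0), (0.2, 0, 0.0)]},
    1: {"stay": [(1.0, 1, 2.0)], "go": [(1.0, 0, 0.0)]},
}
GAMMA = 0.9  # discount factor (assumed)


def q_value(V, state, action):
    """Expected discounted return of taking `action` in `state`, then following V."""
    return sum(p * (r + GAMMA * V[s2]) for p, s2, r in P[state][action])


def value_iteration(tol=1e-10):
    """Apply the Bellman optimality update until values change by less than tol."""
    V = {s: 0.0 for s in P}
    while True:
        new_V = {s: max(q_value(V, s, a) for a in P[s]) for s in P}
        if max(abs(new_V[s] - V[s]) for s in P) < tol:
            return new_V
        V = new_V


V = value_iteration()
# Greedy policy with respect to the converged values.
policy = {s: max(P[s], key=lambda a: q_value(V, s, a)) for s in P}
```

For this example the values can be verified directly: staying in state 1 forever is worth 2 / (1 - 0.9) = 20, and the greedy policy moves from state 0 towards state 1.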

In probability theory, in particular in the study of stochastic processes, a stopping time is a specific type of “random time”: a random variable whose value is interpreted as the time at which a given stochastic process exhibits a certain behavior of interest. A stopping time is often defined by a stopping rule, a mechanism for deciding whether to continue or stop a process on the basis of the present position and past events, and which will almost always lead to a decision to stop at some finite time.
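A standard example of a stopping time is the first time a simple random walk leaves an interval: the decision to stop at step t depends only on the path up to step t. The sketch below assumes a symmetric walk with steps of ±1; the function name and parameters are hypothetical.

```python
import random


def first_exit_time(start, lower, upper, rng):
    """Return (t, position) at the first time the walk reaches lower or upper.

    The stopping rule inspects only the current position, which is a
    function of the past steps, so the returned time is a stopping time.
    For a symmetric walk on a bounded interval the loop ends with
    probability 1.
    """
    position, t = start, 0
    while lower < position < upper:
        position += rng.choice((-1, 1))
        t += 1
    return t, position


t, position = first_exit_time(start=5, lower=0, upper=10, rng=random.Random(0))
```

By contrast, "the last time the walk visits 5" is not a stopping time, because deciding whether a visit is the last one requires knowledge of the future path.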

In probability theory, an empirical process is a stochastic process that describes the proportion of objects in a system in a given state. For a process in a discrete state space, a population continuous-time Markov chain, or Markov population model, is a process that counts the number of objects in a given state. In mean field theory, limit theorems are considered that generalise the central limit theorem for empirical measures. Applications of the theory of empirical processes arise in non-parametric statistics.

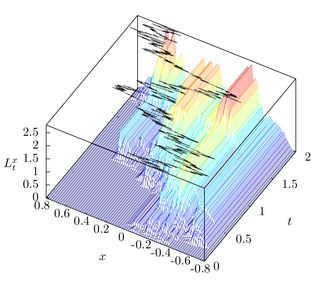

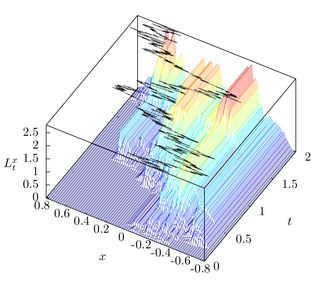

In the mathematical theory of stochastic processes, local time is a stochastic process associated with semimartingale processes such as Brownian motion, that characterizes the amount of time a particle has spent at a given level. Local time appears in various stochastic integration formulas, such as Tanaka's formula, if the integrand is not sufficiently smooth. It is also studied in statistical mechanics in the context of random fields.

In queueing models, a discipline within the mathematical theory of probability, the quasi-birth–death process describes a generalisation of the birth–death process. As with the birth–death process, it moves up and down between levels one at a time, but the time between these transitions can have a more complicated distribution, encoded in the blocks of its transition rate matrix.

In queueing theory, a discipline within the mathematical theory of probability, an M/M/1 queue represents the queue length in a system having a single server, where arrivals are determined by a Poisson process and job service times have an exponential distribution. The model name is written in Kendall's notation. The model is the most elementary of queueing models and an attractive object of study as closed-form expressions can be obtained for many metrics of interest in this model. An extension of this model with more than one server is the M/M/c queue.
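Some of the closed-form expressions mentioned above can be written out as a short sketch. The formulas are the standard steady-state results for a stable M/M/1 queue with arrival rate λ and service rate μ, where ρ = λ/μ < 1; the function and key names are assumptions introduced here.

```python
def mm1_metrics(arrival_rate, service_rate):
    """Steady-state metrics of an M/M/1 queue (requires arrival_rate < service_rate)."""
    if not 0 < arrival_rate < service_rate:
        raise ValueError("stability requires 0 < arrival_rate < service_rate")
    rho = arrival_rate / service_rate  # server utilization
    return {
        "utilization": rho,
        "mean_jobs_in_system": rho / (1 - rho),
        "mean_time_in_system": 1 / (service_rate - arrival_rate),
        "mean_jobs_waiting": rho**2 / (1 - rho),
        "mean_waiting_time": rho / (service_rate - arrival_rate),
    }


def prob_n_jobs(n, arrival_rate, service_rate):
    """Stationary probability of n jobs in the system: (1 - rho) * rho**n."""
    rho = arrival_rate / service_rate
    return (1 - rho) * rho**n


metrics = mm1_metrics(arrival_rate=2.0, service_rate=3.0)
```

With λ = 2 and μ = 3 the mean number of jobs in the system is 2 and the mean time in the system is 1, consistent with Little's law (L = λW).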

In actuarial science and applied probability, ruin theory uses mathematical models to describe an insurer's vulnerability to insolvency or ruin. In such models, key quantities of interest are the probability of ruin, the distribution of the surplus immediately prior to ruin, and the deficit at the time of ruin.

In the mathematical study of stochastic processes, a Harris chain is a Markov chain where the chain returns to a particular part of the state space an unbounded number of times. Harris chains are regenerative processes and are named after Theodore Harris. The theory of Harris chains and Harris recurrence is useful for treating Markov chains on general state spaces.

In queueing theory, a discipline within the mathematical theory of probability, a fluid queue is a mathematical model used to describe the fluid level in a reservoir subject to randomly determined periods of filling and emptying. The term dam theory was used in earlier literature for these models. The model has been used to approximate discrete models, to model the spread of wildfires, in ruin theory, and to model high-speed data networks. The model applies the leaky bucket algorithm to a stochastic source.

In probability theory, reflected Brownian motion is a Wiener process in a space with reflecting boundaries.

In queueing theory, a discipline within the mathematical theory of probability, the M/M/∞ queue is a multi-server queueing model where every arrival experiences immediate service and does not wait. In Kendall's notation it describes a system where arrivals are governed by a Poisson process and there are infinitely many servers, so jobs do not need to wait for a server. Each job has an exponentially distributed service time. It is a limit of the M/M/c queue model where the number of servers c becomes very large.
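A well-known consequence of this model is that the stationary number of jobs in the system follows a Poisson distribution with mean λ/μ, where λ is the arrival rate and μ the service rate of each server. The sketch below evaluates that distribution and checks the balance equations λ·π(n) = (n + 1)·μ·π(n + 1); the function name and the numerical rates are assumptions chosen for illustration.

```python
import math


def mm_inf_stationary_pmf(n, arrival_rate, service_rate):
    """Stationary probability of n busy servers: Poisson with mean arrival_rate / service_rate."""
    load = arrival_rate / service_rate
    return math.exp(-load) * load**n / math.factorial(n)


ARRIVAL_RATE, SERVICE_RATE = 4.0, 2.0  # assumed example rates

pmf = [mm_inf_stationary_pmf(n, ARRIVAL_RATE, SERVICE_RATE) for n in range(60)]
mean_jobs = sum(n * p for n, p in enumerate(pmf))

# Balance between neighbouring states: flow up from n equals flow down from n + 1.
balance_gaps = [
    abs(ARRIVAL_RATE * pmf[n] - (n + 1) * SERVICE_RATE * pmf[n + 1])
    for n in range(len(pmf) - 1)
]
```

The list is truncated at 60 states, which carries essentially all of the probability mass for a load of 2.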

In probability theory, an interacting particle system (IPS) is a stochastic process on a configuration space determined by a site space, which is a countably infinite graph, and a local state space, which is a compact metric space. More precisely, IPS are continuous-time Markov jump processes describing the collective behavior of stochastically interacting components. IPS are the continuous-time analogue of stochastic cellular automata.

In probability theory, Kelly's lemma states that for a stationary continuous-time Markov chain, the time-reversed process has the same stationary distribution as the forward-time process. The lemma is named after Frank Kelly.

In queueing theory, a discipline within the mathematical theory of probability, the G/M/1 queue represents the queue length in a system where interarrival times have a general distribution and service times for each job have an exponential distribution. The system is described in Kendall's notation where the G denotes a general distribution, M the exponential distribution for service times and the 1 that the model has a single server.

In the mathematical theory of probability, Blumenthal's zero–one law, named after Robert McCallum Blumenthal, is a statement about the nature of the beginnings of right-continuous Feller processes. Loosely, it states that any right-continuous Feller process started from a deterministic point also has deterministic initial movement.

An additive process, in probability theory, is a càdlàg stochastic process that is continuous in probability and has independent increments. An additive process is a generalization of a Lévy process, obtained by dropping the requirement that increments be stationary. An example of an additive process is a Brownian motion with a time-dependent drift. The additive process was introduced by Paul Lévy in 1937.