In machine learning, supervised learning (SL) is a paradigm where a model is trained using input objects and desired output values, which are often human-made labels. The training process builds a function that maps new data to expected output values. An optimal scenario will allow for the algorithm to accurately determine output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way. This statistical quality of an algorithm is measured via a generalization error.

SciPy is a free and open-source Python library used for scientific computing and technical computing.

In computer science, array programming refers to solutions that allow the application of operations to an entire set of values at once. Such solutions are commonly used in scientific and engineering settings.

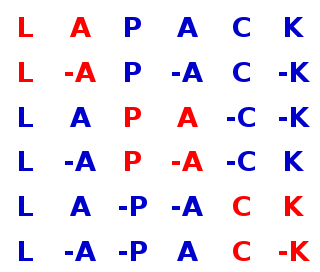

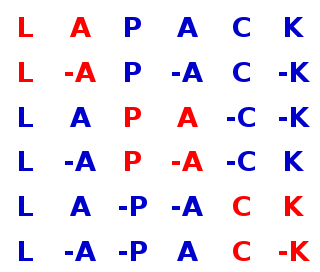

LAPACK is a standard software library for numerical linear algebra. It provides routines for solving systems of linear equations and linear least squares, eigenvalue problems, and singular value decomposition. It also includes routines to implement the associated matrix factorizations such as LU, QR, Cholesky and Schur decomposition. LAPACK was originally written in FORTRAN 77, but moved to Fortran 90 in version 3.2 (2008). The routines handle both real and complex matrices in both single and double precision. LAPACK relies on an underlying BLAS implementation to provide efficient and portable computational building blocks for its routines.

Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations such as vector addition, scalar multiplication, dot products, linear combinations, and matrix multiplication. They are the de facto standard low-level routines for linear algebra libraries; the routines have bindings for both C and Fortran. Although the BLAS specification is general, BLAS implementations are often optimized for speed on a particular machine, so using them can bring substantial performance benefits. BLAS implementations will take advantage of special floating point hardware such as vector registers or SIMD instructions.

In computing, CUDA is a proprietary parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for accelerated general-purpose processing, an approach called general-purpose computing on GPUs. CUDA was created by Nvidia in 2006. When it was first introduced, the name was an acronym for Compute Unified Device Architecture, but Nvidia later dropped the common use of the acronym and now rarely expands it.

Shogun is a free, open-source machine learning software library written in C++. It offers numerous algorithms and data structures for machine learning problems. It offers interfaces for Octave, Python, R, Java, Lua, Ruby and C# using SWIG.

Apache Mahout is a project of the Apache Software Foundation to produce free implementations of distributed or otherwise scalable machine learning algorithms focused primarily on linear algebra. In the past, many of the implementations use the Apache Hadoop platform, however today it is primarily focused on Apache Spark. Mahout also provides Java/Scala libraries for common math operations and primitive Java collections. Mahout is a work in progress; a number of algorithms have been implemented.

Armadillo is a linear algebra software library for the C++ programming language. It aims to provide efficient and streamlined base calculations, while at the same time having a straightforward and easy-to-use interface. Its intended target users are scientists and engineers.

ALGLIB is a cross-platform open source numerical analysis and data processing library. It can be used from several programming languages.

IT++ is a C++ library of classes and functions for linear algebra, numerical optimization, signal processing, communications, and statistics. It is being developed by researchers in these areas and is widely used by researchers, both in the communications industry and universities. The IT++ library originates from the former Department of Information Theory at the Chalmers University of Technology, Gothenburg, Sweden.

Vowpal Wabbit (VW) is an open-source fast online interactive machine learning system library and program developed originally at Yahoo! Research, and currently at Microsoft Research. It was started and is led by John Langford. Vowpal Wabbit's interactive learning support is particularly notable including Contextual Bandits, Active Learning, and forms of guided Reinforcement Learning. Vowpal Wabbit provides an efficient scalable out-of-core implementation with support for a number of machine learning reductions, importance weighting, and a selection of different loss functions and optimization algorithms.

Eclipse Deeplearning4j is a programming library written in Java for the Java virtual machine (JVM). It is a framework with wide support for deep learning algorithms. Deeplearning4j includes implementations of the restricted Boltzmann machine, deep belief net, deep autoencoder, stacked denoising autoencoder and recursive neural tensor network, word2vec, doc2vec, and GloVe. These algorithms all include distributed parallel versions that integrate with Apache Hadoop and Spark.

The following outline is provided as an overview of, and topical guide to, machine learning:

Owl Scientific Computing is a software system for scientific and engineering computing developed in the Department of Computer Science and Technology, University of Cambridge. The System Research Group (SRG) in the department recognises Owl as one of the representative systems developed in SRG in the 2010s. The source code is licensed under the MIT License and can be accessed from the GitHub repository.

The first edition of the textbook Data Science and Predictive Analytics: Biomedical and Health Applications using R, authored by Ivo D. Dinov, was published in August 2018 by Springer. The second edition of the book was printed in 2023.