In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events.

In mathematical analysis, a function of bounded variation, also known as BV function, is a real-valued function whose total variation is bounded (finite): the graph of a function having this property is well behaved in a precise sense. For a continuous function of a single variable, being of bounded variation means that the distance along the direction of the y-axis, neglecting the contribution of motion along x-axis, traveled by a point moving along the graph has a finite value. For a continuous function of several variables, the meaning of the definition is the same, except for the fact that the continuous path to be considered cannot be the whole graph of the given function, but can be every intersection of the graph itself with a hyperplane parallel to a fixed x-axis and to the y-axis.

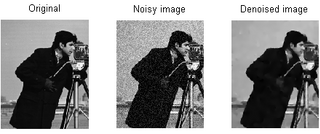

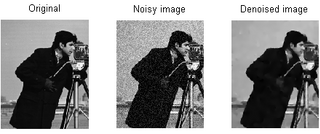

Noise reduction is the process of removing noise from a signal. Noise reduction techniques exist for audio and images. Noise reduction algorithms may distort the signal to some degree. Noise rejection is the ability of a circuit to isolate an undesired signal component from the desired signal component, as with common-mode rejection ratio.

In mathematics, the total variation identifies several slightly different concepts, related to the (local or global) structure of the codomain of a function or a measure. For a real-valued continuous function f, defined on an interval [a, b] ⊂ R, its total variation on the interval of definition is a measure of the one-dimensional arclength of the curve with parametric equation x ↦ f(x), for x ∈ [a, b]. Functions whose total variation is finite are called functions of bounded variation.

In computer vision and image processing, Otsu's method, named after Nobuyuki Otsu, is used to perform automatic image thresholding. In the simplest form, the algorithm returns a single intensity threshold that separate pixels into two classes, foreground and background. This threshold is determined by minimizing intra-class intensity variance, or equivalently, by maximizing inter-class variance. Otsu's method is a one-dimensional discrete analogue of Fisher's discriminant analysis, is related to Jenks optimization method, and is equivalent to a globally optimal k-means performed on the intensity histogram. The extension to multi-level thresholding was described in the original paper, and computationally efficient implementations have since been proposed.

Geometry processing is an area of research that uses concepts from applied mathematics, computer science and engineering to design efficient algorithms for the acquisition, reconstruction, analysis, manipulation, simulation and transmission of complex 3D models. As the name implies, many of the concepts, data structures, and algorithms are directly analogous to signal processing and image processing. For example, where image smoothing might convolve an intensity signal with a blur kernel formed using the Laplace operator, geometric smoothing might be achieved by convolving a surface geometry with a blur kernel formed using the Laplace-Beltrami operator.

In decision theory, a scoring rule provides evaluation metrics for probabilistic predictions or forecasts. While "regular" loss functions assign a goodness-of-fit score to a predicted value and an observed value, scoring rules assign such a score to a predicted probability distribution and an observed value. On the other hand, a scoring function provides a summary measure for the evaluation of point predictions, i.e. one predicts a property or functional , like the expectation or the median.

In electronics and signal processing, mainly in digital signal processing, a Gaussian filter is a filter whose impulse response is a Gaussian function. Gaussian filters have the properties of having no overshoot to a step function input while minimizing the rise and fall time. This behavior is closely connected to the fact that the Gaussian filter has the minimum possible group delay. A Gaussian filter will have the best combination of suppression of high frequencies while also minimizing spatial spread, being the critical point of the uncertainty principle. These properties are important in areas such as oscilloscopes and digital telecommunication systems.

The topological derivative is, conceptually, a derivative of a shape functional with respect to infinitesimal changes in its topology, such as adding an infinitesimal hole or crack. When used in higher dimensions than one, the term topological gradient is also used to name the first-order term of the topological asymptotic expansion, dealing only with infinitesimal singular domain perturbations. It has applications in shape optimization, topology optimization, image processing and mechanical modeling.

In the theory of stochastic processes, filtering describes the problem of determining the state of a system from an incomplete and potentially noisy set of observations. While originally motivated by problems in engineering, filtering found applications in many fields from signal processing to finance.

A bilateral filter is a non-linear, edge-preserving, and noise-reducing smoothing filter for images. It replaces the intensity of each pixel with a weighted average of intensity values from nearby pixels. This weight can be based on a Gaussian distribution. Crucially, the weights depend not only on Euclidean distance of pixels, but also on the radiometric differences. This preserves sharp edges.

Prototype filters are electronic filter designs that are used as a template to produce a modified filter design for a particular application. They are an example of a nondimensionalised design from which the desired filter can be scaled or transformed. They are most often seen in regard to electronic filters and especially linear analogue passive filters. However, in principle, the method can be applied to any kind of linear filter or signal processing, including mechanical, acoustic and optical filters.

In signal processing, particularly image processing, total variation denoising, also known as total variation regularization or total variation filtering, is a noise removal process (filter). It is based on the principle that signals with excessive and possibly spurious detail have high total variation, that is, the integral of the image gradient magnitude is high. According to this principle, reducing the total variation of the signal—subject to it being a close match to the original signal—removes unwanted detail whilst preserving important details such as edges. The concept was pioneered by L. I. Rudin, S. Osher, and E. Fatemi in 1992 and so is today known as the ROF model.

Foreground detection is one of the major tasks in the field of computer vision and image processing whose aim is to detect changes in image sequences. Background subtraction is any technique which allows an image's foreground to be extracted for further processing.

Generalized filtering is a generic Bayesian filtering scheme for nonlinear state-space models. It is based on a variational principle of least action, formulated in generalized coordinates of motion. Note that "generalized coordinates of motion" are related to—but distinct from—generalized coordinates as used in (multibody) dynamical systems analysis. Generalized filtering furnishes posterior densities over hidden states generating observed data using a generalized gradient descent on variational free energy, under the Laplace assumption. Unlike classical filtering, generalized filtering eschews Markovian assumptions about random fluctuations. Furthermore, it operates online, assimilating data to approximate the posterior density over unknown quantities, without the need for a backward pass. Special cases include variational filtering, dynamic expectation maximization and generalized predictive coding.

Kernel methods are a well-established tool to analyze the relationship between input data and the corresponding output of a function. Kernels encapsulate the properties of functions in a computationally efficient way and allow algorithms to easily swap functions of varying complexity.

In computer science, a suffix automaton is an efficient data structure for representing the substring index of a given string which allows the storage, processing, and retrieval of compressed information about all its substrings. The suffix automaton of a string is the smallest directed acyclic graph with a dedicated initial vertex and a set of "final" vertices, such that paths from the initial vertex to final vertices represent the suffixes of the string.

In image Noise reduction, local pixel grouping is the algorithm to remove noise from images using principal component analysis (PCA).

Quantum image processing (QIMP) is using quantum computing or quantum information processing to create and work with quantum images.

A guided filter is an edge-preserving smoothing image filter. As with a bilateral filter, it can filter out noise or texture while retaining sharp edges.