In CJK (Chinese/Japanese/Korean) computing

The term DBCS traditionally refers to a character encoding where each graphic character is encoded in two bytes.

In an 8-bit code, such as Big-5 or Shift JIS, a character from the DBCS is represented with a lead (first) byte with the most significant bit set (i.e., being greater than seven bits), and paired up with a single-byte character-set (SBCS). For the practical reason of maintaining compatibility with unmodified, off-the-shelf software, the SBCS is associated with half-width characters and the DBCS with full-width characters. In a 7-bit code such as ISO-2022-JP, escape sequences or shift codes are used to switch between the SBCS and DBCS.

Sometimes, the use of the term "DBCS" can imply an underlying structure that does not comply with ISO 2022. For example, "DBCS" can sometimes mean a double-byte encoding that is specifically not Extended Unix Code (EUC).

This original meaning of DBCS is different from what some consider correct usage today. Some insist that these character encodings be properly called multi-byte character sets (MBCS) or variable-width encodings, because character encodings such as EUC-JP, EUC-KR, EUC-TW, GB 18030, and UTF-8 use more than two bytes for some characters, and they support one byte for other characters.

Ambiguity

Some people use DBCS to mean the UTF-16 and UTF-8 encodings, while other people use the term DBCS to mean older (pre-Unicode) character encodings that use more than one byte per character. Shift JIS, GB 2312 and Big5 are a few character encodings that can contain more than one byte per character, but even using the term DBCS for these character encodings is incorrect terminology because these character encodings are really variable-width encodings (as are both UTF-16 and UTF-8). Some IBM mainframes do have true DBCS code pages, which contain only the double byte portion of a multi-byte code page.

If a person uses the term "DBCS enablement" for software internationalization, they are using ambiguous terminology. They either mean they want to write software for East Asian markets using older technology with code pages, or they are planning on using Unicode. Sometimes this term also implies translation into an East Asian language. Usually "Unicode enablement" means internationalizing software by using Unicode, and "DBCS enablement" means using incompatible character encodings that exist between the various countries in East Asia for internationalizing software. Since Unicode, unlike many other character encodings, supports all the major languages in East Asia, it is generally easier to enable and maintain software that uses Unicode. DBCS (non-Unicode) enablement is usually only desired when much older operating systems or applications do not support Unicode.

Character encoding is the process of assigning numbers to graphical characters, especially the written characters of human language, allowing them to be stored, transmitted, and transformed using digital computers. The numerical values that make up a character encoding are known as "code points" and collectively comprise a "code space", a "code page", or a "character map".

While Hypertext Markup Language (HTML) has been in use since 1991, HTML 4.0 from December 1997 was the first standardized version where international characters were given reasonably complete treatment. When an HTML document includes special characters outside the range of seven-bit ASCII, two goals are worth considering: the information's integrity, and universal browser display.

Unicode, formally the Unicode Standard, is an information technology standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems. The standard, which is maintained by the Unicode Consortium, defines 144,697 characters covering 159 modern and historic scripts, as well as symbols, emoji, and non-visual control and formatting codes.

UTF-16 (16-bit Unicode Transformation Format) is a character encoding capable of encoding all 1,112,064 valid character code points of Unicode (in fact this number of code points is dictated by the design of UTF-16). The encoding is variable-length, as code points are encoded with one or two 16-bit code units. UTF-16 arose from an earlier obsolete fixed-width 16-bit encoding, now known as UCS-2 (for 2-byte Universal Character Set), once it became clear that more than 216 (65,536) code points were needed.

Big-5 or Big5 is a Chinese character encoding method used in Taiwan, Hong Kong, and Macau for traditional Chinese characters.

In internationalization, CJK characters is a collective term for the Chinese, Japanese, and Korean languages, all of which include Chinese characters and derivatives in their writing systems, sometimes paired with other scripts. Collectively, the CJK characters often include Hànzì in Chinese, Kanji and Kana in Japanese, Hanja and Hangul in Korean. Rarely, Vietnamese is included, making the abbreviation CJKV, since Vietnamese historically used Chinese characters as well, in which context they were known as Chữ Hán and Chữ Nôm in Vietnamese.

In computing, a code page is a character encoding and as such it is a specific association of a set of printable characters and control characters with unique numbers. Typically each number represents the binary value in a single byte.

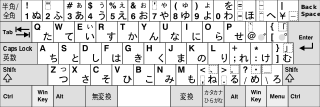

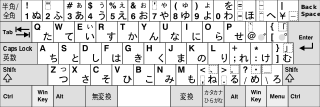

In relation to the Japanese language and computers many adaptation issues arise, some unique to Japanese and others common to languages which have a very large number of characters. The number of characters needed in order to write in English is quite small, and thus it is possible to use only one byte (28=256 possible values) to encode each English character. However, the number of characters in Japanese is many more than 256 and thus cannot be encoded using a single byte - Japanese is thus encoded using two or more bytes, in a so-called "double byte" or "multi-byte" encoding. Problems that arise relate to transliteration and romanization, character encoding, and input of Japanese text.

In computing, JIS encoding refers to several Japanese Industrial Standards for encoding the Japanese language. Strictly speaking, the term means either:

ISO/IEC 2022Information technology—Character code structure and extension techniques, is an ISO/IEC standard in the field of character encoding. Originating in 1971, it was most recently revised in 1994.

Shift JIS is a character encoding for the Japanese language, originally developed by a Japanese company called ASCII Corporation in conjunction with Microsoft and standardized as JIS X 0208 Appendix 1. By February 2021, 0.1% of all web pages used Shift JIS, a decline from 1.3% in July 2014.

Extended Unix Code (EUC) is a multibyte character encoding system used primarily for Japanese, Korean, and simplified Chinese.

A variable-width encoding is a type of character encoding scheme in which codes of differing lengths are used to encode a character set for representation, usually in a computer. Most common variable-width encodings are multibyte encodings, which use varying numbers of bytes (octets) to encode different characters. (Some authors, notably in Microsoft documentation, use the term multibyte character set, which is a misnomer, because representation size is an attribute of the encoding, not of the character set.)

A CCSID is a 16-bit number that represents a particular encoding of a specific code page. For example, Unicode is a code page that has several encoding forms, like UTF-8, UTF-16 and UTF-32, but which may or may not actually be accompanied by a CCSID number to indicate that this encoding is being used.

Unified Hangul Code (UHC), or Extended Wansung, also known under Microsoft Windows as Code Page 949, is the Microsoft Windows code page for the Korean language. It is an extension of Wansung Code to include all 11172 non-partial Hangul syllables present in Johab. This corresponds to the pre-composed syllables available in Unicode 2.0 and later.

JIS X 0201, a Japanese Industrial Standard developed in 1969, was the first Japanese electronic character set to become widely used. It is either a 7-bit encoding or an 8-bit encoding, although the 8-bit form is dominant for modern use. The full name of this standard is 7-bit and 8-bit coded character sets for information interchange (7ビット及び8ビットの情報交換用符号化文字集合).

Half-width kana are katakana characters displayed compressed at half their normal width, instead of the usual square (1:1) aspect ratio. For example, the usual (full-width) form of the katakana ka is カ while the half-width form is カ. Half-width hiragana is not included in Unicode, although it's usable on Web or E-books via CSS's font-feature-settings: "hwid" 1 with Adobe-Japan1-6 based OpenType fonts. Half-width kanji is not usable on modern computers even though it is used in some receipt printers, electric bulletin board or old computers.

In CJK computing, graphic characters are traditionally classed into fullwidth and halfwidth characters. Unlike monospaced fonts, a halfwidth character occupies half the width of a fullwidth character, hence the name.

IBM code page 949 (IBM-949) is a character encoding which has been used by IBM to represent Korean language text on computers. It is a variable-width encoding which represents the characters from the Wansung code defined by the South Korean standard KS X 1001 in a format compatible with EUC-KR, but adds IBM extensions for additional hanja, additional precomposed Hangul syllables, and user-defined characters.

Several mutually incompatible versions of the Extended Binary Coded Decimal Interchange Code (EBCDIC) have been used to represent the Japanese language on computers, including variants defined by Hitachi, Fujitsu, IBM and others. Some are variable-width encodings, employing locking shift codes to switch between single-byte and double-byte modes. Unlike other EBCDIC locales, the lowercase basic Latin letters are often not preserved in their usual locations.