Character encoding is the process of assigning numbers to graphical characters, especially the written characters of human language, allowing them to be stored, transmitted, and transformed using computers. The numerical values that make up a character encoding are known as code points and collectively comprise a code space, a code page, or character map.

While Hypertext Markup Language (HTML) has been in use since 1991, HTML 4.0 from December 1997 was the first standardized version where international characters were given reasonably complete treatment. When an HTML document includes special characters outside the range of seven-bit ASCII, two goals are worth considering: the information's integrity, and universal browser display.

Unicode, formally The Unicode Standard, is a text encoding standard maintained by the Unicode Consortium designed to support the use of text in all of the world's writing systems that can be digitized. Version 16.0 of the standard defines 154998 characters and 168 scripts used in various ordinary, literary, academic, and technical contexts.

Web pages authored using HyperText Markup Language (HTML) may contain multilingual text represented with the Unicode universal character set. Key to the relationship between Unicode and HTML is the relationship between the "document character set", which defines the set of characters that may be present in an HTML document and assigns numbers to them, and the "external character encoding", or "charset", used to encode a given document as a sequence of bytes.

UTF-8 is a character encoding standard used for electronic communication. Defined by the Unicode Standard, the name is derived from Unicode Transformation Format – 8-bit. Almost every webpage is stored in UTF-8.

UTF-16 (16-bit Unicode Transformation Format) is a character encoding method capable of encoding all 1,112,064 valid code points of Unicode. The encoding is variable-length as code points are encoded with one or two 16-bitcode units. UTF-16 arose from an earlier obsolete fixed-width 16-bit encoding now known as UCS-2 (for 2-byte Universal Character Set), once it became clear that more than 216 (65,536) code points were needed, including most emoji and important CJK characters such as for personal and place names.

The byte-order mark (BOM) is a particular usage of the special Unicode character code, U+FEFFZERO WIDTH NO-BREAK SPACE, whose appearance as a magic number at the start of a text stream can signal several things to a program reading the text:

UTF-32 (32-bit Unicode Transformation Format), sometimes called UCS-4, is a fixed-length encoding used to encode Unicode code points that uses exactly 32 bits (four bytes) per code point (but a number of leading bits must be zero as there are far fewer than 232 Unicode code points, needing actually only 21 bits). In contrast, all other Unicode transformation formats are variable-length encodings. Each 32-bit value in UTF-32 represents one Unicode code point and is exactly equal to that code point's numerical value.

Windows-1252 or CP-1252 is a legacy single-byte character encoding that is used by default in Microsoft Windows throughout the Americas, Western Europe, Oceania, and much of Africa.

UTF-7 is an obsolete variable-length character encoding for representing Unicode text using a stream of ASCII characters. It was originally intended to provide a means of encoding Unicode text for use in Internet E-mail messages that was more efficient than the combination of UTF-8 with quoted-printable.

ISO/IEC 8859-9:1999, Information technology — 8-bit single-byte coded graphic character sets — Part 9: Latin alphabet No. 5, is part of the ISO/IEC 8859 series of ASCII-based standard character encodings, first edition published in 1989. It is designated ECMA-128 by Ecma International and TS 5881 as a Turkish standard. It is informally referred to as Latin-5 or Turkish. It was designed to cover the Turkish language, designed as being of more use than the ISO/IEC 8859-3 encoding. It is identical to ISO/IEC 8859-1 except for the replacement of six Icelandic characters with characters unique to the Turkish alphabet. And the uppercase of i is İ; the lowercase of I is ı.

GB 18030 is a Chinese government standard, described as Information Technology — Chinese coded character set and defines the required language and character support necessary for software in China. GB18030 is the registered Internet name for the official character set of the People's Republic of China (PRC) superseding GB2312. As a Unicode Transformation Format, GB18030 supports both simplified and traditional Chinese characters. It is also compatible with legacy encodings including GB/T 2312, CP936, and GBK 1.0.

Shift JIS is a character encoding for the Japanese language, originally developed by the Japanese company ASCII Corporation in conjunction with Microsoft and standardized as JIS X 0208 Appendix 1.

Extended Unix Code (EUC) is a multibyte character encoding system used primarily for Japanese, Korean, and simplified Chinese (characters).

Binary Ordered Compression for Unicode (BOCU) is a MIME compatible Unicode compression scheme. BOCU-1 combines the wide applicability of UTF-8 with the compactness of Standard Compression Scheme for Unicode (SCSU). This Unicode encoding is designed to be useful for compressing short strings, and maintains code point order. BOCU-1 is specified in a Unicode Technical Note.

The Compatibility Encoding Scheme for UTF-16: 8-Bit (CESU-8) is a variant of UTF-8 that is described in Unicode Technical Report #26. A Unicode code point from the Basic Multilingual Plane (BMP), i.e. a code point in the range U+0000 to U+FFFF, is encoded in the same way as in UTF-8. A Unicode supplementary character, i.e. a code point in the range U+10000 to U+10FFFF, is first represented as a surrogate pair, like in UTF-16, and then each surrogate code point is encoded in UTF-8. Therefore, CESU-8 needs six bytes for each Unicode supplementary character while UTF-8 needs only four. Though not specified in the technical report, unpaired surrogates are also encoded as 3 bytes each, and CESU-8 is exactly the same as applying an older UCS-2 to UTF-8 converter to UTF-16 data.

This article compares Unicode encodings in two types of environments: 8-bit clean environments, and environments that forbid the use of byte values with the high bit set. Originally, such prohibitions allowed for links that used only seven data bits, but they remain in some standards and so some standard-conforming software must generate messages that comply with the restrictions. The Standard Compression Scheme for Unicode and the Binary Ordered Compression for Unicode are excluded from the comparison tables because it is difficult to simply quantify their size.

Unified Hangul Code (UHC), or Extended Wansung, also known under Microsoft Windows as Code Page 949, is the Microsoft Windows code page for the Korean language. It is an extension of Wansung Code to include all 11172 non-partial Hangul syllables present in Johab. This corresponds to the pre-composed syllables available in Unicode 2.0 and later.

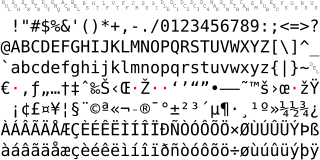

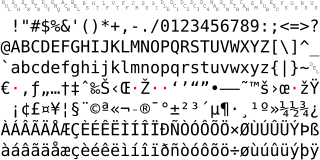

The Basic Latin Unicode block, sometimes informally called C0 Controls and Basic Latin, is the first block of the Unicode standard, and the only block which is encoded in one byte in UTF-8. The block contains all the letters and control codes of the ASCII encoding. It ranges from U+0000 to U+007F, contains 128 characters and includes the C0 controls, ASCII punctuation and symbols, ASCII digits, both the uppercase and lowercase of the English alphabet and a control character.

Microsoft Windows code page 932, also called Windows-31J amongst other names, is the Microsoft Windows code page for the Japanese language, which is an extended variant of the Shift JIS Japanese character encoding. It contains standard 7-bit ASCII codes, and Japanese characters are indicated by the high bit of the first byte being set to 1. Some code points in this page require a second byte, so characters use either 8 or 16 bits for encoding.