MPEG-2 is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard.

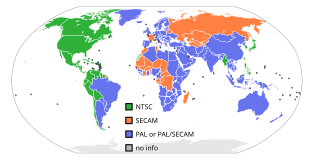

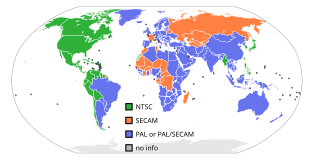

NTSC is the first American standard for analog television, published and adopted in 1941. In 1961, it was assigned the designation System M. It is also known as EIA standard 170.

Interlaced video is a technique for doubling the perceived frame rate of a video display without consuming extra bandwidth. The interlaced signal contains two fields of a video frame captured consecutively. This enhances the viewer's perception of motion and reduces flicker by taking advantage of the characteristics of the human visual system.
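As a rough illustration of how a frame relates to its two fields, the sketch below splits a frame into its even-numbered and odd-numbered scan lines. The function name `split_fields` and the list-of-rows representation are assumptions made for this example. In a real interlaced signal the two fields are captured at different instants, which this static split does not model.

```python
def split_fields(frame):
    """Split a frame (a list of scan lines) into two fields.

    Hypothetical helper for illustration: the top field holds lines
    0, 2, 4, ... and the bottom field holds lines 1, 3, 5, ...
    """
    top_field = frame[0::2]
    bottom_field = frame[1::2]
    return top_field, bottom_field


# A toy six-line "frame"; each string stands in for one scan line.
frame = [f"line{i}" for i in range(6)]
top, bottom = split_fields(frame)
```

Each field carries half the lines of the frame, which is why sending fields alternately does not increase bandwidth.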

Telecine is the process of transferring film into video and is performed in a color suite. The term is also used to refer to the equipment used in this post-production process.

Advanced Television Systems Committee (ATSC) standards are an international set of standards for broadcast and digital television transmission over terrestrial, cable and satellite networks. They are largely a replacement for the analog NTSC standard and, like that standard, are used mostly in the United States, Mexico, Canada, South Korea and Trinidad & Tobago. Several former NTSC users, such as Japan, did not adopt ATSC during their digital television transition, choosing instead other systems such as ISDB, developed by Japan, or DVB, developed in Europe.

Broadcast television systems are the encoding or formatting systems for the transmission and reception of terrestrial television signals.

The refresh rate, also known as vertical refresh rate or vertical scan rate in terminology originating with cathode-ray tubes (CRTs), is the number of times per second that a raster-based display device displays a new image. This is independent of frame rate, which describes how many images are stored or generated every second by the device driving the display. On CRT displays, higher refresh rates produce less flickering, thereby reducing eye strain. In other technologies such as liquid-crystal displays, the refresh rate affects only how often the image can potentially be updated.

In video technology, 24p refers to a video format that operates at 24 frames per second frame rate with progressive scanning. Originally, 24p was used in the non-linear editing of film-originated material. Today, 24p formats are being increasingly used for aesthetic reasons in image acquisition, delivering film-like motion characteristics. Some vendors advertise 24p products as a cheaper alternative to film acquisition.

Deinterlacing is the process of converting interlaced video into a non-interlaced or progressive form. Interlaced video signals are commonly found in analog television, VHS, Laserdisc, digital television (HDTV) when in the 1080i format, some DVD titles, and a smaller number of Blu-ray discs.
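Two simple deinterlacing approaches, commonly called weave and bob, can be sketched as follows. These are illustrative only and are not named in the text above: the function names and the list-of-rows field representation are assumptions, and practical deinterlacers typically use more elaborate, motion-aware methods.

```python
def weave(top_field, bottom_field):
    """Interleave two fields into one progressive frame.

    Works well for static content; moving content shows combing
    because the two fields were captured at different times.
    """
    frame = []
    for top_line, bottom_line in zip(top_field, bottom_field):
        frame.append(top_line)
        frame.append(bottom_line)
    return frame


def bob(field):
    """Build a full-height frame from a single field by line doubling."""
    frame = []
    for line in field:
        frame.append(line)
        frame.append(line)
    return frame


woven = weave(["a", "c"], ["b", "d"])
doubled = bob(["a", "c"])
```

Weave keeps full vertical detail at the cost of combing on motion, while bob avoids combing but halves the effective vertical resolution.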

720p is a progressive HD signal format with 720 horizontal lines and 1280 columns and an aspect ratio (AR) of 16:9, normally known as widescreen HD (1.78:1). All major HD broadcasting standards include a 720p format, which has a resolution of 1280×720.

1080i is a term used in high-definition television (HDTV) and video display technology. It means a video mode with 1080 lines of vertical resolution; the "i" stands for the interlaced scanning method. This format was once a standard in HDTV and was particularly used for broadcast television, because it can deliver high-resolution images without needing excessive bandwidth. This format is used in the SMPTE 292M standard.

576i is a standard-definition digital video mode, originally used for digitizing 625 line analogue television in most countries of the world where the utility frequency for electric power distribution is 50 Hz. Because of its close association with the legacy colour encoding systems, it is often referred to as PAL, PAL/SECAM or SECAM when compared to its 60 Hz NTSC-colour-encoded counterpart, 480i.

High-definition video is video of higher resolution and quality than standard-definition. While there is no standardized meaning for high-definition, generally any video image with considerably more than 480 vertical scan lines or 576 vertical lines (Europe) is considered high-definition. 480 scan lines is generally the minimum, even though the majority of systems greatly exceed that. Images of standard resolution captured at rates faster than normal by a high-speed camera may be considered high-definition in some contexts. Some television series shot on high-definition video are made to look as if they have been shot on film, a technique which is often known as filmizing.

1080p is a set of HDTV high-definition video modes characterized by 1,920 pixels displayed across the screen horizontally and 1,080 pixels down the screen vertically; the p stands for progressive scan, i.e. non-interlaced. The term usually assumes a widescreen aspect ratio of 16:9, implying a resolution of 2.1 megapixels. It is often marketed as Full HD or FHD, to contrast 1080p with 720p resolution screens. Although 1080p is sometimes referred to as 2K resolution, other sources differentiate between 1080p and (true) 2K resolution.
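The 2.1 megapixel figure and the 16:9 aspect ratio follow directly from the pixel dimensions. A minimal sketch of the arithmetic, with helper names chosen for this example:

```python
from math import gcd


def megapixels(width, height):
    """Total pixel count in millions."""
    return width * height / 1_000_000


def aspect_ratio(width, height):
    """Reduce width:height to lowest terms."""
    divisor = gcd(width, height)
    return width // divisor, height // divisor


full_hd_mp = megapixels(1920, 1080)       # 2.0736, about 2.1 megapixels
full_hd_ar = aspect_ratio(1920, 1080)     # (16, 9)
hd_ar = aspect_ratio(1280, 720)           # (16, 9)
```

The same reduction applied to 1280×720 also gives 16:9, so 720p and 1080p share the widescreen aspect ratio.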

Progressive segmented Frame is a scheme designed to acquire, store, modify, and distribute progressive scan video using interlaced equipment.

Three-two pull down is a term used in filmmaking and television production for the post-production process of transferring film to video.
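The name refers to the cadence in which film frames are spread over video fields: successive frames are held for alternately three and two fields, so four film frames fill ten fields and 24 frames per second map onto a nominal 60 fields per second. The sketch below illustrates that cadence only. The function name `pulldown` and the choice to start with a three-field frame are assumptions made for this example, and actual equipment may start the cadence on the two-field frame instead.

```python
from itertools import cycle


def pulldown(film_frames, cadence=(3, 2)):
    """Repeat each film frame for 3, 2, 3, 2, ... video fields.

    Illustrative only: it ignores field parity (top/bottom) and
    simply lists which film frame each successive field shows.
    """
    fields = []
    for frame, repeats in zip(film_frames, cycle(cadence)):
        fields.extend([frame] * repeats)
    return fields


fields = pulldown(["A", "B", "C", "D"])
one_second = pulldown(list(range(24)))
```

Because some frames are shown for three fields and others for two, motion in the resulting video is slightly uneven compared with the original film.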

Television standards conversion is the process of changing a television transmission or recording from one video system to another. Converting video between different numbers of lines, frame rates, and color models is a complex technical problem. However, the international exchange of television programming makes standards conversion necessary so that video may be viewed in another nation with a differing standard. Typically, video is fed into a video standards converter, which produces a copy according to a different video standard. One of the most common conversions is between the NTSC and PAL standards.

Rec. 709, also known as Rec.709, BT.709, and ITU 709, is a standard developed by ITU-R for image encoding and signal characteristics of high-definition television.

High-definition television (HDTV) describes a television or video system which provides a substantially higher image resolution than the previous generation of technologies. The term has been used since at least 1933; in more recent times, it refers to the generation following standard-definition television (SDTV). It is the standard video format used in most broadcasts: terrestrial broadcast television, cable television, and satellite television.