Related Research Articles

In programming and information security, a buffer overflow or buffer overrun is an anomaly whereby a program writes data to a buffer beyond the buffer's allocated memory, overwriting adjacent memory locations.

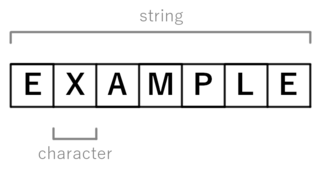

In computer programming, a string is traditionally a sequence of characters, either as a literal constant or as some kind of variable. The latter may allow its elements to be mutated and the length changed, or it may be fixed. A string is generally considered as a data type and is often implemented as an array data structure of bytes that stores a sequence of elements, typically characters, using some character encoding. String may also denote more general arrays or other sequence data types and structures.

UTF-8 is a variable-length character encoding standard used for electronic communication. Defined by the Unicode Standard, the name is derived from Unicode Transformation Format – 8-bit.

UTF-16 (16-bit Unicode Transformation Format) is a character encoding capable of encoding all 1,112,064 valid code points of Unicode (in fact this number of code points is dictated by the design of UTF-16). The encoding is variable-length, as code points are encoded with one or two 16-bit code units. UTF-16 arose from an earlier obsolete fixed-width 16-bit encoding, now known as UCS-2 (for 2-byte Universal Character Set), once it became clear that more than 216 (65,536) code points were needed.

UTF-32 (32-bit Unicode Transformation Format) is a fixed-length encoding used to encode Unicode code points that uses exactly 32 bits (four bytes) per code point (but a number of leading bits must be zero as there are far fewer than 232 Unicode code points, needing actually only 21 bits). UTF-32 is a fixed-length encoding, in contrast to all other Unicode transformation formats, which are variable-length encodings. Each 32-bit value in UTF-32 represents one Unicode code point and is exactly equal to that code point's numerical value.

x86 assembly language is the name for the family of assembly languages which provide some level of backward compatibility with CPUs back to the Intel 8008 microprocessor, which was launched in April 1972. It is used to produce object code for the x86 class of processors.

In hacking, a shellcode is a small piece of code used as the payload in the exploitation of a software vulnerability. It is called "shellcode" because it typically starts a command shell from which the attacker can control the compromised machine, but any piece of code that performs a similar task can be called shellcode. Because the function of a payload is not limited to merely spawning a shell, some have suggested that the name shellcode is insufficient. However, attempts at replacing the term have not gained wide acceptance. Shellcode is commonly written in machine code.

The null character is a control character with the value zero. It is present in many character sets, including those defined by the Baudot and ITA2 codes, ISO/IEC 646, the C0 control code, the Universal Coded Character Set, and EBCDIC. It is available in nearly all mainstream programming languages. It is often abbreviated as NUL. In 8-bit codes, it is known as a null byte.

The syntax of the C programming language is the set of rules governing writing of software in C. It is designed to allow for programs that are extremely terse, have a close relationship with the resulting object code, and yet provide relatively high-level data abstraction. C was the first widely successful high-level language for portable operating-system development.

In formal language theory, the empty string, or empty word, is the unique string of length zero.

A wide character is a computer character datatype that generally has a size greater than the traditional 8-bit character. The increased datatype size allows for the use of larger coded character sets.

This article compares Unicode encodings. Two situations are considered: 8-bit-clean environments, and environments that forbid use of byte values that have the high bit set. Originally such prohibitions were to allow for links that used only seven data bits, but they remain in some standards and so some standard-conforming software must generate messages that comply with the restrictions. Standard Compression Scheme for Unicode and Binary Ordered Compression for Unicode are excluded from the comparison tables because it is difficult to simply quantify their size.

CD-Text is an extension of the Red Book Compact Disc specifications standard for audio CDs. It allows storage of additional information on a standards-compliant audio CD.

The C++ programming language has support for string handling, mostly implemented in its standard library. The language standard specifies several string types, some inherited from C, some designed to make use of the language's features, such as classes and RAII. The most-used of these is std::string.

In computer programming, a netstring is a formatting method for byte strings that uses a declarative notation to indicate the size of the string.

bcrypt is a password-hashing function designed by Niels Provos and David Mazières, based on the Blowfish cipher and presented at USENIX in 1999. Besides incorporating a salt to protect against rainbow table attacks, bcrypt is an adaptive function: over time, the iteration count can be increased to make it slower, so it remains resistant to brute-force search attacks even with increasing computation power.

Escape sequences are used in the programming languages C and C++, and their design was copied in many other languages such as Java, PHP, C#, etc. An escape sequence is a sequence of characters that does not represent itself when used inside a character or string literal, but is translated into another character or a sequence of characters that may be difficult or impossible to represent directly.

The C programming language has a set of functions implementing operations on strings in its standard library. Various operations, such as copying, concatenation, tokenization and searching are supported. For character strings, the standard library uses the convention that strings are null-terminated: a string of n characters is represented as an array of n + 1 elements, the last of which is a "NUL character" with numeric value 0.

Universal Binary JSON (UBJSON) is a computer data interchange format. It is a binary form directly imitating JSON, but requiring fewer bytes of data. It aims to achieve the generality of JSON, combined with being much easier to process than JSON.

Although C++ is one of the most widespread programming languages, many prominent software engineers criticize C++ for being overly complex and fundamentally flawed. Among the critics have been: Robert Pike, Joshua Bloch, Linus Torvalds, Donald Knuth, Richard Stallman, and Ken Thompson. C++ was widely adopted and implemented as a systems language through most of its existence, it has been used to build many pieces of very important software.

References

- ↑ "Chapter 15 - MIPS Assembly Language" (PDF). Carleton University. Retrieved 9 October 2023.

- ↑ Ritchie, Dennis M. (April 1993). The development of the C language. Second History of Programming Languages conference. Cambridge, MA.

- ↑ Ritchie, Dennis M. (1996). "The development of the C language". In Bergin, Jr., Thomas J.; Gibson, Jr., Richard G. (eds.). History of Programming Languages (2 ed.). New York: ACM Press. ISBN 0-201-89502-1 – via Addison-Wesley (Reading, Mass).

- ↑ IBM z/Architecture Principles of Operation

- ↑ Kamp, Poul-Henning (25 July 2011), "The Most Expensive One-byte Mistake", ACM Queue, 9 (7): 40–43, doi: 10.1145/2001562.2010365 , ISSN 1542-7730, S2CID 30282393

- ↑ Rain Forest Puppy (9 September 1999). "Perl CGI problems". Phrack Magazine. artofhacking.com. 9 (55): 7. Retrieved 3 January 2016.

- ↑ "Null byte injection on PHP?".

- ↑ "UTF-8, a transformation format of ISO 10646" . Retrieved 19 September 2013.

- ↑ "Unicode/UTF-8-character table" . Retrieved 13 September 2013.

- ↑ Kuhn, Markus. "UTF-8 and Unicode FAQ" . Retrieved 13 September 2013.