Standard definition

The standard definition of a reference range for a particular measurement is the interval within which 95% of the values of a reference population fall, such that 2.5% of values are below the lower limit of the interval and 2.5% are above its upper limit, whatever the distribution of these values. [1]

Reference ranges that are given by this definition are sometimes referred to as standard ranges.

Since a range is a defined statistical value (Range (statistics)) that describes the interval between the smallest and largest values, many, including the International Federation of Clinical Chemistry, prefer the expression reference interval to reference range. [2]

Regarding the target population, if not otherwise specified, a standard reference range generally denotes the one in healthy individuals, or in individuals without any known condition that directly affects the ranges being established. These are likewise established using reference groups from the healthy population, and are sometimes termed normal ranges or normal values (and sometimes "usual" ranges/values). However, the term normal may not be appropriate, as not everyone outside the interval is abnormal, and people who have a particular condition may still fall within this interval.

However, reference ranges may also be established by taking samples from the whole population, with or without diseases and conditions. In some cases, diseased individuals are taken as the population, establishing reference ranges among those having a disease or condition. Preferably, there should be specific reference ranges for each subgroup of the population that has any factor that affects the measurement, for example specific ranges for each sex, age group, race or any other general determinant.

Establishment methods

Methods for establishing reference ranges can be based on assuming a normal distribution or a log-normal distribution, or can work directly from percentages of interest, as detailed respectively in the following sections. When establishing reference ranges from bilateral organs (e.g., vision or hearing), both results from the same individual can be used, although intra-subject correlation must be taken into account. [3]

Normal distribution

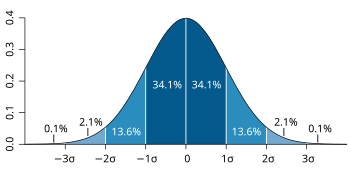

The 95% interval is often estimated by assuming a normal distribution of the measured parameter, in which case it can be defined as the interval limited by 1.96 [4] (often rounded up to 2) population standard deviations on either side of the population mean (also called the expected value). However, in the real world, neither the population mean nor the population standard deviation is known. Both need to be estimated from a sample, whose size can be designated n. The population standard deviation is estimated by the sample standard deviation and the population mean is estimated by the sample mean (also called mean or arithmetic mean). To account for these estimations, the 95% prediction interval (95% PI) is calculated as:

- $\text{95\% PI} = \text{mean} \pm t_{0.975,\,n-1} \cdot \sqrt{\frac{n+1}{n}} \cdot \text{sd}$,

where $t_{0.975,\,n-1}$ is the 97.5% quantile of a Student's t-distribution with n−1 degrees of freedom.

When the sample size is large (n≥30), this t quantile is approximately 2, and it approaches 1.96 as n grows.

This method is often acceptably accurate if the standard deviation, as compared to the mean, is not very large. A more accurate method is to perform the calculations on logarithmized values, as described in a separate section later.

The following example of this (not logarithmized) method is based on values of fasting plasma glucose taken from a reference group of 12 subjects: [5]

| | Fasting plasma glucose (FPG) in mmol/L | Deviation from mean m | Squared deviation from mean m |
|---|---|---|---|
| Subject 1 | 5.5 | 0.17 | 0.029 |
| Subject 2 | 5.2 | -0.13 | 0.017 |
| Subject 3 | 5.2 | -0.13 | 0.017 |
| Subject 4 | 5.8 | 0.47 | 0.221 |
| Subject 5 | 5.6 | 0.27 | 0.073 |
| Subject 6 | 4.6 | -0.73 | 0.533 |
| Subject 7 | 5.6 | 0.27 | 0.073 |
| Subject 8 | 5.9 | 0.57 | 0.325 |
| Subject 9 | 4.7 | -0.63 | 0.397 |
| Subject 10 | 5.0 | -0.33 | 0.109 |
| Subject 11 | 5.7 | 0.37 | 0.137 |
| Subject 12 | 5.2 | -0.13 | 0.017 |
| | Mean = 5.33 (m), n = 12 | Mean = 0.00 | Sum/(n−1) = 1.95/11 = 0.18; √0.18 = 0.42 = standard deviation (s.d.) |

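As can be read from, for example, a table of selected values of Student's t-distribution, the 97.5% percentile with (12−1) degrees of freedom corresponds to $t_{0.975,\,11} = 2.20$.

Subsequently, the lower and upper limits of the standard reference range are calculated as:

- $\text{Lower limit} = m - t_{0.975,\,11} \cdot \sqrt{\frac{n+1}{n}} \cdot \text{s.d.} = 5.33 - 2.20 \cdot \sqrt{\frac{13}{12}} \cdot 0.42 \approx 4.4$

- $\text{Upper limit} = m + t_{0.975,\,11} \cdot \sqrt{\frac{n+1}{n}} \cdot \text{s.d.} = 5.33 + 2.20 \cdot \sqrt{\frac{13}{12}} \cdot 0.42 \approx 6.3$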

Thus, the standard reference range for this example is estimated to be 4.4 to 6.3 mmol/L.
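The calculation above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `normal_prediction_interval` is chosen here for illustration, and the t quantile is hard-coded from a t-table (an assumption, since the standard library does not provide Student's t quantiles).

```python
import math
import statistics

# Fasting plasma glucose values (mmol/L) of the 12 subjects in the example.
fpg = [5.5, 5.2, 5.2, 5.8, 5.6, 4.6, 5.6, 5.9, 4.7, 5.0, 5.7, 5.2]

# Assumption: 97.5% quantile of Student's t with 11 degrees of freedom,
# copied from a t-table rather than computed.
T_975_DF11 = 2.201


def normal_prediction_interval(values, t_quantile):
    """Return (lower, upper) of mean ± t * sqrt((n + 1) / n) * sd."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (divides by n - 1)
    half_width = t_quantile * math.sqrt((n + 1) / n) * sd
    return mean - half_width, mean + half_width


lower, upper = normal_prediction_interval(fpg, T_975_DF11)
print(f"{lower:.1f} to {upper:.1f} mmol/L")
```

For a different sample size, the t quantile would have to be looked up again for n−1 degrees of freedom.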

Confidence interval of limit

The 90% confidence interval of a standard reference range limit as estimated assuming a normal distribution can be calculated by: [6]

- Lower limit of the confidence interval = percentile limit - 2.81 × SD⁄√n

- Upper limit of the confidence interval = percentile limit + 2.81 × SD⁄√n,

where SD is the standard deviation, and n is the number of samples.

Taking the example from the previous section, the number of samples is 12 and the standard deviation is 0.42 mmol/L, resulting in:

- Lower limit of the confidence interval of the lower limit of the standard reference range = 4.4 - 2.81 × 0.42⁄√12 ≈ 4.1

- Upper limit of the confidence interval of the lower limit of the standard reference range = 4.4 + 2.81 × 0.42⁄√12 ≈ 4.7

Thus, the lower limit of the reference range can be written as 4.4 (90% CI 4.1–4.7) mmol/L.

Likewise, with similar calculations, the upper limit of the reference range can be written as 6.3 (90% CI 6.0–6.6) mmol/L.
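As a sketch of the arithmetic for both limits, the following Python snippet applies the formula given above. The function name `limit_confidence_interval` is illustrative only; the inputs are the rounded limits, standard deviation and sample size from the example.

```python
import math


def limit_confidence_interval(limit, sd, n, factor=2.81):
    """90% confidence interval of a reference limit: limit ± factor * sd / sqrt(n)."""
    margin = factor * sd / math.sqrt(n)
    return limit - margin, limit + margin


# Rounded limits, standard deviation and sample size from the example.
lower_ci = limit_confidence_interval(4.4, 0.42, 12)
upper_ci = limit_confidence_interval(6.3, 0.42, 12)

print([round(x, 1) for x in lower_ci])  # confidence interval of the lower limit
print([round(x, 1) for x in upper_ci])  # confidence interval of the upper limit
```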

These confidence intervals reflect random error, but do not compensate for systematic error, which in this case can arise from, for example, the reference group not having fasted long enough before blood sampling.

As a comparison, actual reference ranges used clinically for fasting plasma glucose are estimated to have a lower limit of approximately 3.8 [7] to 4.0, [8] and an upper limit of approximately 6.0 [8] to 6.1. [9]

Log-normal distribution

In reality, biological parameters tend to have a log-normal distribution, [10] rather than the normal distribution or Gaussian distribution.

An explanation for this log-normal distribution of biological parameters is that a sample with half the value of the mean or median tends to be almost as probable as a sample with twice the value of the mean or median. Also, only a log-normal distribution can account for the fact that almost all biological parameters cannot be negative (at least when measured on absolute scales): there is no definite limit to the size of outliers (extreme values) on the high side, but values can never be less than zero, which results in positive skewness.

As shown in the diagram at right, this phenomenon has a relatively small effect if the standard deviation (as compared to the mean) is relatively small, as the log-normal distribution then appears similar to a normal distribution. Thus, the normal distribution may be more appropriate to use with small standard deviations for convenience, and the log-normal distribution with large standard deviations.

In a log-normal distribution, the geometric standard deviations and geometric mean more accurately estimate the 95% prediction interval than their arithmetic counterparts.

Necessity

Reference ranges for substances that are usually within relatively narrow limits (coefficient of variation less than 0.213, as detailed below) such as electrolytes can be estimated by assuming normal distribution, whereas reference ranges for those that vary significantly (coefficient of variation generally over 0.213) such as most hormones [11] are more accurately established by log-normal distribution.

The necessity to establish a reference range by log-normal distribution rather than normal distribution can be regarded as depending on how much difference it would make to not do so, which can be described as the ratio:

- $\text{Difference ratio} = \dfrac{|\text{Limit}_{\text{log-normal}} - \text{Limit}_{\text{normal}}|}{\text{Limit}_{\text{log-normal}}}$

where:

- $\text{Limit}_{\text{log-normal}}$ is the (lower or upper) limit as estimated by assuming log-normal distribution

- $\text{Limit}_{\text{normal}}$ is the (lower or upper) limit as estimated by assuming normal distribution.

This difference can be put solely in relation to the coefficient of variation, as in the diagram at right, where:

- Coefficient of variation = s.d./m

where:

- s.d. is the standard deviation

- m is the arithmetic mean

In practice, it can be regarded as necessary to use the establishment methods of a log-normal distribution if the difference ratio exceeds 0.1, meaning that a (lower or upper) limit estimated from an assumed normal distribution would differ by more than 10% from the corresponding limit estimated from a (more accurate) log-normal distribution. As seen in the diagram, a difference ratio of 0.1 is reached for the lower limit at a coefficient of variation of 0.213 (or 21.3%), and for the upper limit at a coefficient of variation of 0.413 (41.3%). The lower limit is more affected by an increasing coefficient of variation, and its "critical" coefficient of variation of 0.213 corresponds to a ratio of (upper limit)/(lower limit) of 2.43. As a rule of thumb, if the upper limit is more than 2.4 times the lower limit when estimated by assuming a normal distribution, the calculations should be repeated assuming a log-normal distribution.

Taking the example from previous section, the standard deviation (s.d.) is estimated at 0.42 and the arithmetic mean (m) is estimated at 5.33. Thus the coefficient of variation is 0.079. This is less than both 0.213 and 0.413, and thus both the lower and upper limit of fasting blood glucose can most likely be estimated by assuming normal distribution. More specifically, the coefficient of variation of 0.079 corresponds to a difference ratio of 0.01 (1%) for the lower limit and 0.007 (0.7%) for the upper limit.
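The relation between the coefficient of variation and the difference ratio can be explored with a short Python sketch. It rests on assumptions not spelled out in the text: both limits are derived from the same arithmetic mean and standard deviation, the large-sample factor 1.96 is used, and the log-normal parameters are obtained from the standard moment relations. Under these assumptions the sketch reproduces the thresholds quoted above to within rounding; the function name `difference_ratio` is illustrative.

```python
import math

Z = 1.96  # assumed large-sample factor for a 95% interval


def difference_ratio(cv, side):
    """Difference ratio for the 'lower' or 'upper' limit at a given coefficient of variation.

    The arithmetic mean is scaled to 1, which does not change the ratio.
    """
    sign = -1.0 if side == "lower" else 1.0
    limit_normal = 1.0 + sign * Z * cv
    sigma_log = math.sqrt(math.log(1.0 + cv ** 2))
    mu_log = -sigma_log ** 2 / 2.0  # ln(mean) is 0 because the mean is scaled to 1
    limit_lognormal = math.exp(mu_log + sign * Z * sigma_log)
    return abs(limit_lognormal - limit_normal) / limit_lognormal


cv = 0.42 / 5.33  # coefficient of variation of the fasting glucose example
print(round(cv, 3))
print(round(difference_ratio(cv, "lower"), 3), round(difference_ratio(cv, "upper"), 3))
print(round(difference_ratio(0.213, "lower"), 2), round(difference_ratio(0.413, "upper"), 2))
```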

From logarithmized sample values

A method to estimate the reference range for a parameter with log-normal distribution is to logarithmize all the measurements with an arbitrary base (for example e), derive the mean and standard deviation of these logarithms, determine the logarithms located (for a 95% prediction interval) 1.96 standard deviations below and above that mean, and subsequently exponentiate using those two logarithms as exponents and using the same base as was used in logarithmizing, with the two resultant values being the lower and upper limit of the 95% prediction interval.

The following example of this method is based on the same values of fasting plasma glucose as used in the previous section, using e as a base: [5]

| | Fasting plasma glucose (FPG) in mmol/L | loge(FPG) | loge(FPG) deviation from mean μlog | Squared deviation from mean |
|---|---|---|---|---|
| Subject 1 | 5.5 | 1.70 | 0.029 | 0.000841 |
| Subject 2 | 5.2 | 1.65 | -0.021 | 0.000441 |
| Subject 3 | 5.2 | 1.65 | -0.021 | 0.000441 |
| Subject 4 | 5.8 | 1.76 | 0.089 | 0.007921 |
| Subject 5 | 5.6 | 1.72 | 0.049 | 0.002401 |
| Subject 6 | 4.6 | 1.53 | -0.141 | 0.019881 |
| Subject 7 | 5.6 | 1.72 | 0.049 | 0.002401 |
| Subject 8 | 5.9 | 1.77 | 0.099 | 0.009801 |
| Subject 9 | 4.7 | 1.55 | -0.121 | 0.014641 |
| Subject 10 | 5.0 | 1.61 | -0.061 | 0.003721 |
| Subject 11 | 5.7 | 1.74 | 0.069 | 0.004761 |
| Subject 12 | 5.2 | 1.65 | -0.021 | 0.000441 |
| | Mean: 5.33 (m) | Mean: 1.67 (μlog) | | Sum/(n−1): 0.068/11 = 0.0062; √0.0062 = 0.079 = standard deviation of loge(FPG) (σlog) |

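Subsequently, the still logarithmized lower limit of the reference range is calculated, using the same t-based factor as for the normal distribution and σlog = √0.0062 ≈ 0.079, as:

- $\ln(\text{lower limit}) = \mu_{\log} - t_{0.975,\,n-1} \cdot \sqrt{\frac{n+1}{n}} \cdot \sigma_{\log} = 1.67 - 2.20 \cdot \sqrt{\frac{13}{12}} \cdot 0.079 \approx 1.49$

and the upper limit of the reference range as:

- $\ln(\text{upper limit}) = \mu_{\log} + t_{0.975,\,n-1} \cdot \sqrt{\frac{n+1}{n}} \cdot \sigma_{\log} = 1.67 + 2.20 \cdot \sqrt{\frac{13}{12}} \cdot 0.079 \approx 1.85$

Conversion back to non-logarithmized values is subsequently performed as:

- $\text{Lower limit} = e^{1.49} \approx 4.4$

- $\text{Upper limit} = e^{1.85} \approx 6.4$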

Thus, the standard reference range for this example is estimated to be 4.4 to 6.4 mmol/L.
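The logarithmize, estimate and exponentiate steps can be sketched in Python as follows. As in the normal-distribution example, the t quantile for 11 degrees of freedom is hard-coded from a t-table (an assumption), and the function name `lognormal_prediction_interval` is illustrative.

```python
import math
import statistics

# Fasting plasma glucose values (mmol/L) of the 12 subjects in the example.
fpg = [5.5, 5.2, 5.2, 5.8, 5.6, 4.6, 5.6, 5.9, 4.7, 5.0, 5.7, 5.2]

# Assumption: 97.5% quantile of Student's t with 11 degrees of freedom, from a t-table.
T_975_DF11 = 2.201


def lognormal_prediction_interval(values, t_quantile):
    """95% prediction interval computed on natural logarithms, then exponentiated."""
    logs = [math.log(v) for v in values]
    n = len(logs)
    mu_log = statistics.mean(logs)
    sigma_log = statistics.stdev(logs)  # sample s.d. of the logarithms
    half_width = t_quantile * math.sqrt((n + 1) / n) * sigma_log
    return math.exp(mu_log - half_width), math.exp(mu_log + half_width)


lower, upper = lognormal_prediction_interval(fpg, T_975_DF11)
print(f"{lower:.1f} to {upper:.1f} mmol/L")
```

Because the code keeps full precision for the logarithms, its intermediate standard deviation (about 0.080) differs slightly from the table's 0.079, which is based on logarithms rounded to two decimals; the rounded limits are the same.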

From arithmetic mean and variance

An alternative method of establishing a reference range with the assumption of log-normal distribution is to use the arithmetic mean and standard deviation. This is somewhat more tedious to perform, but may be useful when a study presents only the arithmetic mean and standard deviation and leaves out the source data. If the log-normal assumption is more appropriate than the original assumption of normal distribution, the arithmetic mean and standard deviation may then be the only available parameters from which to determine the reference range.

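By assuming that the expected value can represent the arithmetic mean in this case, the parameters μlog and σlog can be estimated from the arithmetic mean (m) and standard deviation (s.d.) as:

- $\sigma_{\log} = \sqrt{\ln\left(1 + \left(\frac{\text{s.d.}}{m}\right)^{2}\right)}$

- $\mu_{\log} = \ln(m) - \frac{\sigma_{\log}^{2}}{2}$

For the example reference group from the previous section:

- $\sigma_{\log} = \sqrt{\ln\left(1 + \left(\frac{0.42}{5.33}\right)^{2}\right)} \approx 0.079$

- $\mu_{\log} = \ln(5.33) - \frac{0.079^{2}}{2} \approx 1.67$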

Subsequently, the logarithmized, and later non-logarithmized, lower and upper limit are calculated just as by logarithmized sample values.
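A minimal Python sketch of this conversion is given below. It uses the standard moment relations of the log-normal distribution; the function name `lognormal_params_from_moments` is illustrative.

```python
import math


def lognormal_params_from_moments(m, sd):
    """Estimate (mu_log, sigma_log) from an arithmetic mean and standard deviation."""
    sigma_log = math.sqrt(math.log(1.0 + (sd / m) ** 2))
    mu_log = math.log(m) - sigma_log ** 2 / 2.0
    return mu_log, sigma_log


# Arithmetic mean and standard deviation of the fasting glucose example.
mu_log, sigma_log = lognormal_params_from_moments(5.33, 0.42)
print(round(mu_log, 2), round(sigma_log, 3))
```

The resulting values agree with those obtained from the logarithmized sample values, so the limits follow in the same way.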

Directly from percentages of interest

Reference ranges can also be established directly from the 2.5th and 97.5th percentile of the measurements in the reference group. For example, if the reference group consists of 200 people, and counting from the measurement with lowest value to highest, the lower limit of the reference range would correspond to the 5th measurement and the upper limit would correspond to the 195th measurement.

This method can be used even when measurement values do not appear to conform conveniently to any form of normal distribution or other function.

However, the reference range limits as estimated in this way have higher variance, and therefore less reliability, than those estimated by assuming a normal or log-normal distribution (when such is applicable), because the latter acquire statistical power from the measurements of the whole reference group rather than just the measurements at the 2.5th and 97.5th percentiles. Still, this variance decreases with increasing size of the reference group, and therefore, this method may be optimal where a large reference group can easily be gathered and the distribution mode of the measurements is uncertain.
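The rank-counting rule described above can be sketched in Python as follows. The data are hypothetical (the integers 1 to 200 stand in for 200 measurements), the function name is illustrative, and the simple rounding of ranks is only one of several percentile conventions; others interpolate between neighbouring measurements.

```python
def percentile_reference_range(values):
    """Pick the measurements at the 2.5th and 97.5th percentile ranks (1-based)."""
    ordered = sorted(values)
    n = len(ordered)
    lower_rank = max(1, round(n * 25 / 1000))   # e.g. the 5th of 200 measurements
    upper_rank = min(n, round(n * 975 / 1000))  # e.g. the 195th of 200 measurements
    return ordered[lower_rank - 1], ordered[upper_rank - 1]


# Hypothetical reference group: 200 measurements with values 1..200,
# so that each value equals its own rank.
measurements = list(range(1, 201))
lower, upper = percentile_reference_range(measurements)
print(lower, upper)
```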

Bimodal distribution

In case of a bimodal distribution (seen at right), it is useful to find out why this is the case. Two reference ranges can be established for the two different groups of people, making it possible to assume a normal distribution for each group. This bimodal pattern is commonly seen in tests that differ between men and women, such as prostate specific antigen.

Interpretation of standard ranges in medical tests

In case of medical tests whose results are of continuous values, reference ranges can be used in the interpretation of an individual test result. This is primarily used for diagnostic tests and screening tests, while monitoring tests may optimally be interpreted from previous tests of the same individual instead.

Probability of random variability

Reference ranges aid in the evaluation of whether a test result's deviation from the mean is a result of random variability or a result of an underlying disease or condition. If the reference group used to establish the reference range can be assumed to be representative of the individual person in a healthy state, then a test result from that individual that turns out to be lower or higher than the reference range can be interpreted as having had less than a 2.5% probability of occurring by random variability in the absence of disease or other condition, which, in turn, is a strong indication for considering an underlying disease or condition as a cause.

Such further consideration can be performed, for example, by an epidemiology-based differential diagnostic procedure, where potential candidate conditions are listed that may explain the finding, followed by calculations of how probable they are to have occurred in the first place, in turn followed by a comparison with the probability that the result would have occurred by random variability.

If the establishment of the reference range could have been made assuming a normal distribution, then the probability that the result would be an effect of random variability can be further specified as follows:

The standard deviation, if not given already, can be inversely calculated by the fact that the absolute value of the difference between the mean and either the upper or lower limit of the reference range is approximately 2 standard deviations (more accurately 1.96), and thus:

- Standard deviation (s.d.) ≈ | (Mean) - (Upper limit) |/2.

The standard score for the individual's test can subsequently be calculated as:

- Standard score (z) = | (Mean) - (individual measurement) |/s.d.

The probability that a value lies a certain distance from the mean can subsequently be calculated from the relation between standard score and prediction intervals. For example, a standard score of 2.58 corresponds to a prediction interval of 99%, [12] corresponding to a probability of 0.5% that a result lies at least that far from the mean in a given direction in the absence of disease.
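These steps can be sketched with Python's standard library, whose `statistics.NormalDist` class provides the normal cumulative distribution function. The helper names and the numbers in the small demonstration (a mean of 10, an upper limit of 12 and a measurement of 13) are hypothetical and serve only to illustrate the formulas.

```python
from statistics import NormalDist


def sd_from_reference_range(mean, limit, factor=2.0):
    """Back-calculate the standard deviation from the mean and one reference limit."""
    return abs(mean - limit) / factor


def standard_score(mean, sd, measurement):
    """Absolute distance from the mean in units of standard deviations."""
    return abs(mean - measurement) / sd


def one_sided_tail(z):
    """Probability of a value at least z standard deviations beyond the mean, on one side."""
    return 1.0 - NormalDist().cdf(z)


def central_interval(z):
    """Coverage of the interval within z standard deviations on both sides of the mean."""
    return 1.0 - 2.0 * one_sided_tail(z)


# Standard score of 2.58, as in the text.
print(round(central_interval(2.58), 2), round(one_sided_tail(2.58), 3))

# Hypothetical numbers: mean 10, upper limit 12, individual measurement 13.
sd = sd_from_reference_range(10.0, 12.0)
z = standard_score(10.0, sd, 13.0)
print(sd, z)
```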

Example

Suppose, for example, that an individual takes a test that measures the ionized calcium in the blood, resulting in a value of 1.30 mmol/L, and that a reference group that appropriately represents the individual has established a reference range of 1.05 to 1.25 mmol/L. The individual's value is higher than the upper limit of the reference range, and therefore has less than 2.5% probability of being a result of random variability, constituting a strong indication to make a differential diagnosis of possible causative conditions.

In this case, an epidemiology-based differential diagnostic procedure is used, and its first step is to find candidate conditions that can explain the finding.

Hypercalcemia (usually defined as a calcium level above the reference range) is mostly caused by either primary hyperparathyroidism or malignancy, [13] and therefore, it is reasonable to include these in the differential diagnosis.

Using, for example, epidemiology and the individual's risk factors, suppose that the probability that the hypercalcemia would have been caused by primary hyperparathyroidism in the first place is estimated to be 0.00125 (or 0.125%), that the equivalent probability for cancer is 0.0002, and that it is 0.0005 for other conditions. With a probability given as less than 0.025 of no disease, this corresponds to a probability that the hypercalcemia would have occurred in the first place of up to 0.02695. However, the hypercalcemia has occurred with a probability of 100%, resulting in adjusted probabilities of at least 4.6% that primary hyperparathyroidism has caused the hypercalcemia, at least 0.7% for cancer, at least 1.9% for other conditions, and up to 92.8% that there is no disease and the hypercalcemia is caused by random variability.

In this case, further processing benefits from specification of the probability of random variability:

The value is assumed to conform acceptably to a normal distribution, so the mean can be assumed to be 1.15 in the reference group. The standard deviation, if not given already, can be inversely calculated by knowing that the absolute value of the difference between the mean and, for example, the upper limit of the reference range, is approximately 2 standard deviations (more accurately 1.96), and thus:

- Standard deviation (s.d.) ≈ | (Mean) - (Upper limit) |/2 = | 1.15 - 1.25 |/2 = 0.1/2 = 0.05.

The standard score for the individual's test is subsequently calculated as:

- Standard score (z) = | (Mean) - (individual measurement) |/s.d. = | 1.15 - 1.30 |/0.05 = 0.15/0.05 = 3.

A value so far above the mean as to have a standard score of 3 occurs with a probability of approximately 0.14% (given by (100% − 99.73%)/2, with 99.73% being the more precise figure behind the 68–95–99.7 rule).

Using the same probabilities that the hypercalcemia would have occurred in the first place by the other candidate conditions, the probability that hypercalcemia would have occurred in the first place is 0.00335 (0.00125 + 0.0002 + 0.0005 + 0.0014). Given that hypercalcemia has occurred, this gives adjusted probabilities of 37.3%, 6.0%, 14.9% and 41.8%, respectively, for primary hyperparathyroidism, cancer, other conditions and no disease.
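Both sets of adjusted probabilities in this example follow from dividing each candidate's probability by the sum over all candidates. A minimal Python sketch, using the probabilities stated in the example (the function name `adjusted_probabilities` and the dictionary keys are illustrative):

```python
def adjusted_probabilities(prior_probabilities):
    """Rescale the candidate probabilities so that they sum to 1."""
    total = sum(prior_probabilities.values())
    return {cause: p / total for cause, p in prior_probabilities.items()}


# Probabilities from the example that each condition would have caused the finding.
candidates = {
    "primary hyperparathyroidism": 0.00125,
    "cancer": 0.0002,
    "other conditions": 0.0005,
}

# First pass: random variability bounded by 0.025 (value outside the reference range).
first = adjusted_probabilities({**candidates, "no disease": 0.025})

# Second pass: random variability specified as 0.0014 (standard score of 3).
refined = adjusted_probabilities({**candidates, "no disease": 0.0014})

for label, result in (("first", first), ("refined", refined)):
    print(label, {cause: f"{100 * p:.1f}%" for cause, p in result.items()})
```

Narrowing the probability of random variability from 0.025 to 0.0014 is what shifts most of the weight from "no disease" to the candidate conditions.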