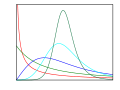

Fisher's moment coefficient of skewness

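The skewness γ1 of a random variable X is the third standardized moment μ̃3, defined as: [4] [5]

$$\gamma_1 := \tilde{\mu}_3 = \operatorname{E}\left[\left(\frac{X-\mu}{\sigma}\right)^{3}\right] = \frac{\mu_3}{\sigma^3} = \frac{\operatorname{E}\left[(X-\mu)^3\right]}{\left(\operatorname{E}\left[(X-\mu)^2\right]\right)^{3/2}} = \frac{\kappa_3}{\kappa_2^{3/2}}$$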

where μ is the mean, σ is the standard deviation, E is the expectation operator, μ3 is the third central moment, and κt are the t-th cumulants. It is sometimes referred to as Pearson's moment coefficient of skewness, [5] or simply the moment coefficient of skewness, [4] but should not be confused with Pearson's other skewness statistics (see below). The last equality expresses skewness in terms of the ratio of the third cumulant κ3 to the 1.5th power of the second cumulant κ2. This is analogous to the definition of kurtosis as the fourth cumulant normalized by the square of the second cumulant. The skewness is also sometimes denoted Skew[X].

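If σ is finite and μ is finite too, then skewness can be expressed in terms of the non-central moment E[X^3] by expanding the previous formula:

$$\begin{aligned}
\gamma_1 &= \operatorname{E}\left[\left(\frac{X-\mu}{\sigma}\right)^{3}\right] \\
&= \frac{\operatorname{E}[X^3] - 3\mu\operatorname{E}[X^2] + 3\mu^2\operatorname{E}[X] - \mu^3}{\sigma^3} \\
&= \frac{\operatorname{E}[X^3] - 3\mu\left(\operatorname{E}[X^2] - \mu\operatorname{E}[X]\right) - \mu^3}{\sigma^3} \\
&= \frac{\operatorname{E}[X^3] - 3\mu\sigma^2 - \mu^3}{\sigma^3}
\end{aligned}$$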
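As a quick numerical check of the two forms, the following minimal sketch computes the skewness of a discrete distribution once from the central moments and once from the raw moment E[X^3]. The function names and the Bernoulli(0.2) example are illustrative assumptions, not part of the cited sources.

```python
def skewness_central(values, probs):
    """Third standardized moment E[((X - mu) / sigma)^3] of a discrete distribution."""
    mu = sum(p * x for x, p in zip(values, probs))
    var = sum(p * (x - mu) ** 2 for x, p in zip(values, probs))
    mu3 = sum(p * (x - mu) ** 3 for x, p in zip(values, probs))
    return mu3 / var ** 1.5


def skewness_raw(values, probs):
    """Same quantity via (E[X^3] - 3*mu*sigma^2 - mu^3) / sigma^3."""
    mu = sum(p * x for x, p in zip(values, probs))
    ex2 = sum(p * x ** 2 for x, p in zip(values, probs))
    ex3 = sum(p * x ** 3 for x, p in zip(values, probs))
    var = ex2 - mu ** 2
    return (ex3 - 3 * mu * var - mu ** 3) / var ** 1.5


# Hypothetical example: a Bernoulli variable with success probability 0.2.
values, probs = [0, 1], [0.8, 0.2]
print(skewness_central(values, probs), skewness_raw(values, probs))
```

Both functions should agree up to floating-point rounding; for this example the skewness is (1 − 2p)/√(p(1 − p)) = 1.5.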

Sample skewness

For a sample of n values, two natural estimators of the population skewness are [6]

$$b_1 = \frac{m_3}{s^3} = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^3}{\left[\frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})^2\right]^{3/2}}$$

and

$$g_1 = \frac{m_3}{m_2^{3/2}} = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^3}{\left[\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^2\right]^{3/2}}$$

where x̄ is the sample mean, s is the sample standard deviation, m2 is the (biased) sample second central moment, and m3 is the (biased) sample third central moment. [6] g1 is a method of moments estimator.
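The two estimators differ only in the denominator: b1 divides by the cube of the sample standard deviation (with n − 1 in the variance), while g1 divides by the 3/2 power of the biased second central moment. A minimal sketch, with a hypothetical function name and made-up data:

```python
import math


def sample_skewness_b1_g1(xs):
    """Return (b1, g1) = (m3 / s^3, m3 / m2^(3/2)) for a sample xs."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n   # biased second central moment
    m3 = sum((x - mean) ** 3 for x in xs) / n   # biased third central moment
    s = math.sqrt(n * m2 / (n - 1))             # sample standard deviation
    return m3 / s ** 3, m3 / m2 ** 1.5


# Illustrative data only (not from the cited sources).
b1, g1 = sample_skewness_b1_g1([1, 2, 3, 4, 10])
print(b1, g1)
```

Because s² = n·m2/(n − 1), the two values are related by b1 = ((n − 1)/n)^(3/2) · g1, so b1 is always the smaller of the two in absolute value.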

Another common definition of the sample skewness is [6] [7]

$$G_1 = \frac{k_3}{k_2^{3/2}} = \frac{n^2}{(n-1)(n-2)}\, b_1 = \frac{\sqrt{n(n-1)}}{n-2}\, g_1$$

where k3 is the unique symmetric unbiased estimator of the third cumulant and k2 = s² is the symmetric unbiased estimator of the second cumulant (i.e. the sample variance). This adjusted Fisher–Pearson standardized moment coefficient G1 is the version found in Excel and several statistical packages including Minitab, SAS and SPSS. [7]
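The adjusted coefficient can be sketched directly from the unbiased cumulant estimators, assuming k2 = n·m2/(n − 1) and k3 = n²·m3/((n − 1)(n − 2)), where m2 and m3 are the biased central moments. The function name and data below are illustrative assumptions.

```python
def adjusted_skewness_G1(xs):
    """Adjusted Fisher-Pearson coefficient G1 = k3 / k2^(3/2) for a sample xs."""
    n = len(xs)
    if n < 3:
        raise ValueError("G1 needs at least three observations")
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n   # biased second central moment
    m3 = sum((x - mean) ** 3 for x in xs) / n   # biased third central moment
    k2 = n * m2 / (n - 1)                       # unbiased variance estimate
    k3 = n ** 2 * m3 / ((n - 1) * (n - 2))      # unbiased third-cumulant estimate
    return k3 / k2 ** 1.5


# Illustrative data only (not from the cited sources).
print(adjusted_skewness_G1([1, 2, 3, 4, 10]))
```

For this sample m2 = 10 and m3 = 36, so the result should equal √(n(n − 1))/(n − 2) · m3/m2^(3/2) with n = 5, which is one way to sanity-check the implementation.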

Under the assumption that the underlying random variable X is normally distributed, it can be shown that all three ratios b1, g1 and G1 are unbiased and consistent estimators of the population skewness γ1 = 0, with $\sqrt{n}\, b_1 \xrightarrow{d} N(0, 6)$, i.e., their distributions converge to a normal distribution with mean 0 and variance 6 (Fisher, 1930). [6] The variance of the sample skewness is thus approximately 6/n for sufficiently large samples. More precisely, in a random sample of size n from a normal distribution, [8] [9]

$$\operatorname{var}(G_1) = \frac{6n(n-1)}{(n-2)(n+1)(n+3)}.$$

In normal samples, b1 has the smallest variance of the three estimators, with [6]

$$\operatorname{var}(b_1) < \operatorname{var}(g_1) < \operatorname{var}(G_1).$$
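The ordering of the variances can be illustrated numerically. The sketch below takes the exact normal-sample variance of G1, 6n(n − 1)/((n − 2)(n + 1)(n + 3)), and derives the other two from the fixed rescalings g1 = (n − 2)/√(n(n − 1)) · G1 and b1 = (n − 1)(n − 2)/n² · G1; the function names are hypothetical.

```python
def var_G1_normal(n):
    """Exact variance of G1 for a normal sample of size n (n >= 3)."""
    return 6.0 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3))


def var_g1_normal(n):
    """Variance of g1, using g1 = (n - 2) / sqrt(n (n - 1)) * G1."""
    return var_G1_normal(n) * (n - 2) ** 2 / (n * (n - 1))


def var_b1_normal(n):
    """Variance of b1, using b1 = (n - 1)(n - 2) / n^2 * G1."""
    return var_G1_normal(n) * ((n - 1) * (n - 2) / n ** 2) ** 2


for n in (10, 100, 1000):
    # Columns: n, var(b1), var(g1), var(G1), large-sample approximation 6/n
    print(n, var_b1_normal(n), var_g1_normal(n), var_G1_normal(n), 6.0 / n)
```

For each n the three variances should appear in increasing order, and all of them should approach 6/n as n grows.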

For non-normal distributions, b1, g1 and G1 are generally biased estimators of the population skewness γ1; their expected values can even have the opposite sign from the true skewness. For instance, a mixed distribution consisting of very thin Gaussians centred at −99, 0.5, and 2 with weights 0.01, 0.66, and 0.33 has a skewness γ1 of about −9.77, but in a sample of size 3, G1 has an expected value of about 0.32, since usually all three samples are in the positive-valued part of the distribution, which is skewed the other way.
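The mixture example can be explored with a small simulation. This is a sketch under stated assumptions: the component standard deviation `WIDTH = 0.01` is an arbitrary stand-in for "very thin", the population skewness is computed in the point-mass limit (width → 0), and the expected value of G1 is only estimated by Monte Carlo, so the result varies slightly with the seed and number of trials.

```python
import math
import random

MEANS = [-99.0, 0.5, 2.0]
WEIGHTS = [0.01, 0.66, 0.33]
WIDTH = 0.01  # assumed standard deviation of each thin Gaussian component


def population_skewness_point_mass_limit():
    """Skewness of the mixture when each component shrinks to a point mass."""
    mu = sum(w * m for m, w in zip(MEANS, WEIGHTS))
    var = sum(w * (m - mu) ** 2 for m, w in zip(MEANS, WEIGHTS))
    mu3 = sum(w * (m - mu) ** 3 for m, w in zip(MEANS, WEIGHTS))
    return mu3 / var ** 1.5


def G1(xs):
    """Adjusted sample skewness sqrt(n(n-1)) / (n-2) * m3 / m2^(3/2)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return math.sqrt(n * (n - 1)) / (n - 2) * m3 / m2 ** 1.5


def mean_G1_of_triples(trials, seed=0):
    """Monte Carlo estimate of E[G1] for samples of size 3 from the mixture."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        centres = rng.choices(MEANS, weights=WEIGHTS, k=3)
        total += G1([rng.gauss(c, WIDTH) for c in centres])
    return total / trials


if __name__ == "__main__":
    print(population_skewness_point_mass_limit())  # strongly negative
    print(mean_G1_of_triples(100_000))             # positive on average
```

The sign flip arises because the component at −99 is drawn in only about 3% of triples; the remaining triples see only the two positive components, where the heavier one sits to the left of the lighter one.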