Solutions to variational problems

Several measures of central tendency can be characterized as solving a variational problem, in the sense of the calculus of variations, namely minimizing variation from the center. That is, given a measure of statistical dispersion, one asks for a measure of central tendency that minimizes variation: such that variation from the center is minimal among all choices of center. In a quip, "dispersion precedes location". These measures are initially defined in one dimension, but can be generalized to multiple dimensions. This center may or may not be unique. In the sense of Lp spaces, the correspondence is:

| Lp | dispersion | central tendency |

|---|---|---|

| L0 | variation ratio | mode [lower-alpha 1] |

| L1 | average absolute deviation | median (geometric median) [lower-alpha 2] |

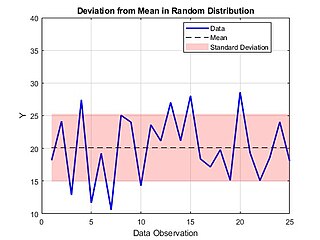

| L2 | standard deviation | mean (centroid) [lower-alpha 3] |

| L∞ | maximum deviation | midrange [lower-alpha 4] |

The associated functions are called p-norms: respectively 0-"norm", 1-norm, 2-norm, and ∞-norm. The function corresponding to the L0 space is not a norm, and is thus often referred to in quotes: 0-"norm".

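In equations, for a given (finite) data set X, thought of as a vector $\mathbf{x} = (x_1, \ldots, x_n)$, the dispersion about a point c is the "distance" from $\mathbf{x}$ to the constant vector $\mathbf{c} = (c, \ldots, c)$ in the p-norm (normalized by the number of points n):

$$f_p(c) = \left\| \mathbf{x} - \mathbf{c} \right\|_p := \left( \frac{1}{n} \sum_{i=1}^{n} \left| x_i - c \right|^p \right)^{1/p}$$
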
For p = 0 and p = ∞ these functions are defined by taking limits, respectively as p → 0 and p → ∞. For p = 0 the limit is taken of the terms $|x_i - c|^p$ (without the outer power 1/p): the limiting values are $0^0 = 0$ and $a^0 = 1$ for $a \neq 0$, so each term only records whether $x_i$ equals c, and the 0-"norm" counts the number of points unequal to c (the proportion, once normalized by n). For p = ∞ the largest difference dominates, and thus the ∞-norm is the maximum absolute difference.
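The correspondence in the table can be checked numerically. The sketch below is a minimal brute-force illustration, not a reference implementation: the function names `dispersion` and `best_center` are hypothetical, the p = 0 case uses the count-of-unequal-points convention described above, and a search over a small grid of candidate centers stands in for exact minimization.

```python
import math


def dispersion(xs, c, p):
    """Normalized p-"norm" distance from the data xs to the constant vector (c, ..., c)."""
    n = len(xs)
    diffs = [abs(x - c) for x in xs]
    if p == 0:
        # Limit p -> 0 of each term: 0**0 = 0 and a**0 = 1 for a != 0, so only
        # unequal points count; dividing by n gives the proportion of such points.
        return sum(1 for d in diffs if d != 0) / n
    if p == math.inf:
        # Limit p -> inf: the largest absolute difference dominates.
        return max(diffs)
    return (sum(d ** p for d in diffs) / n) ** (1 / p)


def best_center(xs, p, grid):
    """Brute-force search for the center c in grid minimizing dispersion(xs, c, p)."""
    return min(grid, key=lambda c: dispersion(xs, c, p))


xs = [1, 1, 2, 4, 7]               # mode 1, median 2, mean 3, midrange 4
grid = [i / 2 for i in range(17)]  # candidate centers 0.0, 0.5, ..., 8.0
for p in (0, 1, 2, math.inf):
    print(p, best_center(xs, p, grid))
```

For this data set the four minimizers come out as 1.0, 2.0, 3.0 and 4.0, which are the mode, median, mean and midrange respectively.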

Uniqueness

The mean (L2 center) and midrange (L∞ center) are unique (when they exist), while the median (L1 center) and mode (L0 center) are not in general unique. This can be understood in terms of convexity of the associated functions (coercive functions).

The 2-norm and ∞-norm are strictly convex, and thus (by convex optimization) the minimizer is unique (if it exists), and exists for bounded distributions. Thus standard deviation about the mean is lower than standard deviation about any other point, and the maximum deviation about the midrange is lower than the maximum deviation about any other point.
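For the 2-norm, uniqueness of the minimizer can also be seen directly from a standard identity. Expanding $(x_i - c)^2 = \big((x_i - \bar{x}) + (\bar{x} - c)\big)^2$ around the mean $\bar{x}$ and using the fact that the deviations from the mean sum to zero (so the cross term vanishes) gives

$$f_2(c)^2 = \frac{1}{n} \sum_{i=1}^{n} (x_i - c)^2 = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^2 + (\bar{x} - c)^2 = f_2(\bar{x})^2 + (\bar{x} - c)^2 .$$

The squared dispersion about c therefore exceeds that about the mean by exactly $(\bar{x} - c)^2$, which is positive whenever $c \neq \bar{x}$.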

The 1-norm is not strictly convex, and strict convexity is what guarantees uniqueness of the minimizer. Correspondingly, the median (in this sense of minimizing) is not in general unique: for a data set with an even number of points, any point between the two middle values minimizes the average absolute deviation.
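A small numerical check of this flat region, assuming the data set {1, 2, 3, 4} chosen for illustration (the helper name `mean_abs_dev` is hypothetical): every center between the two middle values 2 and 3 gives the same average absolute deviation, and moving outside that interval increases it.

```python
def mean_abs_dev(xs, c):
    """Average absolute deviation of xs about the center c (the normalized 1-norm)."""
    return sum(abs(x - c) for x in xs) / len(xs)


xs = [1, 2, 3, 4]
for c in (1.5, 2.0, 2.5, 3.0, 3.5):
    # Centers in [2, 3] all give 1.0; centers outside give a larger value.
    print(c, mean_abs_dev(xs, c))
```

On [2, 3] the terms $(c - 1) + (c - 2) + (3 - c) + (4 - c)$ sum to the constant 4, which is why the function is flat there.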

The 0-"norm" is not convex (hence not a norm). Correspondingly, the mode is not unique – for example, in a uniform distribution any point is the mode.
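Because every value attaining the highest count minimizes the 0-"norm" dispersion, a faithful "mode" routine returns a set rather than a single value. A minimal sketch using Python's `collections.Counter` (the function name `modes` is hypothetical):

```python
from collections import Counter


def modes(xs):
    """Return every value with the highest count, i.e. all minimizers of the 0-"norm" dispersion."""
    counts = Counter(xs)
    top = max(counts.values())
    return sorted(v for v, k in counts.items() if k == top)


print(modes([1, 1, 2, 2, 3]))  # [1, 2]
print(modes([4, 5, 6]))        # [4, 5, 6]: each value occurs once, so every value is a mode
```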

Clustering

Instead of a single central point, one can ask for multiple points such that the variation from these points is minimized. This leads to cluster analysis, where each point in the data set is clustered with the nearest "center". Most commonly, using the 2-norm generalizes the mean to k-means clustering, while using the 1-norm generalizes the (geometric) median to k-medians clustering. Using the 0-"norm" simply generalizes the mode (most common value) to using the k most common values as centers.
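The 0-"norm" case needs no iteration: the k most common values are the centers, and the remaining dispersion is the proportion of points equal to none of them. A minimal sketch with hypothetical helper names `k_mode_centers` and `zero_norm_dispersion`; ties in count are broken by `Counter.most_common`, which keeps first-encountered order among equal counts.

```python
from collections import Counter


def k_mode_centers(xs, k):
    """The k most common values, used as centers for the 0-"norm"."""
    return [v for v, _ in Counter(xs).most_common(k)]


def zero_norm_dispersion(xs, centers):
    """Proportion of points that are equal to none of the centers."""
    cs = set(centers)
    return sum(1 for x in xs if x not in cs) / len(xs)


xs = ["a", "b", "a", "c", "b", "a", "d"]
centers = k_mode_centers(xs, 2)
print(centers)                            # ['a', 'b']
print(zero_norm_dispersion(xs, centers))  # 2/7: only "c" and "d" are not at a center
```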

Unlike the single-center statistics, this multi-center clustering cannot in general be computed in a closed-form expression, and instead must be computed or approximated by an iterative method; one general approach is the family of expectation–maximization algorithms.
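For k-means, the usual iterative method is Lloyd's algorithm, which alternates a hard "expectation-like" assignment step with a "maximization-like" update step. The sketch below is a minimal one-dimensional version under stated assumptions: the name `kmeans_1d`, the initial centers supplied by the caller, and the rule of keeping a center unchanged when its cluster is empty are all choices made here for illustration. It converges to a local optimum, not necessarily the global one.

```python
def kmeans_1d(xs, centers, iters=100):
    """Lloyd's algorithm for k-means on one-dimensional data, from given initial centers."""
    centers = list(centers)
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for x in xs:
            j = min(range(len(centers)), key=lambda i: abs(x - centers[i]))
            clusters[j].append(x)
        # Update step: move each center to the mean of its cluster
        # (an empty cluster keeps its previous center).
        new = [sum(c) / len(c) if c else centers[j] for j, c in enumerate(clusters)]
        if new == centers:  # no center moved: converged
            break
        centers = new
    return centers


print(kmeans_1d([1, 2, 3, 10, 11, 12], [1, 2]))  # [2.0, 11.0]
```

Replacing the mean in the update step with the median of each cluster gives the analogous iteration for k-medians.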

Information geometry

The notion of a "center" as minimizing variation can be generalized in information geometry as a distribution that minimizes divergence (a generalized distance) from a data set. The most common case is maximum likelihood estimation, where the maximum likelihood estimate (MLE) maximizes likelihood (minimizes expected surprisal), which can be interpreted geometrically by using entropy to measure variation: the MLE minimizes cross-entropy (equivalently, relative entropy, Kullback–Leibler divergence).
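The link between cross-entropy and likelihood is a one-line identity. For the empirical measure $\hat{p}$ of a sample $x_1, \ldots, x_n$ and a candidate distribution $q$:

$$H(\hat{p}, q) = -\sum_{x} \hat{p}(x) \log q(x) = -\frac{1}{n} \sum_{i=1}^{n} \log q(x_i) = H(\hat{p}) + D_{\mathrm{KL}}(\hat{p} \,\|\, q).$$

Since $H(\hat{p})$ does not depend on $q$, minimizing cross-entropy, minimizing the Kullback–Leibler divergence from $\hat{p}$, and maximizing the average log-likelihood all select the same $q$.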

A simple example of this is for the center of nominal data: instead of using the mode (the only single-valued "center"), one often uses the empirical measure (the frequency distribution divided by the sample size) as a "center". For example, given binary data, say heads or tails, if a data set consists of 2 heads and 1 tails, then the mode is "heads", but the empirical measure is 2/3 heads, 1/3 tails, which minimizes the cross-entropy (total surprisal) from the data set. This perspective is also used in regression analysis, where least squares finds the solution that minimizes the sum of squared distances from the data, and analogously in logistic regression, where a maximum likelihood estimate minimizes the surprisal (information distance).
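The heads/tails example can be reproduced with a grid search over the probability of heads. This is a minimal sketch: the helper name `cross_entropy`, the "H"/"T" encoding and the grid step of 1/300 are assumptions for illustration, and the average surprisal (total divided by n) is used, which has the same minimizer as the total.

```python
import math


def cross_entropy(data, q_heads):
    """Average surprisal (in nats) of the data under a coin with P(heads) = q_heads."""
    return -sum(math.log(q_heads if x == "H" else 1 - q_heads) for x in data) / len(data)


data = ["H", "H", "T"]
grid = [i / 300 for i in range(1, 300)]  # candidate q in (0, 1), step 1/300
best = min(grid, key=lambda q: cross_entropy(data, q))
print(best)  # 0.666..., the empirical frequency of heads
```

The grid deliberately excludes q = 1, the distribution concentrated on the mode "heads": under it the observed tail would have probability 0 and infinite surprisal, which is one way to see why the empirical measure rather than the mode serves as the "center" here.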