Examples

A common example of a first-hitting-time model is a ruin problem, such as the gambler's ruin. In this example, an entity (often described as a gambler or an insurance company) has an amount of money that varies randomly with time, possibly with some drift. The model considers the event that the amount of money reaches 0, representing bankruptcy. The model can answer questions such as the probability that this occurs within finite time, or the mean time until it occurs.
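As a minimal sketch of these two questions, the following assumes the classical two-barrier gambler's ruin, which is one specific instance and not something the description above fixes: the gambler starts with `k` units, wins one unit with probability `p` and loses one otherwise, and play stops at 0 (ruin) or at a hypothetical target `n`. The function names are illustrative, and the code uses the standard closed-form results for this special case.

```python
def ruin_probability(k: int, n: int, p: float = 0.5) -> float:
    """Probability of hitting 0 before n, starting from k (0 <= k <= n)."""
    q = 1.0 - p
    if abs(p - q) < 1e-12:  # fair game: linear in the starting capital
        return 1.0 - k / n
    r = q / p
    return (r**k - r**n) / (1.0 - r**n)


def mean_duration(k: int, n: int, p: float = 0.5) -> float:
    """Expected number of bets until the walk first hits 0 or n."""
    q = 1.0 - p
    if abs(p - q) < 1e-12:  # fair game
        return float(k * (n - k))
    r = q / p
    return k / (q - p) - (n / (q - p)) * (1.0 - r**k) / (1.0 - r**n)


if __name__ == "__main__":
    # Assumed illustrative numbers: start with 3 units, stop at 0 or 10.
    print(ruin_probability(3, 10), mean_duration(3, 10))
    print(ruin_probability(3, 10, p=0.45), mean_duration(3, 10, p=0.45))
```

Both quantities are first-hitting-time statistics: the first is the probability that the threshold 0 is hit before the target, and the second is the mean of the hitting time of either barrier.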

First-hitting-time models can be applied to the expected lifetimes of patients or mechanical devices. When the process reaches an adverse threshold state for the first time, the patient dies or the device breaks down.

A financial application of the first hitting time probability has been developed by Marcello Minenna in order to compute the minimum investment time horizon. [11] [12]

First passage time of a 1D Brownian particle

One of the simplest and most ubiquitous stochastic systems is the Brownian particle in one dimension. This system describes a particle that moves stochastically in one-dimensional space, with equal probability of moving to the left or to the right. Because Brownian motion is often used as a tool to understand more complex phenomena, it is important to understand the distribution of the first passage time of a Brownian particle to some position distant from its starting location. This is done as follows.

The probability density function (PDF) for a particle in one dimension is found by solving the one-dimensional diffusion equation. (This equation states that the position probability density diffuses outward over time. It is analogous to, say, cream in a cup of coffee, if the cream was initially all contained within some small region. After a long time the cream has diffused evenly throughout the entire drink.) Namely,

$$\frac{\partial p(x,t\mid x_0)}{\partial t} = D\,\frac{\partial^2 p(x,t\mid x_0)}{\partial x^2},$$

given the initial condition $p(x,t=0\mid x_0)=\delta(x-x_0)$; where $x(t)$ is the position of the particle at some given time, $x_0$ is the tagged particle's initial position, and $D$ is the diffusion constant with the S.I. units $\mathrm{m^2\,s^{-1}}$ (an indirect measure of the particle's speed). The bar in the argument of the instantaneous probability refers to the conditional probability. The diffusion equation states that the rate of change over time in the probability of finding the particle at position $x(t)$ depends on the second spatial derivative (the curvature) of that probability at that position.

It can be shown that the one-dimensional PDF is

$$p(x,t\mid x_0)=\frac{1}{\sqrt{4\pi Dt}}\exp\left(-\frac{(x-x_0)^2}{4Dt}\right).$$

This states that the probability of finding the particle at $x(t)$ is Gaussian, and the width of the Gaussian is time dependent. More specifically, the full width at half maximum (FWHM) of the distribution in $x$ scales like

$$\mathrm{FWHM}\sim\sqrt{t}.$$
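The claim that the Gaussian solves the diffusion equation, and that its width grows like the square root of time, can be checked numerically. The sketch below is only an illustration: the function names, the finite-difference step `h`, and the test values are assumptions, and the FWHM expression $4\sqrt{\ln(2)\,Dt}$ is obtained by setting the exponential factor equal to one half.

```python
import math


def gaussian_pdf(x: float, t: float, x0: float = 0.0, D: float = 1.0) -> float:
    """Free-space solution p(x, t | x0) of the 1D diffusion equation."""
    return math.exp(-((x - x0) ** 2) / (4.0 * D * t)) / math.sqrt(4.0 * math.pi * D * t)


def diffusion_residual(x, t, x0=0.0, D=1.0, h=1e-3):
    """Central-difference estimate of dp/dt - D * d2p/dx2 (should be near 0)."""
    dp_dt = (gaussian_pdf(x, t + h, x0, D) - gaussian_pdf(x, t - h, x0, D)) / (2.0 * h)
    d2p_dx2 = (
        gaussian_pdf(x + h, t, x0, D)
        - 2.0 * gaussian_pdf(x, t, x0, D)
        + gaussian_pdf(x - h, t, x0, D)
    ) / h**2
    return dp_dt - D * d2p_dx2


def fwhm(t: float, D: float = 1.0) -> float:
    """Full width at half maximum of the Gaussian in x: 4 * sqrt(ln(2) * D * t)."""
    return 4.0 * math.sqrt(math.log(2.0) * D * t)


if __name__ == "__main__":
    print(diffusion_residual(0.7, 1.3, x0=0.2, D=0.5))  # small, limited by step h
    print(fwhm(4.0) / fwhm(1.0))  # quadrupling t doubles the width
```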

Using the PDF, one is able to derive the average of a given function, $L$, at time $t$:

$$\langle L(t)\rangle \equiv \int_{-\infty}^{\infty} L(x,t)\,p(x,t)\,dx,$$

where the average is taken over all space (or any applicable variable).
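As an illustration of such an average, the sketch below integrates a function against the free Gaussian PDF with a simple trapezoidal rule. The helper names, the integration window of 12 standard deviations, and the grid size are assumptions chosen for the example. Taking $L=(x-x_0)^2$ should recover the well-known mean squared displacement $2Dt$.

```python
import math


def gaussian_pdf(x, t, x0=0.0, D=1.0):
    """Free-space Gaussian PDF of the 1D Brownian particle."""
    return math.exp(-((x - x0) ** 2) / (4.0 * D * t)) / math.sqrt(4.0 * math.pi * D * t)


def average(L, t, x0=0.0, D=1.0, half_width=12.0, n=20001):
    """Trapezoidal estimate of <L(t)> = integral of L(x, t) * p(x, t | x0) dx."""
    sigma = math.sqrt(2.0 * D * t)  # standard deviation of the Gaussian
    a = x0 - half_width * sigma
    b = x0 + half_width * sigma
    dx = (b - a) / (n - 1)
    total = 0.0
    for i in range(n):
        x = a + i * dx
        weight = 0.5 if i in (0, n - 1) else 1.0
        total += weight * L(x, t) * gaussian_pdf(x, t, x0, D)
    return total * dx


if __name__ == "__main__":
    x0, D, t = 1.0, 0.5, 2.0
    msd = average(lambda x, t: (x - x0) ** 2, t, x0=x0, D=D)
    print(msd, 2.0 * D * t)  # numerical value versus 2 D t
```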

The first passage time density (FPTD) is the probability density for a particle to first reach a point $x_c$ at exactly time $t$ (not at some time during the interval up to $t$). This probability density is calculable from the survival probability (a more common probability measure in statistics). Consider the absorbing boundary condition $p(x_c,t)=0$. (The subscript c for the absorption point $x_c$ is an abbreviation for cliff, used in many texts as an analogy to an absorption point.) The PDF satisfying this boundary condition is given by

$$p(x,t\mid x_0;x_c)=\frac{1}{\sqrt{4\pi Dt}}\left[\exp\left(-\frac{(x-x_0)^2}{4Dt}\right)-\exp\left(-\frac{(x-(2x_c-x_0))^2}{4Dt}\right)\right],$$

for $x<x_c$. The survival probability, the probability that the particle has remained at a position $x<x_c$ for all times up to $t$, is given by

$$S(t)\equiv\int_{-\infty}^{x_c}p(x,t\mid x_0;x_c)\,dx=\operatorname{erf}\left(\frac{x_c-x_0}{2\sqrt{Dt}}\right),$$

where $\operatorname{erf}$ is the error function. The relation between the survival probability and the FPTD is as follows: the probability that a particle has reached the absorption point between times $t$ and $t+dt$ is $f(t)\,dt=S(t)-S(t+dt)$. Using the first-order Taylor approximation, the definition of the FPTD follows:

$$f(t)=-\frac{\partial S(t)}{\partial t}.$$
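The survival probability can be cross-checked by integrating the absorbing-boundary PDF numerically and comparing the result with the error-function expression. The following is a sketch under stated assumptions: the PDF is taken to be the free Gaussian minus its mirror image about $x_c$, the particle starts to the left of the boundary ($x_0<x_c$), and the function names and grid parameters are illustrative.

```python
import math


def absorbed_pdf(x, t, x0, xc, D=1.0):
    """Gaussian minus its mirror image about xc; vanishes at x = xc."""
    norm = math.sqrt(4.0 * math.pi * D * t)
    direct = math.exp(-((x - x0) ** 2) / (4.0 * D * t))
    image = math.exp(-((x - (2.0 * xc - x0)) ** 2) / (4.0 * D * t))
    return (direct - image) / norm


def survival(t, x0, xc, D=1.0):
    """Closed form S(t) = erf((xc - x0) / (2 sqrt(D t))), assuming x0 < xc."""
    return math.erf((xc - x0) / (2.0 * math.sqrt(D * t)))


def survival_numeric(t, x0, xc, D=1.0, half_width=12.0, n=20001):
    """Trapezoidal integral of absorbed_pdf from far to the left up to xc."""
    a = x0 - half_width * math.sqrt(2.0 * D * t)  # stands in for minus infinity
    dx = (xc - a) / (n - 1)
    total = 0.0
    for i in range(n):
        weight = 0.5 if i in (0, n - 1) else 1.0
        total += weight * absorbed_pdf(a + i * dx, t, x0, xc, D)
    return total * dx


if __name__ == "__main__":
    print(survival(1.0, 0.0, 1.0), survival_numeric(1.0, 0.0, 1.0))
```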

By using the diffusion equation and integrating, the explicit FPTD is

$$f(t)\equiv\frac{|x_c-x_0|}{\sqrt{4\pi Dt^3}}\exp\left(-\frac{(x_c-x_0)^2}{4Dt}\right).$$

The first-passage time for a Brownian particle therefore follows a Lévy distribution.
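The following sketch checks two things numerically: that the explicit FPTD equals minus the time derivative of the survival probability $\operatorname{erf}\left(|x_c-x_0|/(2\sqrt{Dt})\right)$, and that it coincides with a Lévy density of location 0 and scale $c=(x_c-x_0)^2/(2D)$. The function names, the argument `delta` (standing for $|x_c-x_0|$), and the step `h` are assumptions made for the example.

```python
import math


def survival(t, delta, D=1.0):
    """Survival probability for a boundary at distance delta = |xc - x0|."""
    return math.erf(delta / (2.0 * math.sqrt(D * t)))


def fptd(t, delta, D=1.0):
    """Explicit first-passage-time density."""
    return delta / math.sqrt(4.0 * math.pi * D * t**3) * math.exp(-(delta**2) / (4.0 * D * t))


def fptd_from_survival(t, delta, D=1.0, h=1e-5):
    """Central-difference estimate of -dS/dt."""
    return -(survival(t + h, delta, D) - survival(t - h, delta, D)) / (2.0 * h)


def levy_pdf(t, c):
    """Levy density with location 0 and scale c, for t > 0."""
    return math.sqrt(c / (2.0 * math.pi)) * math.exp(-c / (2.0 * t)) / t**1.5


if __name__ == "__main__":
    t, delta, D = 2.0, 1.5, 0.7
    print(fptd(t, delta, D))
    print(fptd_from_survival(t, delta, D))
    print(levy_pdf(t, delta**2 / (2.0 * D)))
```

All three printed values should agree to within the accuracy of the finite difference.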

For $t\gg\frac{(x_c-x_0)^2}{4D}$, it follows from above that

$$f(t)\approx\frac{\Delta x}{\sqrt{4\pi Dt^3}}\sim t^{-3/2},$$

where $\Delta x\equiv|x_c-x_0|$. This equation states that the probability density for a Brownian particle achieving a first passage at some long time (as defined above) becomes increasingly small, but always remains nonzero.

The first moment of the FPTD diverges (as it is a so-called heavy-tailed distribution), so one cannot calculate the average FPT. Instead, one can calculate the typical time, the time at which the FPTD is at a maximum ($\partial f/\partial t=0$), i.e.,

$$\tau_{\rm ty}=\frac{(x_c-x_0)^2}{6D}.$$
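The location of the maximum can be derived by differentiating the logarithm of the density. A short derivation, writing $\Delta x\equiv|x_c-x_0|$ and starting from the explicit Lévy-type form of the FPTD:

```latex
\begin{aligned}
f(t) &= \frac{\Delta x}{\sqrt{4\pi D t^{3}}}
        \exp\!\left(-\frac{\Delta x^{2}}{4Dt}\right),\\
\ln f(t) &= \ln\frac{\Delta x}{\sqrt{4\pi D}} - \frac{3}{2}\ln t
        - \frac{\Delta x^{2}}{4Dt},\\
\frac{\partial \ln f}{\partial t} &= -\frac{3}{2t} + \frac{\Delta x^{2}}{4Dt^{2}} = 0
\quad\Longrightarrow\quad
\tau_{\rm ty} = \frac{\Delta x^{2}}{6D}.
\end{aligned}
```

Because the logarithmic derivative is positive for $t<\tau_{\rm ty}$ and negative for $t>\tau_{\rm ty}$, this stationary point is the unique maximum of the density.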

First-hitting-time applications in many families of stochastic processes

First hitting times are central features of many families of stochastic processes, including Poisson processes, Wiener processes, gamma processes, and Markov chains, to name but a few. The state of the stochastic process may represent, for example, the strength of a physical system, the health of an individual, or the financial condition of a business firm. The system, individual or firm fails or experiences some other critical endpoint when the process reaches a threshold state for the first time. The critical event may be an adverse event (such as equipment failure, congestive heart failure, or lung cancer) or a positive event (such as recovery from illness, discharge from a hospital stay, childbirth, or return to work after traumatic injury). The lapse of time until that critical event occurs is usually interpreted generically as a ‘survival time’. In some applications, the threshold is a set of multiple states, so one considers competing first hitting times for reaching the first threshold in the set, as is the case when considering competing causes of failure in equipment or death for a patient.

Latent vs observable

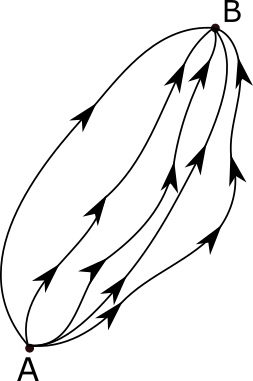

In many real-world applications, a first-hitting-time (FHT) model has three underlying components: (1) a parent stochastic process $\{X(t)\}$, which might be latent, (2) a threshold (or barrier), and (3) a time scale. The first hitting time is defined as the time when the stochastic process first reaches the threshold. It is very important to distinguish whether the sample path of the parent process is latent (i.e., unobservable) or observable, and this distinction is a characteristic of the FHT model. Latent processes are by far the most common. As an example, we can use a Wiener process $\{X(t),\,t\geq 0\}$ as the parent stochastic process. Such a Wiener process can be defined with the mean parameter $\mu$, the variance parameter $\sigma^2$, and the initial value $X(0)=x_0>0$.
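The three components can be made concrete with a small simulation. The sketch below is illustrative only: it assumes a threshold at 0 approached from above, an Euler time discretization with step `dt`, and a cutoff `t_max` after which a path is treated as censored; none of these choices, nor the function name, come from the text. For negative drift, the hitting time of a fixed barrier by a Wiener process is known to follow an inverse Gaussian distribution with mean $x_0/|\mu|$, which gives a rough sanity check. The time discretization biases the simulated mean slightly upward.

```python
import math
import random


def simulate_fht(mu, sigma2, x0, threshold=0.0, dt=0.01, t_max=100.0, rng=None):
    """Euler-scheme first hitting time of `threshold`, starting above it.

    Returns None if the threshold has not been reached by t_max, in which
    case the hitting time is censored.
    """
    if rng is None:
        rng = random.Random()
    step_sd = math.sqrt(sigma2 * dt)  # standard deviation of one increment
    x, t = x0, 0.0
    while t < t_max:
        x += mu * dt + step_sd * rng.gauss(0.0, 1.0)
        t += dt
        if x <= threshold:
            return t
    return None


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    times = [simulate_fht(mu=-1.0, sigma2=0.25, x0=1.0, rng=rng) for _ in range(2000)]
    observed = [t for t in times if t is not None]
    print(sum(observed) / len(observed))  # compare with x0 / |mu| = 1.0
```

In a latent-process setting only the returned hitting times would be available to the analyst, whereas the intermediate values of `x` would remain unobserved.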