MP3 is a coding format for digital audio developed largely by the Fraunhofer Society in Germany under the lead of Karlheinz Brandenburg, with support from other digital scientists in other countries. Originally defined as the third audio format of the MPEG-1 standard, it was retained and further extended—defining additional bit rates and support for more audio channels—as the third audio format of the subsequent MPEG-2 standard. A third version, known as MPEG-2.5—extended to better support lower bit rates—is commonly implemented but is not a recognized standard.

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) practical.

MPEG-2 is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard.

Ogg is a free, open container format maintained by the Xiph.Org Foundation. The authors of the Ogg format state that it is unrestricted by software patents and is designed to provide for efficient streaming and manipulation of high-quality digital multimedia. Its name is derived from "ogging", jargon from the computer game Netrek.

The Real-time Transport Protocol (RTP) is a network protocol for delivering audio and video over IP networks. RTP is used in communication and entertainment systems that involve streaming media, such as telephony, video teleconference applications including WebRTC, television services and web-based push-to-talk features.
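
As an illustration of what each RTP packet carries, the following minimal sketch parses the 12-byte fixed header laid out in RFC 3550. The function name `parse_rtp_header` and the sample bytes are hypothetical and chosen only for this example; header extensions and CSRC entries are skipped rather than interpreted.

```python
import struct


def parse_rtp_header(packet: bytes) -> dict:
    """Parse the 12-byte fixed RTP header (RFC 3550, section 5.1)."""
    if len(packet) < 12:
        raise ValueError("RTP packet shorter than the 12-byte fixed header")
    # Network byte order: two flag bytes, 16-bit sequence number,
    # 32-bit timestamp, 32-bit synchronization source (SSRC) identifier.
    b0, b1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    csrc_count = b0 & 0x0F
    header_len = 12 + 4 * csrc_count  # each CSRC entry is 4 bytes
    return {
        "version": b0 >> 6,
        "padding": bool(b0 & 0x20),
        "extension": bool(b0 & 0x10),
        "csrc_count": csrc_count,
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence_number": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
        # A header extension, if present, is NOT stripped in this sketch.
        "payload": packet[header_len:],
    }


# Hypothetical packet: version 2, dynamic payload type 96, sequence number 1.
sample = bytes([0x80, 0x60]) + struct.pack("!HII", 1, 160, 0x12345678) + b"media"
header = parse_rtp_header(sample)
```

The sequence number and timestamp are the fields a receiver would typically use to reorder packets and schedule playout.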

In telecommunications and computer networking, a network packet is a formatted unit of data carried by a packet-switched network. A packet consists of control information and user data; the latter is also known as the payload. Control information provides data for delivering the payload. Typically, control information is found in packet headers and trailers.
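
The split between control information and payload can be sketched with a toy packet layout. The format below (a 6-byte header holding source, destination and payload length, plus a 4-byte CRC-32 trailer) is entirely hypothetical and does not correspond to any real protocol; it only shows where header, payload and trailer sit.

```python
import struct
import zlib

# Hypothetical layout, for illustration only.
HEADER = struct.Struct("!HHH")  # source, destination, payload length
TRAILER = struct.Struct("!I")   # CRC-32 over header + payload


def build_packet(src: int, dst: int, payload: bytes) -> bytes:
    """Wrap a payload in a header and a checksum trailer."""
    header = HEADER.pack(src, dst, len(payload))
    trailer = TRAILER.pack(zlib.crc32(header + payload))
    return header + payload + trailer


def parse_packet(packet: bytes):
    """Return (src, dst, payload), verifying the trailer checksum."""
    src, dst, length = HEADER.unpack_from(packet, 0)
    body_end = HEADER.size + length
    payload = packet[HEADER.size:body_end]
    (crc,) = TRAILER.unpack_from(packet, body_end)
    if crc != zlib.crc32(packet[:body_end]):
        raise ValueError("checksum mismatch")
    return src, dst, payload


packet = build_packet(1, 2, b"hello")
```

Here the header tells the network where to deliver the data and how long it is, while the trailer lets the receiver detect corruption.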

MPEG-1 Audio Layer II or MPEG-2 Audio Layer II is a lossy audio compression format defined by ISO/IEC 11172-3 alongside MPEG-1 Audio Layer I and MPEG-1 Audio Layer III (MP3). While MP3 is much more popular for PC and Internet applications, MP2 remains a dominant standard for audio broadcasting.

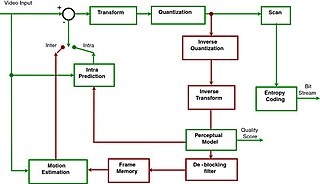

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, is a video compression standard based on block-oriented, motion-compensated coding. It is by far the most commonly used format for the recording, compression, and distribution of video content, used by 91% of video industry developers as of September 2019. It supports a maximum resolution of 8K UHD.

Serial digital interface (SDI) is a family of digital video interfaces first standardized by SMPTE in 1989. For example, ITU-R BT.656 and SMPTE 259M define digital video interfaces used for broadcast-grade video. A related standard, known as high-definition serial digital interface (HD-SDI), is standardized in SMPTE 292M; this provides a nominal data rate of 1.485 Gbit/s.
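
The 1.485 Gbit/s figure can be reproduced with a short calculation. The raster numbers below are an assumption not stated above: they describe the common 1080-line, 30 frames-per-second format, whose total raster (including blanking) is 2200 samples per line by 1125 lines, with each sample period carrying one 10-bit luma word and one 10-bit chroma word.

```python
# Assumed raster for 1080-line video at 30 frames per second,
# counting blanking intervals as well as active picture.
SAMPLES_PER_LINE = 2200
LINES_PER_FRAME = 1125
FRAMES_PER_SECOND = 30

BITS_PER_WORD = 10
WORDS_PER_SAMPLE_PERIOD = 2  # one luma word plus one multiplexed chroma word

sample_rate_hz = SAMPLES_PER_LINE * LINES_PER_FRAME * FRAMES_PER_SECOND
bit_rate = sample_rate_hz * BITS_PER_WORD * WORDS_PER_SAMPLE_PERIOD

print(f"sample rate: {sample_rate_hz / 1e6:.2f} MHz")  # 74.25 MHz
print(f"bit rate:    {bit_rate / 1e9:.3f} Gbit/s")     # 1.485 Gbit/s
```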

MPEG transport stream or simply transport stream (TS) is a standard digital container format for transmission and storage of audio, video, and Program and System Information Protocol (PSIP) data. It is used in broadcast systems such as DVB, ATSC and IPTV.
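
A transport stream is carried as fixed-size 188-byte packets, each beginning with the sync byte 0x47 and a 13-bit packet identifier (PID). The following minimal sketch decodes the 4-byte packet header; the function name `parse_ts_header` is hypothetical, and adaptation fields and payload are not interpreted.

```python
TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def parse_ts_header(packet: bytes) -> dict:
    """Decode the 4-byte header of one MPEG transport stream packet."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError("a transport stream packet is exactly 188 bytes")
    if packet[0] != SYNC_BYTE:
        raise ValueError("missing 0x47 sync byte")
    return {
        "transport_error": bool(packet[1] & 0x80),
        "payload_unit_start": bool(packet[1] & 0x40),
        "transport_priority": bool(packet[1] & 0x20),
        # The PID spans the low 5 bits of byte 1 and all of byte 2.
        "pid": ((packet[1] & 0x1F) << 8) | packet[2],
        "scrambling_control": packet[3] >> 6,
        "adaptation_field_control": (packet[3] >> 4) & 0x03,
        "continuity_counter": packet[3] & 0x0F,
    }


# A null (stuffing) packet uses the reserved PID 0x1FFF.
null_packet = bytes([0x47, 0x1F, 0xFF, 0x10]) + bytes([0xFF]) * 184
info = parse_ts_header(null_packet)
```

A demultiplexer would use the PID to route each packet to the right audio, video, or table decoder.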

A container format or metafile is a file format that allows multiple data streams to be embedded into a single file, usually along with metadata for identifying and further detailing those streams. Notable examples of container formats include archive files and formats used for multimedia playback. Among the earliest cross-platform container formats were Distinguished Encoding Rules and the 1985 Interchange File Format.

Dolby Digital Plus, also known as Enhanced AC-3, is a digital audio compression scheme developed by Dolby Labs for the transport and storage of multi-channel digital audio. It is a successor to Dolby Digital (AC-3), and has a number of improvements over that codec, including support for a wider range of data rates, an increased channel count, and multi-program support, as well as additional tools (algorithms) for representing compressed data and counteracting artifacts. Whereas Dolby Digital (AC-3) supports up to five full-bandwidth audio channels at a maximum bitrate of 640 kbit/s, E-AC-3 supports up to 15 full-bandwidth audio channels at a maximum bitrate of 6.144 Mbit/s.

Flash Video is a container file format used to deliver digital video content over the Internet using Adobe Flash Player version 6 and newer. Flash Video content may also be embedded within SWF files. There are two different Flash Video file formats: FLV and F4V. The audio and video data within FLV files are encoded in the same way as they are within SWF files. The F4V file format, supported starting with Flash Player 9 update 3, is based on the ISO base media file format. Both formats are supported in Adobe Flash Player and developed by Adobe Systems; FLV was originally developed by Macromedia. In the early 2000s, Flash Video was the de facto standard for web-based streaming video. Users have included Hulu, VEVO, Yahoo! Video, metacafe, Reuters.com, and many other news providers.

Real-Time Messaging Protocol (RTMP) is a communication protocol for streaming audio, video, and data over the Internet. It was originally developed as a proprietary protocol by Macromedia for streaming between Flash Player and the Flash Communication Server; Adobe has since released an incomplete version of the protocol specification for public use.

Asynchronous Serial Interface, or ASI, is a method of carrying an MPEG Transport Stream (MPEG-TS) over 75-ohm copper coaxial cable or optical fiber. It is popular in the television industry as a means of transporting broadcast programs from the studio to the final transmission equipment before they reach viewers at home.

Packetized Elementary Stream (PES) is a specification in MPEG-2 Part 1 (Systems) and ITU-T H.222.0 that defines how elementary streams are carried in packets within MPEG program streams and MPEG transport streams. The elementary stream is packetized by splitting it into sequential runs of data bytes and prefixing each run with a PES packet header.
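
The packetization step can be sketched as follows. This is a simplified illustration rather than a complete multiplexer: the function name `packetize`, the chunk size, and the default stream ID 0xC0 (an audio stream) are choices made for this example, and the optional header carries no timestamps or other flags.

```python
PES_START_CODE_PREFIX = b"\x00\x00\x01"
# Minimal optional header: marker bits '10', no flags set,
# and zero bytes of additional header data.
MINIMAL_OPTIONAL_HEADER = bytes([0x80, 0x00, 0x00])


def packetize(es: bytes, stream_id: int = 0xC0, chunk_size: int = 1024) -> list:
    """Split an elementary stream into PES packets with minimal headers."""
    if not 0 < chunk_size <= 0xFFFF - len(MINIMAL_OPTIONAL_HEADER):
        raise ValueError("chunk does not fit the 16-bit PES_packet_length field")
    packets = []
    for offset in range(0, len(es), chunk_size):
        chunk = es[offset:offset + chunk_size]
        # PES_packet_length counts every byte that follows the length field.
        length = len(MINIMAL_OPTIONAL_HEADER) + len(chunk)
        header = (PES_START_CODE_PREFIX
                  + bytes([stream_id])
                  + length.to_bytes(2, "big"))
        packets.append(header + MINIMAL_OPTIONAL_HEADER + chunk)
    return packets


elementary_stream = b"\xAA" * 2500  # placeholder data, not real audio
pes_packets = packetize(elementary_stream)
```

A real encoder would normally also set presentation and decoding timestamps in the optional header so that audio and video can be synchronized.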

Program stream (PS) is a container format for multiplexing digital audio, video and more. The PS format is specified in MPEG-1 Part 1 and MPEG-2 Part 1, Systems. The MPEG-2 program stream is analogous to the ISO/IEC 11172 (MPEG-1) Systems layer and is forward compatible with it.

CRI ADX is a proprietary audio container and compression format developed by CRI Middleware specifically for use in video games; it is derived from ADPCM but with lossy compression. Its most notable feature is a looping function that has proved useful for background sounds in various games that have adopted the format, including many games for the Sega Dreamcast as well as some PlayStation 2, GameCube and Wii games. One of the first games to use ADX was Burning Rangers, on the Sega Saturn. Notably, the Sonic the Hedgehog series and the majority of Sega games for home video consoles and PCs have continued to use this format for sound and voice recordings since the Dreamcast generation. Jet Set Radio Future for the original Xbox also used this format.

The Network Abstraction Layer (NAL) is a part of the H.264/AVC and HEVC video coding standards. The main goal of the NAL is the provision of a "network-friendly" video representation addressing "conversational" and "non conversational" applications. NAL has achieved a significant improvement in application flexibility relative to prior video coding standards.
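
In H.264/AVC, each NAL unit begins with a one-byte header that identifies what the unit contains. The sketch below decodes that byte; the function name `parse_h264_nal_header` is hypothetical, the type table lists only a few common values, and HEVC is not covered because it uses a different, two-byte header.

```python
# A few common H.264 NAL unit types; the full table is larger.
H264_NAL_TYPES = {
    1: "non-IDR slice",
    5: "IDR slice",
    6: "SEI",
    7: "sequence parameter set",
    8: "picture parameter set",
    9: "access unit delimiter",
}


def parse_h264_nal_header(first_byte: int) -> dict:
    """Decode the one-byte H.264 NAL unit header."""
    if not 0 <= first_byte <= 0xFF:
        raise ValueError("expected a single byte value")
    nal_unit_type = first_byte & 0x1F
    return {
        "forbidden_zero_bit": first_byte >> 7,
        "nal_ref_idc": (first_byte >> 5) & 0x03,
        "nal_unit_type": nal_unit_type,
        "description": H264_NAL_TYPES.get(nal_unit_type, "other"),
    }


sps = parse_h264_nal_header(0x67)
idr = parse_h264_nal_header(0x65)
```

Because the type is visible in the first byte, a network element can, for example, prioritize parameter sets and IDR slices without decoding the video itself.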

SMPTE ST 2117-1, informally known as VC-6, is a video coding format.