Solving a homogeneous Diophantine equation is generally a very difficult problem, even in the simplest non-trivial case of three indeterminates (in the case of two indeterminates, the problem is equivalent to testing whether a rational number is the dth power of another rational number). A witness of the difficulty of the problem is Fermat's Last Theorem (for d > 2, the above equation has no solution in positive integers), which required more than three centuries of mathematicians' efforts before being proved.

For degrees higher than three, most known results are theorems asserting that there are no solutions (for example Fermat's Last Theorem) or that the number of solutions is finite (for example Faltings's theorem).

For degree three, there are general solving methods that work on almost all equations encountered in practice, but no algorithm is known that works for every cubic equation. [8]

Degree two

Homogeneous Diophantine equations of degree two are easier to solve. The standard solving method proceeds in two steps. One has first to find one solution, or to prove that there is no solution. When a solution has been found, all solutions are then deduced.

For proving that there is no solution, one may reduce the equation modulo p. For example, the Diophantine equation

$$x^2 + y^2 = 3z^2$$

does not have any other solution than the trivial solution (0, 0, 0). In fact, by dividing x, y, and z by their greatest common divisor, one may suppose that they are coprime. The squares modulo 4 are congruent to 0 and 1. Thus the left-hand side of the equation is congruent to 0, 1, or 2, and the right-hand side is congruent to 0 or 3. Thus the equality may be obtained only if x, y, and z are all even, and are thus not coprime. Thus the only solution is the trivial solution (0, 0, 0). This shows that there is no rational point on a circle of radius $\sqrt{3}$, centered at the origin.
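The modulo 4 argument can be checked exhaustively. The following is a minimal Python sketch, assuming the equation in question is x² + y² = 3z² (consistent with the residues and the radius discussed above); the function name `residues_solving_mod4` is hypothetical and chosen only for illustration.

```python
from itertools import product


def residues_solving_mod4():
    """Return all residue triples (x, y, z) mod 4 with x^2 + y^2 = 3 z^2 (mod 4)."""
    return [
        (x, y, z)
        for x, y, z in product(range(4), repeat=3)
        if (x * x + y * y - 3 * z * z) % 4 == 0
    ]


solutions = residues_solving_mod4()

# If every residue solution has x, y, and z all even, then no coprime
# integer triple can satisfy the equation.
all_even = all(x % 2 == 0 and y % 2 == 0 and z % 2 == 0 for x, y, z in solutions)
print(all_even)
```

The check only covers the residues modulo 4; the step from "all even" to "no non-trivial solution" is the coprimality argument given in the text.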

More generally, the Hasse principle allows deciding whether a homogeneous Diophantine equation of degree two has an integer solution, and computing a solution if one exists.

If a non-trivial integer solution is known, one may produce all other solutions in the following way.

Geometric interpretation

Let

$$Q(x_1, \ldots, x_n) = 0$$

be a homogeneous Diophantine equation, where $Q(x_1, \ldots, x_n)$ is a quadratic form (that is, a homogeneous polynomial of degree 2), with integer coefficients. The trivial solution is the solution where all $x_i$ are zero. If $(a_1, \ldots, a_n)$ is a non-trivial integer solution of this equation, then $(a_1, \ldots, a_n)$ are the homogeneous coordinates of a rational point of the hypersurface defined by Q. Conversely, if $\left(\frac{p_1}{q}, \ldots, \frac{p_n}{q}\right)$ are homogeneous coordinates of a rational point of this hypersurface, where $q, p_1, \ldots, p_n$ are integers, then $(p_1, \ldots, p_n)$ is an integer solution of the Diophantine equation. Moreover, the integer solutions that define a given rational point are all sequences of the form

$$\left(k\,\frac{p_1}{d}, \ldots, k\,\frac{p_n}{d}\right),$$

where k is any integer, and d is the greatest common divisor of the $p_i$.
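The passage from a rational point to the integer solutions that define it can be sketched in Python. This is only an illustration, assuming the point is given by rational homogeneous coordinates; the function names `primitive_integer_solution` and `integer_solutions` are hypothetical.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def primitive_integer_solution(point):
    """Clear the denominators of rational homogeneous coordinates,
    then divide by the gcd of the resulting integers."""
    coords = [Fraction(c) for c in point]
    # Common denominator: least common multiple of all denominators.
    q = reduce(lambda a, b: a * b // gcd(a, b), (c.denominator for c in coords), 1)
    p = [int(c * q) for c in coords]
    d = reduce(gcd, p)
    if d == 0:
        raise ValueError("all coordinates are zero: not a projective point")
    return [pi // d for pi in p]


def integer_solutions(point, ks):
    """Integer solutions k * (p_1/d, ..., p_n/d) for the given multipliers k."""
    base = primitive_integer_solution(point)
    return [[k * b for b in base] for k in ks]


# The rational point (3/5, 4/5) of the unit circle, in homogeneous coordinates.
print(primitive_integer_solution([Fraction(3, 5), Fraction(4, 5), 1]))
print(integer_solutions([Fraction(3, 5), Fraction(4, 5), 1], ks=(-1, 2)))
```

The sketch does not check that the point lies on any particular hypersurface; it only normalizes coordinates.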

It follows that solving the Diophantine equation $Q(x_1, \ldots, x_n) = 0$ is completely reduced to finding the rational points of the corresponding projective hypersurface.

Parameterization

Let now $A = (a_1, \ldots, a_n)$ be an integer solution of the equation $Q(x_1, \ldots, x_n) = 0.$ As Q is a polynomial of degree two, a line passing through A crosses the hypersurface at a single other point, which is rational if and only if the line is rational (that is, if the line is defined by rational parameters). This allows parameterizing the hypersurface by the lines passing through A, and the rational points are those that are obtained from rational lines, that is, those that correspond to rational values of the parameters.
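The "single other point" can be computed directly in homogeneous coordinates. The sketch below is an illustrative variant, not the affine procedure of the text: it assumes Q is given as a Python callable with exact integer arithmetic, and it uses the polarization identity 2B(A, V) = Q(A + V) − Q(A) − Q(V), where B is the symmetric bilinear form associated with Q. The names `second_intersection` and `pythagoras` are hypothetical.

```python
def second_intersection(Q, A, V):
    """Second point where the line through A and V meets the quadric Q = 0.

    A must satisfy Q(A) = 0. Points on the line are alpha*A + beta*V, and
    Q(alpha*A + beta*V) = beta * (2*alpha*B(A, V) + beta*Q(V)),
    so the second root is alpha = Q(V), beta = -2*B(A, V).
    """
    if Q(*A) != 0:
        raise ValueError("A is not a solution of Q = 0")
    q_v = Q(*V)
    # Polarization identity, simplified because Q(A) = 0.
    two_b = Q(*(a + v for a, v in zip(A, V))) - q_v
    # If the line is tangent at A, the result is a multiple of A itself;
    # if the line lies in the hypersurface, the result is the zero vector.
    return tuple(q_v * a - two_b * v for a, v in zip(A, V))


def pythagoras(x, y, z):
    """Example quadratic form x^2 + y^2 - z^2 (chosen here for illustration)."""
    return x * x + y * y - z * z


# Line through A = (-1, 0, 1) and V = (0, 1, 2).
print(second_intersection(pythagoras, (-1, 0, 1), (0, 1, 2)))
```

When A and V have integer coordinates, the returned coordinates are integers, which illustrates why a rational line yields a rational point.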

More precisely, one may proceed as follows.

By permuting the indices, one may suppose, without loss of generality, that $a_n \neq 0.$ Then one may pass to the affine case by considering the affine hypersurface defined by

$$q(x_1, \ldots, x_{n-1}) = Q(x_1, \ldots, x_{n-1}, 1),$$

which has the rational point

$$R = (r_1, \ldots, r_{n-1}) = \left(\frac{a_1}{a_n}, \ldots, \frac{a_{n-1}}{a_n}\right).$$

If this rational point is a singular point, that is, if all partial derivatives are zero at R, every line passing through R and another point of the hypersurface is contained in the hypersurface, and one has a cone. The change of variables

$$y_i = x_i - r_i$$

does not change the rational points, and transforms q into a homogeneous polynomial in n − 1 variables. In this case, the problem may thus be solved by applying the method to an equation with fewer variables.

If the polynomial q is a product of linear polynomials (possibly with non-rational coefficients), then it defines two hyperplanes. The intersection of these hyperplanes is a rational flat, and contains rational singular points. This case is thus a special instance of the preceding case.

In the general case, consider the parametric equation of a line passing through R:

$$\begin{aligned} x_2 &= r_2 + t_2(x_1 - r_1)\\ &\;\;\vdots\\ x_{n-1} &= r_{n-1} + t_{n-1}(x_1 - r_1). \end{aligned}$$

Substituting this in q, one gets a polynomial of degree two in $x_1$ that is zero for $x_1 = r_1$. It is thus divisible by $x_1 - r_1$. The quotient is linear in $x_1$, and may be solved for expressing $x_1$ as a quotient of two polynomials of degree at most two in $t_2, \ldots, t_{n-1}$, with integer coefficients:

$$x_1 = \frac{f_1(t_2, \ldots, t_{n-1})}{f_n(t_2, \ldots, t_{n-1})}.$$

Substituting this in the expressions for $x_2, \ldots, x_{n-1}$, one gets, for i = 1, …, n − 1,

$$x_i = \frac{f_i(t_2, \ldots, t_{n-1})}{f_n(t_2, \ldots, t_{n-1})},$$

where $f_1, \ldots, f_n$ are polynomials of degree at most two with integer coefficients.

Then, one can return to the homogeneous case. Let, for i = 1, …, n,

$$F_i(t_1, \ldots, t_{n-1}) = t_1^2\, f_i\!\left(\frac{t_2}{t_1}, \ldots, \frac{t_{n-1}}{t_1}\right)$$

be the homogenization of $f_i$. These quadratic polynomials with integer coefficients form a parameterization of the projective hypersurface defined by Q:

$$\begin{aligned} x_1 &= F_1(t_1, \ldots, t_{n-1})\\ &\;\;\vdots\\ x_n &= F_n(t_1, \ldots, t_{n-1}). \end{aligned}$$

A point of the projective hypersurface defined by Q is rational if and only if it may be obtained from rational values of $t_1, \ldots, t_{n-1}$. As $F_1, \ldots, F_n$ are homogeneous polynomials, the point is not changed if all $t_i$ are multiplied by the same rational number. Thus, one may suppose that $t_1, \ldots, t_{n-1}$ are coprime integers. It follows that the integer solutions of the Diophantine equation are exactly the sequences $(x_1, \ldots, x_n)$ where, for i = 1, ..., n,

$$x_i = k\,\frac{F_i(t_1, \ldots, t_{n-1})}{d},$$

where k is an integer, $t_1, \ldots, t_{n-1}$ are coprime integers, and d is the greatest common divisor of the n integers $F_i(t_1, \ldots, t_{n-1})$.

One could hope that the coprimality of the $t_i$ would imply that d = 1. Unfortunately, this is not the case, as shown in the next section.

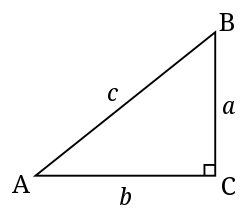

Example of Pythagorean triples

The equation

$$x^2 + y^2 - z^2 = 0$$

is probably the first homogeneous Diophantine equation of degree two that has been studied. Its solutions are the Pythagorean triples. This is also the homogeneous equation of the unit circle. In this section, we show how the above method allows retrieving Euclid's formula for generating Pythagorean triples.

For retrieving exactly Euclid's formula, we start from the solution (−1, 0, 1), corresponding to the point (−1, 0) of the unit circle. A line passing through this point may be parameterized by its slope:

$$y = t(x + 1).$$

Putting this in the circle equation

$$x^2 + y^2 - 1 = 0,$$

one gets

$$x^2 - 1 + t^2(x + 1)^2 = 0.$$

Dividing by x + 1 results in

$$x - 1 + t^2(x + 1) = 0,$$

which is easy to solve in x:

$$x = \frac{1 - t^2}{1 + t^2}.$$

It follows

$$y = t(x + 1) = \frac{2t}{1 + t^2}.$$

Homogenizing as described above, one gets all solutions as

$$\begin{aligned} x &= k\,\frac{s^2 - t^2}{d}\\ y &= k\,\frac{2st}{d}\\ z &= k\,\frac{s^2 + t^2}{d}, \end{aligned}$$

where k is any integer, s and t are coprime integers, and d is the greatest common divisor of the three numerators. In fact, d = 2 if s and t are both odd, and d = 1 if one is odd and the other is even.
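The behaviour of d can be observed numerically. The following Python sketch assumes the three numerators are s² − t², 2st, and s² + t², as obtained by homogenizing the slope parameterization; the function name `pythagorean_solution` is hypothetical.

```python
from math import gcd


def pythagorean_solution(s, t, k=1):
    """Return ((x, y, z), d) for coprime integers s, t and an integer k,
    where d is the gcd of the numerators s^2 - t^2, 2st, s^2 + t^2."""
    if gcd(s, t) != 1:
        raise ValueError("s and t must be coprime")
    numerators = (s * s - t * t, 2 * s * t, s * s + t * t)
    d = gcd(gcd(numerators[0], numerators[1]), numerators[2])
    # Each numerator is a multiple of d, so the integer division is exact.
    triple = tuple(k * n // d for n in numerators)
    return triple, d


print(pythagorean_solution(2, 1))  # one of s, t is even
print(pythagorean_solution(3, 1))  # s and t are both odd
```

For (s, t) = (2, 1) the numerators are 3, 4, 5 and d = 1; for (s, t) = (3, 1) they are 8, 6, 10 and d = 2, which gives the same triple up to the exchange of x and y.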

The primitive triples are the solutions where k = 1 and s > t > 0.

This description of the solutions differs slightly from Euclid's formula because Euclid's formula considers only the solutions such that x, y, and z are all positive, and does not distinguish between two triples that differ by the exchange of x and y,