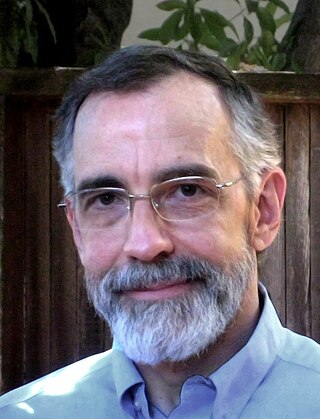

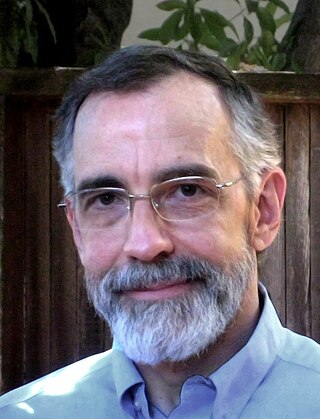

Kim Eric Drexler is an American engineer best known for introducing molecular nanotechnology (MNT) and for his studies of its potential in the 1970s and 1980s. His 1991 doctoral thesis at the Massachusetts Institute of Technology (MIT) was revised and published as the book Nanosystems: Molecular Machinery, Manufacturing, and Computation (1992), which received the Association of American Publishers award for Best Computer Science Book of 1992. He has been called the "godfather of nanotechnology".

Molecular nanotechnology (MNT) is a technology based on the ability to build structures to complex, atomic specifications by means of mechanosynthesis. This is distinct from nanoscale materials. Based on Richard Feynman's vision of miniature factories using nanomachines to build complex products, this advanced form of nanotechnology would make use of positionally controlled mechanosynthesis guided by molecular machine systems. MNT would involve combining physical principles demonstrated by biophysics, chemistry, other nanotechnologies, and the molecular machinery of life with the systems engineering principles found in modern macroscale factories.

Nanotechnology was defined by the National Nanotechnology Initiative as the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (nm). At this scale, commonly known as the nanoscale, surface area and quantum mechanical effects become important in describing properties of matter. The definition of nanotechnology is inclusive of all types of research and technologies that deal with these special properties. It is therefore common to see the plural form "nanotechnologies" as well as "nanoscale technologies" to refer to the broad range of research and applications whose common trait is size. An earlier description of nanotechnology referred to the particular technological goal of precisely manipulating atoms and molecules for fabrication of macroscale products, also now referred to as molecular nanotechnology.
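Why surface area matters more at the nanoscale can be seen from geometry alone. The sketch below assumes idealized spherical particles (an illustrative assumption, not part of the definition above) and computes the surface-area-to-volume ratio, which simplifies to 3/r = 6/d. It says nothing about quantum effects; it only shows how quickly the surface fraction grows as size shrinks.

```python
import math


def sphere_surface_to_volume(diameter_nm: float) -> float:
    """Surface-area-to-volume ratio (per nm) of an idealized sphere.

    Assumes a perfect sphere; real nanoparticles have facets and defects.
    """
    if diameter_nm <= 0:
        raise ValueError("diameter must be positive")
    r = diameter_nm / 2.0
    area = 4.0 * math.pi * r ** 2
    volume = (4.0 / 3.0) * math.pi * r ** 3
    return area / volume  # analytically equal to 3 / r, i.e. 6 / d


if __name__ == "__main__":
    # 1 to 100 nm spans the nanoscale; 1e6 nm (1 mm) stands in for a macroscale grain.
    for d in (1.0, 10.0, 100.0, 1e6):
        print(f"d = {d:>9.0f} nm -> SA/V = {sphere_surface_to_volume(d):.6f} per nm")
```

Because the ratio scales as 1/d, a 10 nm particle has 100,000 times the surface-to-volume ratio of a 1 mm grain of the same shape.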

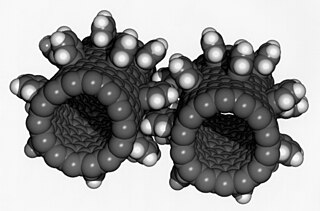

Gray goo is a hypothetical global catastrophic scenario involving molecular nanotechnology in which out-of-control self-replicating machines consume all biomass on Earth while building many more of themselves, a scenario that has been called ecophagy (the literal consumption of the ecosystem). The original idea assumed machines were designed to have this capability, while popularizations have assumed that machines might somehow gain this capability by accident.

Molecular engineering is an emerging field of study concerned with the design and testing of molecular properties, behavior and interactions in order to assemble better materials, systems, and processes for specific functions. This approach, in which observable properties of a macroscopic system are influenced by direct alteration of a molecular structure, falls into the broader category of “bottom-up” design.

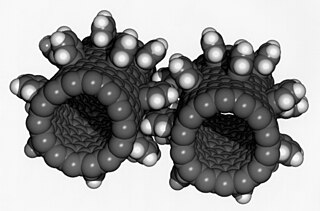

A molecular assembler, as defined by K. Eric Drexler, is a "proposed device able to guide chemical reactions by positioning reactive molecules with atomic precision". A molecular assembler is a kind of molecular machine. Some biological molecules, such as ribosomes, fit this definition because they receive instructions from messenger RNA and then assemble specific sequences of amino acids to construct protein molecules. However, the term "molecular assembler" usually refers to theoretical human-made devices.
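The "instructions in, specific sequence out" behavior of a ribosome can be illustrated with a toy translator. This is a minimal sketch of the information flow only: the codon table is a small, deliberately incomplete subset of the standard genetic code, the `translate` function is hypothetical, and none of the chemistry (tRNAs, peptide bond formation) is modeled.

```python
# Small, deliberately incomplete subset of the standard genetic code (illustration only).
CODON_TABLE = {
    "AUG": "Met", "UUU": "Phe", "UUC": "Phe", "GGC": "Gly",
    "GCU": "Ala", "AAA": "Lys", "UGG": "Trp", "GAA": "Glu",
}
STOP_CODONS = {"UAA", "UAG", "UGA"}


def translate(mrna: str) -> list[str]:
    """Read codons from the first AUG start codon until a stop codon.

    Returns amino acid names in order; raises ValueError for codons
    missing from the illustrative subset above.
    """
    seq = mrna.upper()
    start = seq.find("AUG")
    if start == -1:
        return []  # no start codon, so nothing is assembled
    peptide = []
    # Step through complete three-base codons only.
    for i in range(start, len(seq) - 2, 3):
        codon = seq[i:i + 3]
        if codon in STOP_CODONS:
            break
        if codon not in CODON_TABLE:
            raise ValueError(f"codon {codon!r} not in this illustrative subset")
        peptide.append(CODON_TABLE[codon])
    return peptide


if __name__ == "__main__":
    print(translate("GGAUGUUUGGCAAAUAAGC"))
```

The same instruction string always yields the same amino acid sequence, which is the property that makes the ribosome a natural example of a positionally precise assembler.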

Computer simulation is the process of mathematical modeling, performed on a computer, to predict the behavior or outcome of a real-world or physical system. The reliability of some mathematical models can be determined by comparing their results to the real-world outcomes they aim to predict. Computer simulations have become a useful tool for the mathematical modeling of many natural systems in physics, astrophysics, climatology, chemistry, biology and manufacturing, as well as human systems in economics, psychology, social science, health care and engineering. Simulating a system means running the system's model. Simulation can be used to explore and gain new insight into new technology and to estimate the performance of systems too complex for analytical solutions.
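Running a model and checking it against a known outcome can be sketched in a few lines. The example below assumes a simple exponential-decay model, dN/dt = -kN, stepped forward with the explicit Euler method; the exact solution N0·e^(-kt) stands in for the "real-world outcome" so the simulation's reliability can be measured. The parameter values are hypothetical.

```python
import math


def simulate_decay(n0: float, k: float, t_end: float, dt: float) -> float:
    """Explicit-Euler simulation of dN/dt = -k * N; returns N at t_end.

    Assumes t_end is (close to) an integer multiple of dt.
    """
    steps = round(t_end / dt)
    n = n0
    for _ in range(steps):
        n += -k * n * dt  # one Euler step of the model
    return n


def exact_decay(n0: float, k: float, t: float) -> float:
    """Closed-form solution used as the reference outcome."""
    return n0 * math.exp(-k * t)


if __name__ == "__main__":
    n0, k, t_end = 1000.0, 0.5, 4.0  # hypothetical parameters
    exact = exact_decay(n0, k, t_end)
    for dt in (0.5, 0.1, 0.01):
        sim = simulate_decay(n0, k, t_end, dt)
        rel_err = abs(sim - exact) / exact
        print(f"dt={dt:<5} simulated={sim:9.3f} exact={exact:9.3f} rel. error={rel_err:.2%}")
```

Shrinking the step size brings the simulated value closer to the reference, which is the kind of comparison used to judge whether a model can be trusted for cases that lack an analytical solution.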

Megascale engineering is a form of exploratory engineering concerned with the construction of structures on an enormous scale. Typically these structures are at least 1,000 km (620 mi) in length—in other words, at least one megameter, hence the name. Such large-scale structures are termed megastructures.

Computational science, also known as scientific computing, technical computing or scientific computation (SC), is a division of science that uses advanced computing capabilities to understand and solve complex physical problems. This includes

The following outline is provided as an overview of and topical guide to technology:

The history of nanotechnology traces the development of the concepts and experimental work falling under the broad category of nanotechnology. Although nanotechnology is a relatively recent development in scientific research, its central concepts developed over a longer period of time. Nanotechnology emerged in the 1980s from the convergence of experimental advances, such as the invention of the scanning tunneling microscope in 1981 and the discovery of fullerenes in 1985, with the elucidation and popularization of a conceptual framework for the goals of nanotechnology, beginning with the 1986 publication of the book Engines of Creation. The field drew growing public awareness and controversy in the early 2000s, with prominent debates about both its potential implications and the feasibility of the applications envisioned by advocates of molecular nanotechnology, and with governments moving to promote and fund research into nanotechnology. The early 2000s also saw the beginnings of commercial applications of nanotechnology, although these were limited to bulk applications of nanomaterials rather than the transformative applications envisioned by the field.

The following outline is provided as an overview of and topical guide to nanotechnology:

The societal impacts of nanotechnology are the potential benefits and challenges that the introduction of novel nanotechnological devices and materials may hold for society and human interaction. The term is sometimes expanded to also include nanotechnology's health and environmental impact, but this article will only consider the social and political impact of nanotechnology.

Modeling and simulation (M&S) is the use of models as a basis for simulations to develop data utilized for managerial or technical decision making.

Wet nanotechnology involves working up to large masses from small ones.

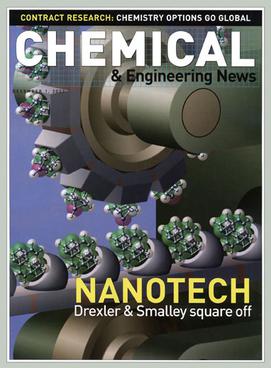

The Drexler–Smalley debate on molecular nanotechnology was a public dispute between K. Eric Drexler, the originator of the conceptual basis of molecular nanotechnology, and Richard Smalley, a recipient of the 1996 Nobel Prize in Chemistry for the discovery of the nanomaterial buckminsterfullerene. The dispute was about the feasibility of constructing molecular assemblers, molecular machines that could robotically assemble molecular materials and devices by manipulating individual atoms or molecules. The concept of molecular assemblers was central to Drexler's conception of molecular nanotechnology, but Smalley argued that fundamental physical principles would prevent them from ever being possible. The two also traded accusations that the other's conception of nanotechnology was harmful to public perception of the field and threatened continued public support for nanotechnology research.

Alejandro Strachan is a scientist in the field of computational materials and the Reilly Professor of Materials Engineering at Purdue University. Before joining Purdue University, he was a staff member at Los Alamos National Laboratory.

Andres Jaramillo-Botero is a Colombian-American scientist and professor, working in nanoscale chemical physics, known for his contributions to first-principles based modeling, design, synthesis and characterization of nanostructured materials and devices.

This glossary of nanotechnology is a list of definitions of terms and concepts relevant to nanotechnology, its sub-disciplines, and related fields.