Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions. Understanding in this context means the transformation of visual images into descriptions of world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

Optical character recognition or optical character reader (OCR) is the electronic or mechanical conversion of images of typed, handwritten or printed text into machine-encoded text, whether from a scanned document, a photo of a document, a scene photo or from subtitle text superimposed on an image.

In computer vision or natural language processing, document layout analysis is the process of identifying and categorizing the regions of interest in the scanned image of a text document. A reading system requires the segmentation of text zones from non-textual ones and the arrangement in their correct reading order. Detection and labeling of the different zones as text body, illustrations, math symbols, and tables embedded in a document is called geometric layout analysis. But text zones play different logical roles inside the document and this kind of semantic labeling is the scope of the logical layout analysis.

Document processing is a field of research and a set of production processes aimed at making an analog document digital. Document processing does not simply aim to photograph or scan a document to obtain a digital image, but also to make it digitally intelligible. This includes extracting the structure of the document or the layout and then the content, which can take the form of text or images. The process can involve traditional computer vision algorithms, convolutional neural networks or manual labor. The problems addressed are related to semantic segmentation, object detection, optical character recognition (OCR), handwritten text recognition (HTR) and, more broadly, transcription, whether automatic or not. The term can also include the phase of digitizing the document using a scanner and the phase of interpreting the document, for example using natural language processing (NLP) or image classification technologies. It is applied in many industrial and scientific fields for the optimization of administrative processes, mail processing and the digitization of analog archives and historical documents.

3D scanning is the process of analyzing a real-world object or environment to collect three dimensional data of its shape and possibly its appearance. The collected data can then be used to construct digital 3D models.

Optical music recognition (OMR) is a field of research that investigates how to computationally read musical notation in documents. The goal of OMR is to teach the computer to read and interpret sheet music and produce a machine-readable version of the written music score. Once captured digitally, the music can be saved in commonly used file formats, e.g. MIDI and MusicXML . In the past it has, misleadingly, also been called "music optical character recognition". Due to significant differences, this term should no longer be used.

Structure from motion (SfM) is a photogrammetric range imaging technique for estimating three-dimensional structures from two-dimensional image sequences that may be coupled with local motion signals. It is studied in the fields of computer vision and visual perception.

OCRopus is a free document analysis and optical character recognition (OCR) system released under the Apache License v2.0 with a very modular design using command-line interfaces.

In computer vision, the bag-of-words model sometimes called bag-of-visual-words model can be applied to image classification or retrieval, by treating image features as words. In document classification, a bag of words is a sparse vector of occurrence counts of words; that is, a sparse histogram over the vocabulary. In computer vision, a bag of visual words is a vector of occurrence counts of a vocabulary of local image features.

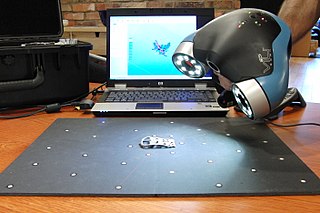

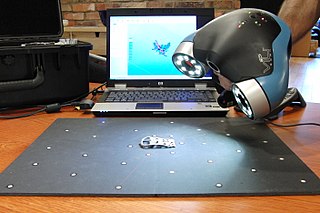

An area of computer vision is active vision, sometimes also called active computer vision. An active vision system is one that can manipulate the viewpoint of the camera(s) in order to investigate the environment and get better information from it.

In robotics and computer vision, visual odometry is the process of determining the position and orientation of a robot by analyzing the associated camera images. It has been used in a wide variety of robotic applications, such as on the Mars Exploration Rovers.

In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models, but typically allows for much more flexible structure to exist among those alternatives.

Forms processing is a process by which one can capture information entered into data fields and convert it into an electronic format. This can be done manually or automatically, but the general process is that hard copy data is filled out by humans and then "captured" from their respective fields and entered into a database or other electronic format.

Computer security compromised by hardware failure is a branch of computer security applied to hardware. The objective of computer security includes protection of information and property from theft, corruption, or natural disaster, while allowing the information and property to remain accessible and productive to its intended users. Such secret information could be retrieved by different ways. This article focus on the retrieval of data thanks to misused hardware or hardware failure. Hardware could be misused or exploited to get secret data. This article collects main types of attack that can lead to data theft.

The Medical Intelligence and Language Engineering Laboratory, also known as MILE lab, is a research laboratory at the Indian Institute of Science, Bangalore under the Department of Electrical Engineering. The lab is known for its work on Image processing, online handwriting recognition, Text-To-Speech and Optical character recognition systems, all of which are focused mainly on documents and speech in Indian languages. The lab is headed by A. G. Ramakrishnan.

The MNIST database is a large database of handwritten digits that is commonly used for training various image processing systems. The database is also widely used for training and testing in the field of machine learning. It was created by "re-mixing" the samples from NIST's original datasets. The creators felt that since NIST's training dataset was taken from American Census Bureau employees, while the testing dataset was taken from American high school students, it was not well-suited for machine learning experiments. Furthermore, the black and white images from NIST were normalized to fit into a 28x28 pixel bounding box and anti-aliased, which introduced grayscale levels.

In computer vision, rigid motion segmentation is the process of separating regions, features, or trajectories from a video sequence into coherent subsets of space and time. These subsets correspond to independent rigidly moving objects in the scene. The goal of this segmentation is to differentiate and extract the meaningful rigid motion from the background and analyze it. Image segmentation techniques labels the pixels to be a part of pixels with certain characteristics at a particular time. Here, the pixels are segmented depending on its relative movement over a period of time i.e. the time of the video sequence.

Emotion recognition is the process of identifying human emotion. People vary widely in their accuracy at recognizing the emotions of others. Use of technology to help people with emotion recognition is a relatively nascent research area. Generally, the technology works best if it uses multiple modalities in context. To date, the most work has been conducted on automating the recognition of facial expressions from video, spoken expressions from audio, written expressions from text, and physiology as measured by wearables.

Scene text is text that appears in an image captured by a camera in an outdoor environment.

A copy detection pattern (CDP) or graphical code is a small random or pseudo-random digital image which is printed on documents, labels or products for counterfeit detection. Authentication is made by scanning the printed CDP using an image scanner or mobile phone camera. It is possible to store additional product-specific data into the CDP that will be decoded during the scanning process. A CDP can also be inserted into a 2D barcode to facilitate smartphone authentication and to connect with traceability data.