Speech coding is an application of data compression to digital audio signals containing speech. It uses audio signal processing techniques to estimate speech-specific parameters that model the speech signal, and combines them with generic data compression algorithms to represent those parameters in a compact bitstream.

Windows Media Audio (WMA) is a series of audio codecs and their corresponding audio coding formats developed by Microsoft. It is a proprietary technology that forms part of the Windows Media framework. WMA consists of four distinct codecs. The original WMA codec, known simply as WMA, was conceived as a competitor to the popular MP3 and RealAudio codecs. WMA Pro, a newer and more advanced codec, supports multichannel and high-resolution audio. A lossless codec, WMA Lossless, compresses audio data without loss of audio fidelity. WMA Voice, targeted at voice content, applies compression using a range of low bit rates. Microsoft has also developed a digital container format called Advanced Systems Format to store audio encoded by WMA.

Adaptive Transform Acoustic Coding (ATRAC) is a family of proprietary audio compression algorithms developed by Sony. MiniDisc was the first commercial product to incorporate ATRAC, in 1992. ATRAC allowed a relatively small disc like MiniDisc to have the same running time as CD while storing audio information with minimal perceptible loss in quality. Improvements to the codec in the form of ATRAC3, ATRAC3plus, and ATRAC Advanced Lossless followed in 1999, 2002, and 2006 respectively.

Speex is a free software audio compression codec tuned specifically for the reproduction of human speech. It may be used in voice over IP applications and podcasts. It is based on the code-excited linear prediction (CELP) speech coding algorithm. Its creators claim that Speex is free of any patent restrictions, and it is licensed under the revised (3-clause) BSD license. It may be used with the Ogg container format, transmitted directly over UDP/RTP, or used with the FLV container format.

G.711 is a narrowband audio codec originally designed for use in telephony that provides toll-quality audio at 64 kbit/s. It is an ITU-T standard (Recommendation) for audio encoding, titled "Pulse code modulation (PCM) of voice frequencies", and was released for use in 1972.
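The 64 kbit/s figure follows from sampling narrowband speech at 8 kHz and spending 8 bits on each sample. The sketch below illustrates that arithmetic together with logarithmic companding in the μ-law style. It is a minimal illustration that uses the continuous μ-law formula, not the bit-exact segmented tables of the Recommendation, and the function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 8000   # narrowband telephony sampling rate
BITS_PER_SAMPLE = 8     # one companded code word per sample
BIT_RATE = SAMPLE_RATE_HZ * BITS_PER_SAMPLE  # bits per second

MU = 255  # mu-law companding parameter


def compress(x: float) -> float:
    """Map a sample in [-1, 1] to [-1, 1] on a logarithmic scale."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def expand(y: float) -> float:
    """Inverse of compress()."""
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)


def encode(x: float) -> int:
    """Quantize a sample in [-1, 1] to an 8-bit code (illustrative layout)."""
    return int(round((compress(x) + 1) / 2 * 255))


def decode(code: int) -> float:
    """Recover an approximate sample from an 8-bit code."""
    return expand(code / 255 * 2 - 1)
```

Because the codes are spaced logarithmically, quiet samples are quantized more finely than loud ones, which suits the wide dynamic range of speech.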

Musepack or MPC is an open source lossy audio codec, specifically optimized for transparent compression of stereo audio at bitrates of 160–180 kbit/s. It was formerly known as MPEGplus, MPEG+ or MP+.

G.722 is an ITU-T standard 7 kHz wideband audio codec operating at 48, 56 and 64 kbit/s. It was approved by ITU-T in November 1988. The codec is based on sub-band ADPCM (SB-ADPCM). The corresponding narrowband codec based on the same technology is G.726.

Variable-Rate Multimode Wideband (VMR-WB) is a source-controlled variable-rate multimode codec designed for robust encoding and decoding of wideband and narrowband speech. The operation of VMR-WB is controlled by the characteristics of the speech signal and by the traffic conditions of the network. Depending on the traffic conditions and the desired quality of service (QoS), one of four operational modes is used. All operating modes of the existing VMR-WB standard are fully compliant with cdma2000 rate-set II. VMR-WB modes 0, 1, and 2 are cdma2000 native modes, with mode 0 providing the highest quality and mode 2 the lowest average data rate (ADR). VMR-WB mode 3 is the AMR-WB interoperable mode. It operates at an ADR slightly higher than that of mode 0 and provides quality equal to or better than that of AMR-WB at 12.65 kbit/s when interconnected with AMR-WB at 12.65 kbit/s.

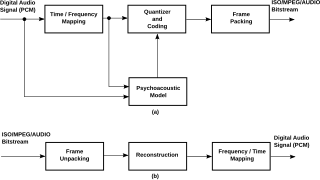

High-Efficiency Advanced Audio Coding (HE-AAC) is an audio coding format for lossy data compression of digital audio defined as an MPEG-4 Audio profile in ISO/IEC 14496-3. It is an extension of Low Complexity AAC (AAC-LC) optimized for low-bitrate applications such as streaming audio. The usage profile HE-AAC v1 uses spectral band replication (SBR) to enhance the modified discrete cosine transform (MDCT) compression efficiency in the frequency domain. The usage profile HE-AAC v2 couples SBR with Parametric Stereo (PS) to further enhance the compression efficiency of stereo signals.

A video codec is software or a device that provides encoding and decoding for digital video, and which may or may not include the use of video compression and/or decompression. Most codecs are implementations of video coding formats.

Parametric stereo is an audio compression algorithm used as an audio coding format for digital audio. It is considered an Audio Object Type of MPEG-4 Part 3 that serves to enhance the coding efficiency of low bandwidth stereo audio media. Parametric Stereo digitally codes a stereo audio signal by storing the audio as monaural alongside a small amount of extra information. This extra information describes how the monaural signal will behave across both stereo channels, which allows for the signal to exist in true stereo upon playback.
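The idea of a monaural downmix plus a small amount of side information can be sketched as follows. This is a heavily simplified illustration and not the MPEG-4 tool itself: it assumes a single pair of level gains per frame, with no frequency bands, phase, or coherence parameters. The function names and the frame length are hypothetical.

```python
import math


def ps_encode(left, right, frame=4):
    """Return a mono downmix plus one (left_gain, right_gain) pair per frame."""
    mono, params = [], []
    for start in range(0, len(left), frame):
        l = left[start:start + frame]
        r = right[start:start + frame]
        m = [(a + b) / 2 for a, b in zip(l, r)]
        energy_m = sum(v * v for v in m)
        if energy_m == 0:
            gains = (0.0, 0.0)  # silent or fully cancelled frame
        else:
            gains = (
                math.sqrt(sum(a * a for a in l) / energy_m),
                math.sqrt(sum(b * b for b in r) / energy_m),
            )
        mono.extend(m)
        params.append(gains)
    return mono, params


def ps_decode(mono, params, frame=4):
    """Rebuild two channels by scaling the mono signal with the stored gains."""
    left, right = [], []
    for k, (gain_l, gain_r) in enumerate(params):
        chunk = mono[k * frame:(k + 1) * frame]
        left.extend(gain_l * v for v in chunk)
        right.extend(gain_r * v for v in chunk)
    return left, right


# Usage: a source panned mostly to the left channel.
source = [1.0, -2.0, 3.0, 0.5, -1.5, 2.5, 0.25, -0.75]
left_in = [0.8 * s for s in source]
right_in = [0.2 * s for s in source]
mono_sig, side_info = ps_encode(left_in, right_in)
left_out, right_out = ps_decode(mono_sig, side_info)
```

For a source that is simply panned between the channels, the two gains are enough to restore the original balance. Channels that differ in more than level are only approximated by this sketch.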

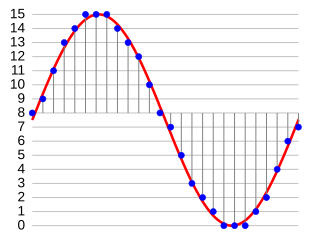

Adaptive differential pulse-code modulation (ADPCM) is a variant of differential pulse-code modulation (DPCM) that varies the size of the quantization step, to allow further reduction of the required data bandwidth for a given signal-to-noise ratio.
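A minimal sketch of the idea, assuming a trivial predictor (the previous reconstructed sample) and a simple multiplicative step-size rule. The growth and shrink factors, the step limits, and the function names are illustrative assumptions and do not correspond to any standardized ADPCM variant.

```python
import math


def _update(predicted, step, code, levels):
    """State update shared by encoder and decoder so that both stay in sync."""
    predicted += code * step
    if abs(code) >= levels // 2:
        step = min(step * 1.5, 2048.0)  # large codes: signal moves fast, widen step
    else:
        step = max(step * 0.9, 1.0)     # small codes: narrow step for finer detail
    return predicted, step


def adpcm_encode(samples, bits=4, step=16.0):
    """Encode each sample as a small signed code of the prediction error."""
    levels = 2 ** (bits - 1)  # codes lie in [-levels, levels - 1]
    predicted, codes = 0.0, []
    for sample in samples:
        code = int(round((sample - predicted) / step))
        code = max(-levels, min(levels - 1, code))
        codes.append(code)
        predicted, step = _update(predicted, step, code, levels)
    return codes


def adpcm_decode(codes, bits=4, step=16.0):
    """Rebuild the signal by replaying the same state updates."""
    levels = 2 ** (bits - 1)
    predicted, out = 0.0, []
    for code in codes:
        predicted, step = _update(predicted, step, code, levels)
        out.append(predicted)
    return out


# Usage: a sine wave with amplitude 1000 coded with 4 bits per sample.
samples = [1000 * math.sin(2 * math.pi * i / 50) for i in range(200)]
codes = adpcm_encode(samples)
decoded = adpcm_decode(codes)
```

Only the codes are transmitted. The decoder derives the step size from the codes it has already received, so no extra side information is needed for the adaptation.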

Constrained Energy Lapped Transform (CELT) is an open, royalty-free lossy audio compression format and a free software codec with especially low algorithmic delay for use in low-latency audio communication. The algorithms are openly documented and may be used free of software patent restrictions. Development of the format was maintained by the Xiph.Org Foundation and later coordinated by the Opus working group of the Internet Engineering Task Force (IETF).

aptX is a family of proprietary audio codec compression algorithms owned by Qualcomm, with a heavy emphasis on wireless audio applications.

In signal processing, sub-band coding (SBC) is any form of transform coding that breaks a signal into a number of different frequency bands, typically by using a fast Fourier transform, and encodes each one independently. This decomposition is often the first step in data compression for audio and video signals.
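The decomposition can be illustrated with the smallest possible case: two bands. The sketch below substitutes a Haar-style sum and difference filter pair for the Fourier transform mentioned above, purely to keep the example short. The step sizes and function names are assumptions made for illustration, and the input is assumed to have an even number of samples.

```python
def analyze(x):
    """Split a signal into a low band (pair averages) and a high band (pair differences)."""
    low = [(x[i] + x[i + 1]) / 2 for i in range(0, len(x) - 1, 2)]
    high = [(x[i] - x[i + 1]) / 2 for i in range(0, len(x) - 1, 2)]
    return low, high


def synthesize(low, high):
    """Invert analyze(): each (low, high) pair yields two output samples."""
    out = []
    for l, h in zip(low, high):
        out.extend((l + h, l - h))
    return out


def quantize(band, step):
    """Uniform quantizer: one integer code per band sample."""
    return [round(v / step) for v in band]


def dequantize(codes, step):
    return [c * step for c in codes]


def sbc_roundtrip(x, low_step=1.0, high_step=8.0):
    """Encode each band independently, with a coarser step for the high band."""
    low, high = analyze(x)
    low_hat = dequantize(quantize(low, low_step), low_step)
    high_hat = dequantize(quantize(high, high_step), high_step)
    return synthesize(low_hat, high_hat)


# Usage
signal = [10, 12, 20, 18, 7, 7, 0, 4]
restored = sbc_roundtrip(signal)
```

Coding the bands separately lets an encoder spend fewer bits on bands where errors matter less, which is modeled here by the larger step size for the high band.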

Opus is a lossy audio coding format developed by the Xiph.Org Foundation and standardized by the Internet Engineering Task Force, designed to efficiently code speech and general audio in a single format, while remaining low-latency enough for real-time interactive communication and low-complexity enough for low-end embedded processors. Opus replaces both Vorbis and Speex for new applications, and several blind listening tests have ranked it higher-quality than any other standard audio format at any given bitrate until transparency is reached, including MP3, AAC, and HE-AAC.

Unified Speech and Audio Coding (USAC) is an audio compression format and codec for music, speech, or any mix of the two at very low bit rates between 12 and 64 kbit/s. It was developed by the Moving Picture Experts Group (MPEG) and was published in 2012 as the international standard ISO/IEC 23003-3 and as an MPEG-4 Audio Object Type in ISO/IEC 14496-3:2009/Amd 3.

Satin is a lossy speech codec developed by Microsoft. It was designed to supersede the earlier Silk codec in Microsoft's applications, and it uses a neural network and novel signal processing to improve performance over its predecessor.