In economics, adaptive expectations is a hypothesized process by which people form their expectations about what will happen in the future based on what has happened in the past. For example, if people want to create an expectation of the inflation rate in the future, they can refer to past inflation rates to infer some consistencies and could derive a more accurate expectation the more years they consider.
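One common way to formalize this idea is a partial-adjustment rule, in which the expectation is revised by a fraction of the latest forecast error: $\pi^e_{t+1} = \pi^e_t + \lambda(\pi_t - \pi^e_t)$. The sketch below is illustrative only; the function name, the adjustment parameter `adjustment` (playing the role of $\lambda$), and the inflation figures are assumptions, not taken from the text.

```python
def adaptive_expectations(observed, initial_expectation, adjustment=0.5):
    """Return the path of expectations under a partial-adjustment rule.

    Each period the expectation moves toward the latest observation by
    the fraction `adjustment` of the forecast error (hypothetical name).
    """
    expectations = [initial_expectation]
    for actual in observed:
        previous = expectations[-1]
        expectations.append(previous + adjustment * (actual - previous))
    return expectations


# Hypothetical inflation series (percent): a jump from 2 to 4.
inflation = [2.0, 2.0, 4.0, 4.0, 4.0]
path = adaptive_expectations(inflation, initial_expectation=2.0, adjustment=0.5)
print(path)  # [2.0, 2.0, 2.0, 3.0, 3.5, 3.75]
```

With this rule the expectation approaches the new inflation rate only gradually, which is the backward-looking behavior the hypothesis describes.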

In economics, "rational expectations" are model-consistent expectations, in that agents inside the model are assumed to "know the model" and on average take the model's predictions as valid. Rational expectations ensure internal consistency in models involving uncertainty. To obtain consistency within a model, the model's predictions of future values of economically relevant variables are assumed to be the same as those of the decision-makers in the model, given their information set, the nature of the random processes involved, and the model structure. The rational expectations assumption is used in many contemporary macroeconomic models.

New Keynesian economics is a school of macroeconomics that strives to provide microeconomic foundations for Keynesian economics. It developed partly as a response to criticisms of Keynesian macroeconomics by adherents of new classical macroeconomics.

The Phillips curve is an economic model, named after William Phillips, that predicts a correlation between reduction in unemployment and increased rates of wage rises within an economy. While Phillips himself did not state a linked relationship between employment and inflation, this was a trivial deduction from his statistical findings. Paul Samuelson and Robert Solow made the connection explicit and subsequently Milton Friedman and Edmund Phelps put the theoretical structure in place.

In probability theory and statistics, the Weibull distribution is a continuous probability distribution. It models a broad range of random variables, largely in the nature of a time to failure or time between events. Examples are maximum one-day rainfalls and the time a user spends on a web page.
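As a rough illustration, the standard two-parameter Weibull distribution has cumulative distribution function $F(x) = 1 - e^{-(x/\lambda)^k}$ for $x \ge 0$, with scale $\lambda$ and shape $k$. The sketch below evaluates this CDF and draws samples by inverting it; the function names and the parameter values are assumptions made for the example.

```python
import math
import random


def weibull_cdf(x, scale, shape):
    """CDF of the two-parameter Weibull distribution."""
    if x < 0:
        return 0.0
    return 1.0 - math.exp(-((x / scale) ** shape))


def weibull_sample(rng, scale, shape):
    """Draw one sample by inverse-transform sampling."""
    u = rng.random()  # uniform on [0, 1)
    return scale * (-math.log(1.0 - u)) ** (1.0 / shape)


rng = random.Random(0)
scale, shape = 10.0, 1.5  # hypothetical time-to-failure parameters
samples = [weibull_sample(rng, scale, shape) for _ in range(20000)]

# Share of samples at or below the scale parameter; in theory 1 - exp(-1).
share_below_scale = sum(s <= scale for s in samples) / len(samples)
print(share_below_scale, weibull_cdf(scale, scale, shape))
```

Python's standard library also offers `random.weibullvariate(alpha, beta)`, where `alpha` is the scale and `beta` the shape; the manual inversion here is only meant to make the link to the CDF visible.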

In economics and econometrics, the Cobb–Douglas production function is a particular functional form of the production function, widely used to represent the technological relationship between the amounts of two or more inputs and the amount of output that can be produced by those inputs. The Cobb–Douglas form was developed and tested against statistical evidence by Charles Cobb and Paul Douglas between 1927 and 1947; according to Douglas, the functional form itself was developed earlier by Philip Wicksteed.
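In its usual two-input form the function is written $Y = A L^{\beta} K^{\alpha}$, where $L$ is labor, $K$ is capital, $A$ is total factor productivity, and $\alpha$, $\beta$ are output elasticities. The following minimal sketch evaluates it and checks the constant-returns-to-scale property that holds when $\alpha + \beta = 1$; the parameter values and input quantities are hypothetical.

```python
import math


def cobb_douglas(labor, capital, tfp=1.0, alpha=0.3, beta=0.7):
    """Two-input Cobb-Douglas output: Y = A * L**beta * K**alpha."""
    return tfp * labor ** beta * capital ** alpha


base = cobb_douglas(labor=100.0, capital=50.0)
doubled = cobb_douglas(labor=200.0, capital=100.0)

# With alpha + beta = 1, doubling both inputs doubles output.
print(math.isclose(doubled, 2.0 * base))
```

If the exponents summed to more or less than one, the same check would reveal increasing or decreasing returns to scale respectively.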

Nominal rigidity, also known as price-stickiness or wage-stickiness, is a situation in which a nominal price is resistant to change. Complete nominal rigidity occurs when a price is fixed in nominal terms for a relevant period of time. For example, the price of a particular good might be fixed at $10 per unit for a year. Partial nominal rigidity occurs when a price may vary in nominal terms, but not as much as it would if perfectly flexible. For example, in a regulated market there might be limits to how much a price can change in a given year.

John Brian Taylor is the Mary and Robert Raymond Professor of Economics at Stanford University, and the George P. Shultz Senior Fellow in Economics at Stanford University's Hoover Institution.

The policy-ineffectiveness proposition (PIP) is a new classical theory proposed in 1975 by Thomas J. Sargent and Neil Wallace based upon the theory of rational expectations, which posits that monetary policy cannot systematically manage the levels of output and employment in the economy.

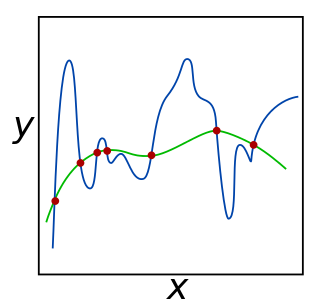

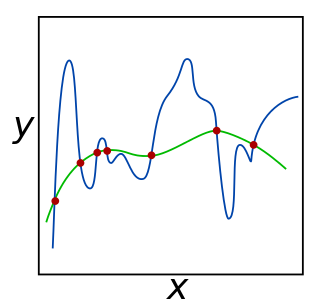

In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that changes the resulting answer to be "simpler". It is often used to obtain results for ill-posed problems or to prevent overfitting.

In mathematics, Mostow's rigidity theorem, or strong rigidity theorem, or Mostow–Prasad rigidity theorem, essentially states that the geometry of a complete, finite-volume hyperbolic manifold of dimension greater than two is determined by the fundamental group and hence unique. The theorem was proven for closed manifolds by Mostow (1968) and extended to finite volume manifolds by Marden (1974) in 3 dimensions, and by Prasad (1973) in all dimensions at least 3. Gromov (1981) gave an alternate proof using the Gromov norm. Besson, Courtois & Gallot (1996) gave the simplest available proof.

Difference in differences is a statistical technique used in econometrics and quantitative research in the social sciences that attempts to mimic an experimental research design using observational study data, by studying the differential effect of a treatment on a 'treatment group' versus a 'control group' in a natural experiment. It calculates the effect of a treatment on an outcome by comparing the average change over time in the outcome variable for the treatment group to the average change over time for the control group. Although it is intended to mitigate the effects of extraneous factors and selection bias, depending on how the treatment group is chosen, this method may still be subject to certain biases.
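The comparison of average changes described above reduces, in the simplest two-group, two-period case, to the arithmetic sketched below. The function name and the outcome data are hypothetical, and the sketch ignores standard errors and covariates.

```python
from statistics import mean


def difference_in_differences(treat_pre, treat_post, control_pre, control_post):
    """Two-group, two-period difference-in-differences estimate."""
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical outcomes before and after the treatment date.
estimate = difference_in_differences(
    treat_pre=[10.0, 12.0],
    treat_post=[15.0, 17.0],
    control_pre=[9.0, 11.0],
    control_post=[11.0, 13.0],
)
print(estimate)  # 3.0
```

Here the treated group's average rises by 5 and the control group's by 2, so the estimated effect is 3. The estimate is only meaningful if the two groups would have followed parallel trends in the absence of treatment.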

Also known as the (Moran-)Gamma Process, the gamma process is a random process studied in mathematics, statistics, probability theory, and stochastics. The gamma process is a stochastic or random process consisting of independently distributed gamma distributions where $N(t)$ represents the number of event occurrences from time 0 to time $t$. The gamma distribution has shape parameter $\gamma$ and rate (inverse-scale) parameter $\lambda$, often written as $\Gamma(\gamma, \lambda)$. Both $\gamma$ and $\lambda$ must be greater than 0. The gamma process is often written as $\Gamma(t; \gamma, \lambda)$, where $t$ represents the time from 0. The process is a pure-jump increasing Lévy process with intensity measure $\nu(x) = \gamma x^{-1} e^{-\lambda x}$ for all positive $x$. Thus jumps whose size lies in the interval $[x, x + dx)$ occur as a Poisson process with intensity $\nu(x)\,dx$. The parameter $\gamma$ controls the rate of jump arrivals and the scaling parameter $\lambda$ inversely controls the jump size. It is assumed that the process starts from a value 0 at $t = 0$, meaning $X(0) = 0$.
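A gamma process can be simulated on a time grid by using the fact that its increments over an interval of length $\Delta t$ are independent and gamma distributed with shape $\gamma \Delta t$ and rate $\lambda$, so that the mean of $X(t)$ is $\gamma t / \lambda$. The sketch below assumes this parameterization; the function name, argument names, and numerical values are illustrative only.

```python
import random


def simulate_gamma_process(rng, gamma_, lam, horizon, steps):
    """Simulate X(t) on an even grid over [0, horizon], starting at X(0) = 0.

    Each increment is Gamma(shape=gamma_ * dt, rate=lam). Python's
    random.gammavariate takes a scale parameter, hence 1 / lam.
    """
    dt = horizon / steps
    path = [0.0]
    for _ in range(steps):
        increment = rng.gammavariate(gamma_ * dt, 1.0 / lam)
        path.append(path[-1] + increment)
    return path


rng = random.Random(1)
gamma_, lam = 2.0, 4.0  # hypothetical parameters
paths = [simulate_gamma_process(rng, gamma_, lam, horizon=1.0, steps=10)
         for _ in range(2000)]

# The sample mean of X(1) should be near gamma_ * 1 / lam = 0.5.
terminal_mean = sum(p[-1] for p in paths) / len(paths)
print(terminal_mean)
```

Every simulated path is non-decreasing, in line with the description of the process as a pure-jump increasing Lévy process.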

The Heckman correction is a statistical technique to correct bias from non-randomly selected samples or otherwise incidentally truncated dependent variables, a pervasive issue in quantitative social sciences when using observational data. Conceptually, this is achieved by explicitly modelling the individual sampling probability of each observation together with the conditional expectation of the dependent variable. The resulting likelihood function is mathematically similar to the tobit model for censored dependent variables, a connection first drawn by James Heckman in 1974. Heckman also developed a two-step control function approach to estimate this model, which avoids the computational burden of having to estimate both equations jointly, albeit at the cost of inefficiency. Heckman received the Nobel Memorial Prize in Economic Sciences in 2000 for his work in this field.

Financial models with long-tailed distributions and volatility clustering have been introduced to overcome problems with the realism of classical financial models. These classical models of financial time series typically assume homoskedasticity and normality, and therefore cannot explain stylized phenomena such as skewness, heavy tails, and volatility clustering of empirical asset returns in finance. In 1963, Benoit Mandelbrot first used the stable distribution to model empirical distributions that exhibit skewness and heavy tails. Since $\alpha$-stable distributions have infinite $p$-th moments for all $p > \alpha$, the tempered stable processes have been proposed for overcoming this limitation of the stable distribution.

The Gent hyperelastic material model is a phenomenological model of rubber elasticity that is based on the concept of limiting chain extensibility. In this model, the strain energy density function is designed such that it has a singularity when the first invariant of the left Cauchy-Green deformation tensor reaches a limiting value $I_m$.
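For an incompressible material the Gent strain energy density is usually written $W = -\frac{\mu J_m}{2} \ln\left(1 - \frac{I_1 - 3}{J_m}\right)$, where $\mu$ is the shear modulus, $I_1$ is the first invariant, and $J_m = I_m - 3$. The sketch below evaluates this expression and illustrates the singularity as $I_1$ approaches the limiting value; the function name and the parameter values are assumptions for illustration.

```python
import math


def gent_energy(i1, mu, i_m):
    """Gent strain energy density for an incompressible material.

    i1  : first invariant of the left Cauchy-Green tensor (3 when undeformed)
    mu  : shear modulus
    i_m : limiting value of i1 at which the energy becomes singular
    """
    j_m = i_m - 3.0
    if j_m <= 0.0:
        raise ValueError("limiting value i_m must exceed 3")
    x = (i1 - 3.0) / j_m
    if x >= 1.0:
        raise ValueError("i1 has reached or exceeded the limiting value i_m")
    return -0.5 * mu * j_m * math.log1p(-x)


mu, i_m = 1.0, 100.0  # hypothetical material parameters
for i1 in (3.0, 10.0, 50.0, 99.0, 99.99):
    print(i1, gent_energy(i1, mu, i_m))
```

The printed energies grow without bound as `i1` nears `i_m`, while for very large `i_m` the expression approaches the neo-Hookean form $\frac{\mu}{2}(I_1 - 3)$.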

In differential geometry, a fibered manifold is a surjective submersion of smooth manifolds Y → X. Locally trivial fibered manifolds are fiber bundles. Therefore, a notion of connection on fibered manifolds provides a general framework of a connection on fiber bundles.

A Calvo contract is the name given in macroeconomics to the pricing model in which a firm that has set a nominal price faces a constant probability of being able to reset it, independent of the time since the price was last reset. The model was first put forward by Guillermo Calvo in his 1983 article "Staggered Prices in a Utility-Maximizing Framework". The original article was written in a continuous-time mathematical framework, but the model is now mostly used in its discrete-time version. The Calvo model is the most common way to model nominal rigidity in new Keynesian DSGE macroeconomic models.
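In a discrete-time version, a constant per-period reset probability $h$ implies that price durations are geometrically distributed: a price lasts exactly $i$ periods with probability $h(1-h)^{i-1}$, and the expected duration is $1/h$. The sketch below checks this by simulation; the timing convention, the function names, and the value of $h$ are assumptions made for the example.

```python
import random


def prob_price_lasts(periods, reset_prob):
    """Probability that a newly set price lasts exactly `periods` periods."""
    return reset_prob * (1.0 - reset_prob) ** (periods - 1)


def expected_duration(reset_prob):
    """Mean duration of a price spell, in periods."""
    return 1.0 / reset_prob


def simulate_duration(rng, reset_prob):
    """Count periods until the firm is allowed to reset its price."""
    periods = 1
    while rng.random() >= reset_prob:  # no reset opportunity this period
        periods += 1
    return periods


rng = random.Random(42)
h = 0.25  # hypothetical reset probability per period
durations = [simulate_duration(rng, h) for _ in range(20000)]
simulated_mean = sum(durations) / len(durations)
print(simulated_mean, expected_duration(h))
```

Because the reset probability does not depend on how long the current price has been in place, the simulation needs no memory of the price's age, which is what makes the model convenient in practice.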

The de-sparsified lasso is a method for constructing confidence intervals and statistical tests for single or low-dimensional components of a large parameter vector in a high-dimensional model.

In theoretical physics, the dual graviton is a hypothetical elementary particle that is a dual of the graviton under electric-magnetic duality, as an S-duality, predicted by some formulations of supergravity in eleven dimensions.