In game theory and economics, a non-credible threat is a threat made in a sequential game that a rational player would not actually carry out, because doing so would not be in that player's best interest.

A threat and its counterpart, a commitment, were both defined by the American economist and Nobel laureate T. C. Schelling, who stated: "A announces that B's behaviour will lead to a response from A. If this response is a reward, then the announcement is a commitment; if this response is a penalty, then the announcement is a threat." [1] A player may make a threat, but it is deemed credible only if carrying it out serves that player's best interest. [2] In other words, the player would be willing to carry out the threatened action regardless of the other player's choice. [3] This rests on the assumption that the player is rational. [1]
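The credibility test described above can be expressed as a check at a single decision node: is the threatened action a best response for the threatening player once that node is reached? The following is a minimal sketch under that reading; the function name `is_credible` and the payoff numbers in the usage example are hypothetical and chosen only for illustration.

```python
def is_credible(payoffs: dict[str, float], threatened_action: str) -> bool:
    """Return True if the threatened action is a best response for the
    threatening player at the node where it would be carried out.

    `payoffs` maps each action available at that node to the threatening
    player's own payoff (hypothetical values, not taken from the source).
    """
    best = max(payoffs.values())
    return payoffs[threatened_action] == best


# Hypothetical: fighting an entrant costs the incumbent more than accommodating it,
# so a threat to fight is not credible.
print(is_credible({"fight": -1, "accommodate": 1}, "fight"))  # False
# Hypothetical: fighting is now the incumbent's best option, so the threat is credible.
print(is_credible({"fight": 2, "accommodate": 1}, "fight"))   # True
```

A rational player compares only the payoffs available at the node where the threat would be executed; what the threat was meant to achieve earlier in the game plays no role in this check.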

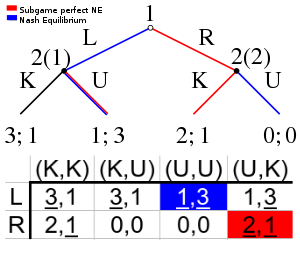

A non-credible threat is made in the hope that it will be believed, so that the undesirable threatened action never needs to be carried out. [4] For a threat to be credible within an equilibrium, it must actually be carried out whenever play reaches a node at which it is supposed to be fulfilled. [3] Nash equilibria that rely on non-credible threats can be eliminated through backward induction; the equilibria that remain are called subgame perfect Nash equilibria. [2] [5]
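To illustrate how backward induction removes equilibria that rest on non-credible threats, the sketch below uses a stylized two-player market entry game: an entrant chooses "out" or "in", and if it enters, an incumbent chooses "fight" or "accommodate". The payoffs are hypothetical numbers picked for the illustration, and the function names are likewise illustrative. The code lists the pure-strategy Nash equilibria of the game's normal form, then solves the game by backward induction.

```python
from itertools import product

# Hypothetical payoffs as (entrant, incumbent); illustrative only.
ENTRANT_ACTIONS = ("out", "in")
INCUMBENT_ACTIONS = ("fight", "accommodate")


def payoff(entrant: str, incumbent: str) -> tuple[int, int]:
    """Payoffs for a complete strategy profile."""
    if entrant == "out":
        return (0, 2)  # the incumbent's plan is never executed
    return (-1, -1) if incumbent == "fight" else (1, 1)


def nash_equilibria() -> list[tuple[str, str]]:
    """Pure-strategy Nash equilibria: no player gains by deviating alone."""
    result = []
    for e, i in product(ENTRANT_ACTIONS, INCUMBENT_ACTIONS):
        u_e, u_i = payoff(e, i)
        entrant_ok = all(payoff(e2, i)[0] <= u_e for e2 in ENTRANT_ACTIONS)
        incumbent_ok = all(payoff(e, i2)[1] <= u_i for i2 in INCUMBENT_ACTIONS)
        if entrant_ok and incumbent_ok:
            result.append((e, i))
    return result


def backward_induction() -> tuple[str, str]:
    """Subgame perfect equilibrium: solve the last decision node first."""
    # Incumbent moves last: pick its best reply once entry has occurred.
    inc = max(INCUMBENT_ACTIONS, key=lambda a: payoff("in", a)[1])
    # Entrant anticipates that reply and picks its own best action.
    ent = max(ENTRANT_ACTIONS, key=lambda a: payoff(a, inc)[0])
    return (ent, inc)


print(nash_equilibria())     # [('out', 'fight'), ('in', 'accommodate')]
print(backward_induction())  # ('in', 'accommodate')
```

With these payoffs, ("out", "fight") is a Nash equilibrium only because the incumbent's threat to fight is never tested. Once entry occurs, fighting yields the incumbent -1 instead of 1, so the threat is non-credible and backward induction discards that equilibrium, leaving ("in", "accommodate") as the subgame perfect one.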