
| Thermodynamics | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

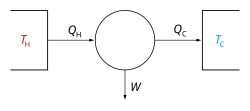

The classical Carnot heat engine | ||||||||||||

| ||||||||||||

This is a timeline of events in the history of thermodynamics.