The Au file format is a simple audio file format introduced by Sun Microsystems. The format was common on NeXT systems and on early Web pages. Originally it was headerless, being 8-bit μ-law-encoded data at an 8000 Hz sample rate. Hardware from other vendors often used sample rates as high as 8192 Hz, often integer multiples of video clock signal frequencies. Newer files have a header that consists of six unsigned 32-bit words, an optional information chunk which is always of non-zero size, and then the data.

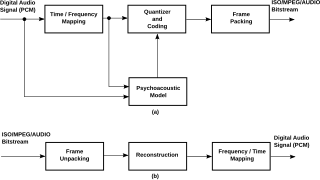

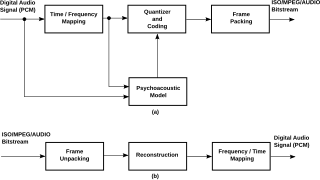

Speech coding is an application of data compression to digital audio signals containing speech. Speech coding uses speech-specific parameter estimation using audio signal processing techniques to model the speech signal, combined with generic data compression algorithms to represent the resulting modeled parameters in a compact bitstream.

In electronics, an analog-to-digital converter is a system that converts an analog signal, such as a sound picked up by a microphone or light entering a digital camera, into a digital signal. An ADC may also provide an isolated measurement such as an electronic device that converts an analog input voltage or current to a digital number representing the magnitude of the voltage or current. Typically the digital output is a two's complement binary number that is proportional to the input, but there are other possibilities.

An A-law algorithm is a standard companding algorithm, used in European 8-bit PCM digital communications systems to optimize, i.e. modify, the dynamic range of an analog signal for digitizing. It is one of the two companding algorithms in the G.711 standard from ITU-T, the other being the similar μ-law, used in North America and Japan.

In telecommunications and signal processing, companding is a method of mitigating the detrimental effects of a channel with limited dynamic range. The name is a portmanteau of the words compressing and expanding, which are the functions of a compander at the transmitting and receiving ends, respectively. The use of companding allows signals with a large dynamic range to be transmitted over facilities that have a smaller dynamic range capability. Companding is employed in telephony and other audio applications such as professional wireless microphones and analog recording.

Delta modulation is an analog-to-digital and digital-to-analog signal conversion technique used for transmission of voice information where quality is not of primary importance. DM is the simplest form of differential pulse-code modulation (DPCM) where the difference between successive samples is encoded into n-bit data streams. In delta modulation, the transmitted data are reduced to a 1-bit data stream representing either up (↗) or down (↘). Its main features are:

Signal-to-noise ratio is a measure used in science and engineering that compares the level of a desired signal to the level of background noise. SNR is defined as the ratio of signal power to noise power, often expressed in decibels. A ratio higher than 1:1 indicates more signal than noise.

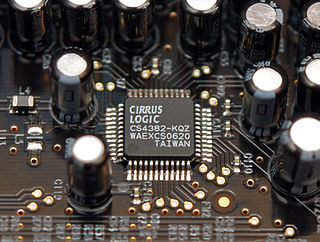

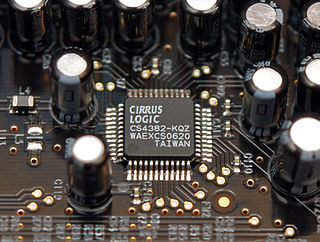

In electronics, a digital-to-analog converter is a system that converts a digital signal into an analog signal. An analog-to-digital converter (ADC) performs the reverse function.

G.711 is a narrowband audio codec originally designed for use in telephony that provides toll-quality audio at 64 kbit/s. It is an ITU-T standard (Recommendation) for audio encoding, titled Pulse code modulation (PCM) of voice frequencies released for use in 1972.

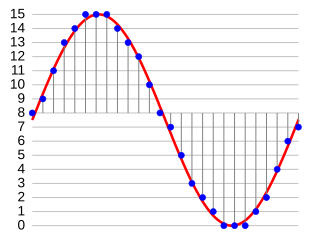

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms.

Near Instantaneous Companded Audio Multiplex (NICAM) is an early form of lossy compression for digital audio. It was originally developed in the early 1970s for point-to-point links within broadcasting networks. In the 1980s, broadcasters began to use NICAM compression for transmissions of stereo TV sound to the public.

Continuously variable slope delta modulation is a voice coding method. It is a delta modulation with variable step size, first proposed by Greefkes and Riemens in 1970.

G.729 is a royalty-free narrow-band vocoder-based audio data compression algorithm using a frame length of 10 milliseconds. It is officially described as Coding of speech at 8 kbit/s using code-excited linear prediction speech coding (CS-ACELP), and was introduced in 1996. The wide-band extension of G.729 is called G.729.1, which equals G.729 Annex J.

G.726 is an ITU-T ADPCM speech codec standard covering the transmission of voice at rates of 16, 24, 32, and 40 kbit/s. It was introduced to supersede both G.721, which covered ADPCM at 32 kbit/s, and G.723, which described ADPCM for 24 and 40 kbit/s. G.726 also introduced a new 16 kbit/s rate. The four bit rates associated with G.726 are often referred to by the bit size of a sample, which are 2, 3, 4, and 5-bits respectively. The corresponding wide-band codec based on the same technology is G.722.

In digital audio using pulse-code modulation (PCM), bit depth is the number of bits of information in each sample, and it directly corresponds to the resolution of each sample. Examples of bit depth include Compact Disc Digital Audio, which uses 16 bits per sample, and DVD-Audio and Blu-ray Disc, which can support up to 24 bits per sample.

Adaptive differential pulse-code modulation (ADPCM) is a variant of differential pulse-code modulation (DPCM) that varies the size of the quantization step, to allow further reduction of the required data bandwidth for a given signal-to-noise ratio.

In signal processing, sub-band coding (SBC) is any form of transform coding that breaks a signal into a number of different frequency bands, typically by using a fast Fourier transform, and encodes each one independently. This decomposition is often the first step in data compression for audio and video signals.

Pulse-code modulation (PCM) is a method used to digitally represent analog signals. It is the standard form of digital audio in computers, compact discs, digital telephony and other digital audio applications. In a PCM stream, the amplitude of the analog signal is sampled at uniform intervals, and each sample is quantized to the nearest value within a range of digital steps. Alec Reeves, Claude Shannon, Barney Oliver and John R. Pierce are credited with its invention.

Pulse-density modulation, or PDM, is a form of modulation used to represent an analog signal with a binary signal. In a PDM signal, specific amplitude values are not encoded into codewords of pulses of different weight as they would be in pulse-code modulation (PCM); rather, the relative density of the pulses corresponds to the analog signal's amplitude. The output of a 1-bit DAC is the same as the PDM encoding of the signal.

In digital communications, an encoding law is a allocation of signal quantization levels across the possible analog signal levels in an analog-to-digital converter system. They can be viewed as a simple form of instantaneous companding.