Related Research Articles

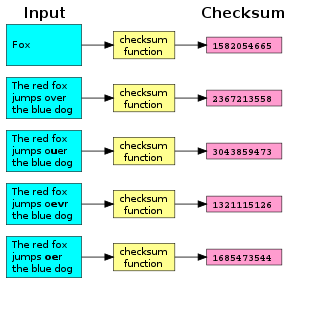

A checksum is a small-sized block of data derived from another block of digital data for the purpose of detecting errors that may have been introduced during its transmission or storage. By themselves, checksums are often used to verify data integrity but are not relied upon to verify data authenticity.

In information theory and coding theory with applications in computer science and telecommunication, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.

In computer networking, Point-to-Point Protocol (PPP) is a data link layer communication protocol between two routers directly without any host or any other networking in between. It can provide loop detection, authentication, transmission encryption, and data compression.

A cyclic redundancy check (CRC) is an error-detecting code commonly used in digital networks and storage devices to detect accidental changes to digital data. Blocks of data entering these systems get a short check value attached, based on the remainder of a polynomial division of their contents. On retrieval, the calculation is repeated and, in the event the check values do not match, corrective action can be taken against data corruption. CRCs can be used for error correction.

A frame is a digital data transmission unit in computer networking and telecommunication. In packet switched systems, a frame is a simple container for a single network packet. In other telecommunications systems, a frame is a repeating structure supporting time-division multiplexing.

In telecommunications, a transverse redundancy check (TRC) or vertical redundancy check is a redundancy check for synchronized parallel bits applied once per bit time, across the bit streams. This requires additional parallel channels for the check bit or bits.

In telecommunications and computer networking, a network packet is a formatted unit of data carried by a packet-switched network. A packet consists of control information and user data; the latter is also known as the payload. Control information provides data for delivering the payload. Typically, control information is found in packet headers and trailers.

The Serial Line Internet Protocol (SLIP) is an encapsulation of the Internet Protocol designed to work over serial ports and router connections. It is documented in RFC 1055. On personal computers, SLIP has largely been replaced by the Point-to-Point Protocol (PPP), which is better engineered, has more features, and does not require its IP address configuration to be set before it is established. On microcontrollers, however, SLIP is still the preferred way of encapsulating IP packets, due to its very small overhead.

In computer networking, the transport layer is a conceptual division of methods in the layered architecture of protocols in the network stack in the Internet protocol suite and the OSI model. The protocols of this layer provide end-to-end communication services for applications. It provides services such as connection-oriented communication, reliability, flow control, and multiplexing.

The data link layer, or layer 2, is the second layer of the seven-layer OSI model of computer networking. This layer is the protocol layer that transfers data between nodes on a network segment across the physical layer. The data link layer provides the functional and procedural means to transfer data between network entities and may also provide the means to detect and possibly correct errors that can occur in the physical layer.

A parity bit, or check bit, is a bit added to a string of binary code. Parity bits are a simple form of error detecting code. Parity bits are generally applied to the smallest units of a communication protocol, typically 8-bit octets (bytes), although they can also be applied separately to an entire message string of bits.

Modbus or MODBUS is a client/server data communications protocol in the application layer. It was originally published by Modicon in 1979 for use with its programmable logic controllers (PLCs). Modbus has become a de facto standard communication protocol for communication between industrial electronic devices in a wide range of buses and network.

The Fletcher checksum is an algorithm for computing a position-dependent checksum devised by John G. Fletcher (1934–2012) at Lawrence Livermore Labs in the late 1970s. The objective of the Fletcher checksum was to provide error-detection properties approaching those of a cyclic redundancy check but with the lower computational effort associated with summation techniques.

A frame check sequence (FCS) is an error-detecting code added to a frame in a communication protocol. Frames are used to send payload data from a source to a destination.

CRC-based framing is a kind of frame synchronization used in Asynchronous Transfer Mode (ATM) and other similar protocols.

Hybrid automatic repeat request is a combination of high-rate forward error correction (FEC) and automatic repeat request (ARQ) error-control. In standard ARQ, redundant bits are added to data to be transmitted using an error-detecting (ED) code such as a cyclic redundancy check (CRC). Receivers detecting a corrupted message will request a new message from the sender. In Hybrid ARQ, the original data is encoded with an FEC code, and the parity bits are either immediately sent along with the message or only transmitted upon request when a receiver detects an erroneous message. The ED code may be omitted when a code is used that can perform both forward error correction (FEC) in addition to error detection, such as a Reed–Solomon code. The FEC code is chosen to correct an expected subset of all errors that may occur, while the ARQ method is used as a fall-back to correct errors that are uncorrectable using only the redundancy sent in the initial transmission. As a result, hybrid ARQ performs better than ordinary ARQ in poor signal conditions, but in its simplest form this comes at the expense of significantly lower throughput in good signal conditions. There is typically a signal quality cross-over point below which simple hybrid ARQ is better, and above which basic ARQ is better.

Binary Synchronous Communication is an IBM character-oriented, half-duplex link protocol, announced in 1967 after the introduction of System/360. It replaced the synchronous transmit-receive (STR) protocol used with second generation computers. The intent was that common link management rules could be used with three different character encodings for messages.

Computation of a cyclic redundancy check is derived from the mathematics of polynomial division, modulo two. In practice, it resembles long division of the binary message string, with a fixed number of zeroes appended, by the "generator polynomial" string except that exclusive or operations replace subtractions. Division of this type is efficiently realised in hardware by a modified shift register, and in software by a series of equivalent algorithms, starting with simple code close to the mathematics and becoming faster through byte-wise parallelism and space–time tradeoffs.

An Answer To Reset (ATR) is a message output by a contact Smart Card conforming to ISO/IEC 7816 standards, following electrical reset of the card's chip by a card reader. The ATR conveys information about the communication parameters proposed by the card, and the card's nature and state.

CAN FD is a data-communication protocol used for broadcasting sensor data and control information on 2 wire interconnections between different parts of electronic instrumentation and control system. This protocol is used in modern high performance vehicles.

References

- ↑ RFC 935: "Reliable link layer protocols".

- ↑ "Errors, Error Detection, and Error Control: Data Communications and ComputerNetworks: A Business User's Approach".

- ↑ "Chapter1". Archived from the original on 2013-06-13. Retrieved 2012-08-20.

- ↑ Gary H. Kemmetmueller. "RAM error correction using two dimensional parity checking".

- ↑ Oosterbaan. "Longitudinal parity".

- ↑ "Errors, Error Detection, and Error Control".

- ↑ ISO 1155:1978 Information processing -- Use of longitudinal parity to detect errors in information messages.

- ↑ RFC 914. "A Thinwire Protocol for connecting personal computers to the INTERNET". Appendix D: "Serial Line Interface Protocol (SLIP)".

This article incorporates public domain material from Federal Standard 1037C. General Services Administration. Archived from the original on 2022-01-22. (in support of MIL-STD-188).

This article incorporates public domain material from Federal Standard 1037C. General Services Administration. Archived from the original on 2022-01-22. (in support of MIL-STD-188).