Related Research Articles

In computer programming, assembly language, often referred to simply as Assembly and commonly abbreviated as ASM or asm, is any low-level programming language with a very strong correspondence between the instructions in the language and the architecture's machine code instructions. Assembly language usually has one statement per machine instruction (1:1), but constants, comments, assembler directives, symbolic labels of, e.g., memory locations, registers, and macros are generally also supported.

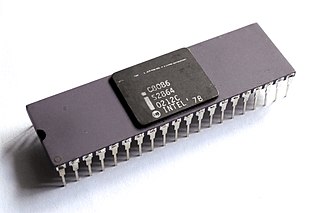

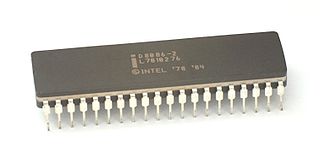

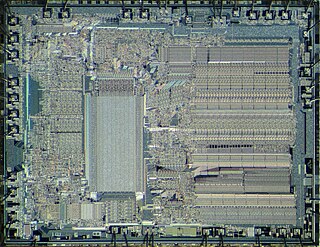

The 8086 is a 16-bit microprocessor chip designed by Intel between early 1976 and June 8, 1978, when it was released. The Intel 8088, released July 1, 1979, is a slightly modified chip with an external 8-bit data bus, and is notable as the processor used in the original IBM PC design.

The Intel 8088 microprocessor is a variant of the Intel 8086. Introduced on June 1, 1979, the 8088 has an eight-bit external data bus instead of the 16-bit bus of the 8086. The 16-bit registers and the one megabyte address range are unchanged, however. In fact, according to the Intel documentation, the 8086 and 8088 have the same execution unit (EU)—only the bus interface unit (BIU) is different. The original IBM PC is based on the 8088, as are its clones.

In computer programming, machine code is computer code consisting of machine language instructions, which are used to control a computer's central processing unit (CPU). Although decimal computers were once common, the contemporary marketplace is dominated by binary computers; for those computers, machine code is "the binary representation of a computer program which is actually read and interpreted by the computer. A program in machine code consists of a sequence of machine instructions ."

x86 is a family of complex instruction set computer (CISC) instruction set architectures initially developed by Intel based on the Intel 8086 microprocessor and its 8088 variant. The 8086 was introduced in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486 processors. Colloquially, their names were "186", "286", "386" and "486".

The Intel 8085 ("eighty-eighty-five") is an 8-bit microprocessor produced by Intel and introduced in March 1976. It is software-binary compatible with the more-famous Intel 8080 with only two minor instructions added to support its added interrupt and serial input/output features. However, it requires less support circuitry, allowing simpler and less expensive microcomputer systems to be built. The "5" in the part number highlighted the fact that the 8085 uses a single +5-volt (V) power supply by using depletion-mode transistors, rather than requiring the +5 V, −5 V and +12 V supplies needed by the 8080. This capability matched that of the competing Z80, a popular 8080-derived CPU introduced the year before. These processors could be used in computers running the CP/M operating system.

x86 memory segmentation refers to the implementation of memory segmentation in the Intel x86 computer instruction set architecture. Segmentation was introduced on the Intel 8086 in 1978 as a way to allow programs to address more than 64 KB (65,536 bytes) of memory. The Intel 80286 introduced a second version of segmentation in 1982 that added support for virtual memory and memory protection. At this point the original mode was renamed to real mode, and the new version was named protected mode. The x86-64 architecture, introduced in 2003, has largely dropped support for segmentation in 64-bit mode.

In computing, protected mode, also called protected virtual address mode, is an operational mode of x86-compatible central processing units (CPUs). It allows system software to use features such as segmentation, virtual memory, paging and safe multi-tasking designed to increase an operating system's control over application software.

x86 assembly language is the name for the family of assembly languages which provide some level of backward compatibility with CPUs back to the Intel 8008 microprocessor, which was launched in April 1972. It is used to produce object code for the x86 class of processors.

In computer engineering, Halt and Catch Fire, known by the assembly mnemonic HCF, is an idiom referring to a computer machine code instruction that causes the computer's central processing unit (CPU) to cease meaningful operation, typically requiring a restart of the computer. It originally referred to a fictitious instruction in IBM System/360 computers, making a joke about its numerous non-obvious instruction mnemonics.

A general protection fault (GPF) in the x86 instruction set architectures (ISAs) is a fault initiated by ISA-defined protection mechanisms in response to an access violation caused by some running code, either in the kernel or a user program. The mechanism is first described in Intel manuals and datasheets for the Intel 80286 CPU, which was introduced in 1983; it is also described in section 9.8.13 in the Intel 80386 programmer's reference manual from 1986. A general protection fault is implemented as an interrupt. Some operating systems may also classify some exceptions not related to access violations, such as illegal opcode exceptions, as general protection faults, even though they have nothing to do with memory protection. If a CPU detects a protection violation, it stops executing the code and sends a GPF interrupt. In most cases, the operating system removes the failing process from the execution queue, signals the user, and continues executing other processes. If, however, the operating system fails to catch the general protection fault, i.e. another protection violation occurs before the operating system returns from the previous GPF interrupt, the CPU signals a double fault, stopping the operating system. If yet another failure occurs, the CPU is unable to recover; since 80286, the CPU enters a special halt state called "Shutdown", which can only be exited through a hardware reset. The IBM PC AT, the first PC-compatible system to contain an 80286, has hardware that detects the Shutdown state and automatically resets the CPU when it occurs. All descendants of the PC AT do the same, so in a PC, a triple fault causes an immediate system reset.

The x86 instruction set refers to the set of instructions that x86-compatible microprocessors support. The instructions are usually part of an executable program, often stored as a computer file and executed on the processor.

The Intel 8087, announced in 1980, was the first x87 floating-point coprocessor for the 8086 line of microprocessors.

The Program Segment Prefix (PSP) is a data structure used in DOS systems to store the state of a program. It resembles the Zero Page in the CP/M operating system. The PSP has the following structure:

The Western Design Center (WDC) 65C02 microprocessor is an enhanced CMOS version of the popular nMOS-based 8-bit MOS Technology 6502. The 65C02 fixed several problems in the original 6502 and added some new instructions, but its main feature was greatly lowered power usage, on the order of 10 to 20 times less than the original 6502 running at the same speed. The reduced power consumption made the 65C02 useful in portable computer roles and microcontroller systems in industrial settings. It has been used in some home computers, as well as in embedded applications, including medical-grade implanted devices.

In computing, the x86 memory models are a set of six different memory models of the x86 CPU operating in real mode which control how the segment registers are used and the default size of pointers.

A Trace Vector Decoder (TVD) is computer software that uses the trace facility of its underlying microprocessor to decode encrypted instruction opcodes just-in-time prior to execution and possibly re-encode them afterwards. It can be used to hinder reverse engineering when attempting to prevent software cracking as part of an overall copy protection strategy.

The TI-990 was a series of 16-bit minicomputers sold by Texas Instruments (TI) in the 1970s and 1980s. The TI-990 was a replacement for TI's earlier minicomputer systems, the TI-960 and the TI-980. It had several unique features, and was easier to program than its predecessors.

A stack register is a computer central processor register whose purpose is to keep track of a call stack. On an accumulator-based architecture machine, this may be a dedicated register. On a machine with mulitple general-purpose registers, it may be a register that is reserved by convention, such as on the IBM System/360 through z/Architecture architecture and RISC architectures, or it may be a register that procedure call and return instructions are hardwired to use, such as on the PDP-11, VAX, and Intel x86 architectures. Some designs such as the Data General Eclipse had no dedicated register, but used a reserved hardware memory address for this function.

A trap flag permits operation of a processor in single-step mode. If such a flag is available, debuggers can use it to step through the execution of a computer program.

References

- ↑ "ARM Information Center". ARM Technical Support Knowledge Articles.

- ↑ Hayes, John (1998). Computer Architecture and Organization (Second ed.). McGraw-Hill.

- ↑ Feller, William (1968). An Introduction to Probability theory and its applications (Second ed.). John Wiley and Sons.

- ↑ Papoulis, Athanasios; S.Unnikrishna Pillai (2008). Probability, Random Variables and Stochastic Processes (Fourth ed.). McGraw-Hill. pp. 784 to 800.

- ↑ Zaky, Safwat; V. Carl Hamacher; Zvonko G. Vranesic (1996). Computer Organization (Fourth ed.). McGraw-Hill. pp. 310–329. ISBN 0-07-114309-2.

- ↑ "Block diagram of 8086 CPU".

- ↑ Hall, Douglas (2006). Microprocessors and Interfacing. Tata McGraw-Hill. p. 2.12. ISBN 0-07-060167-4.

- ↑ Hall, Douglas (2006). Microprocessors and Interfacing. New Delhi: Tata McGraw-Hill. pp. 2.13–2.14. ISBN 0-07-060167-4.

- ↑ McKevitt, James; Bayliss, John (March 1979). "New options from big chips". IEEE Spectrum. 16 (3): 28–34. doi:10.1109/MSPEC.1979.6367944. S2CID 25154920.