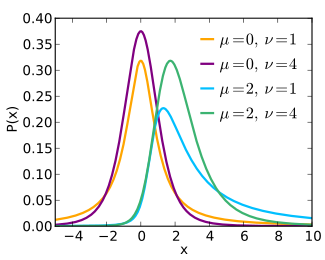

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is The parameter is the mean or expectation of the distribution, while the parameter is the variance. The standard deviation of the distribution is (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate.

In particle physics, the Dirac equation is a relativistic wave equation derived by British physicist Paul Dirac in 1928. In its free form, or including electromagnetic interactions, it describes all spin-1/2 massive particles, called "Dirac particles", such as electrons and quarks for which parity is a symmetry. It is consistent with both the principles of quantum mechanics and the theory of special relativity, and was the first theory to account fully for special relativity in the context of quantum mechanics. It was validated by accounting for the fine structure of the hydrogen spectrum in a completely rigorous way. It has become vital in the building of the Standard Model.

In probability theory and statistics, Student's t distribution is a continuous probability distribution that generalizes the standard normal distribution. Like the latter, it is symmetric around zero and bell-shaped.

In probability theory and statistics, the chi-squared distribution with degrees of freedom is the distribution of a sum of the squares of independent standard normal random variables.

In probability theory, a distribution is said to be stable if a linear combination of two independent random variables with this distribution has the same distribution, up to location and scale parameters. A random variable is said to be stable if its distribution is stable. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it.

The scaled inverse chi-squared distribution, where is the scale parameter, equals the univariate inverse Wishart distribution with degrees of freedom .

The Pearson distribution is a family of continuous probability distributions. It was first published by Karl Pearson in 1895 and subsequently extended by him in 1901 and 1916 in a series of articles on biostatistics.

In probability theory, the Rice distribution or Rician distribution is the probability distribution of the magnitude of a circularly-symmetric bivariate normal random variable, possibly with non-zero mean (noncentral). It was named after Stephen O. Rice (1907–1986).

In probability theory and statistics, the noncentral chi-squared distribution is a noncentral generalization of the chi-squared distribution. It often arises in the power analysis of statistical tests in which the null distribution is a chi-squared distribution; important examples of such tests are the likelihood-ratio tests.

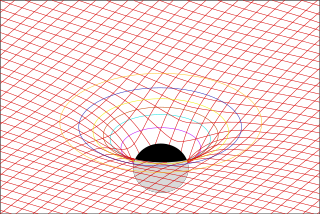

In physics, Maxwell's equations in curved spacetime govern the dynamics of the electromagnetic field in curved spacetime or where one uses an arbitrary coordinate system. These equations can be viewed as a generalization of the vacuum Maxwell's equations which are normally formulated in the local coordinates of flat spacetime. But because general relativity dictates that the presence of electromagnetic fields induce curvature in spacetime, Maxwell's equations in flat spacetime should be viewed as a convenient approximation.

The folded normal distribution is a probability distribution related to the normal distribution. Given a normally distributed random variable X with mean μ and variance σ2, the random variable Y = |X| has a folded normal distribution. Such a case may be encountered if only the magnitude of some variable is recorded, but not its sign. The distribution is called "folded" because probability mass to the left of x = 0 is folded over by taking the absolute value. In the physics of heat conduction, the folded normal distribution is a fundamental solution of the heat equation on the half space; it corresponds to having a perfect insulator on a hyperplane through the origin.

In statistics, the multivariate t-distribution is a multivariate probability distribution. It is a generalization to random vectors of the Student's t-distribution, which is a distribution applicable to univariate random variables. While the case of a random matrix could be treated within this structure, the matrix t-distribution is distinct and makes particular use of the matrix structure.

Expected shortfall (ES) is a risk measure—a concept used in the field of financial risk measurement to evaluate the market risk or credit risk of a portfolio. The "expected shortfall at q% level" is the expected return on the portfolio in the worst of cases. ES is an alternative to value at risk that is more sensitive to the shape of the tail of the loss distribution.

In financial mathematics, tail value at risk (TVaR), also known as tail conditional expectation (TCE) or conditional tail expectation (CTE), is a risk measure associated with the more general value at risk. It quantifies the expected value of the loss given that an event outside a given probability level has occurred.

In probability theory and statistics, the normal-inverse-gamma distribution is a four-parameter family of multivariate continuous probability distributions. It is the conjugate prior of a normal distribution with unknown mean and variance.

In probability and statistics, the generalized K-distribution is a three-parameter family of continuous probability distributions. The distribution arises by compounding two gamma distributions. In each case, a re-parametrization of the usual form of the family of gamma distributions is used, such that the parameters are:

In statistics and probability theory, the nonparametric skew is a statistic occasionally used with random variables that take real values. It is a measure of the skewness of a random variable's distribution—that is, the distribution's tendency to "lean" to one side or the other of the mean. Its calculation does not require any knowledge of the form of the underlying distribution—hence the name nonparametric. It has some desirable properties: it is zero for any symmetric distribution; it is unaffected by a scale shift; and it reveals either left- or right-skewness equally well. In some statistical samples it has been shown to be less powerful than the usual measures of skewness in detecting departures of the population from normality.

In probability theory and statistics, the generalized multivariate log-gamma (G-MVLG) distribution is a multivariate distribution introduced by Demirhan and Hamurkaroglu in 2011. The G-MVLG is a flexible distribution. Skewness and kurtosis are well controlled by the parameters of the distribution. This enables one to control dispersion of the distribution. Because of this property, the distribution is effectively used as a joint prior distribution in Bayesian analysis, especially when the likelihood is not from the location-scale family of distributions such as normal distribution.

In statistics, the folded-t and half-t distributions are derived from Student's t-distribution by taking the absolute values of variates. This is analogous to the folded-normal and the half-normal statistical distributions being derived from the normal distribution.

In probability theory, the stable count distribution is the conjugate prior of a one-sided stable distribution. This distribution was discovered by Stephen Lihn in his 2017 study of daily distributions of the S&P 500 and the VIX. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it.