Discrete distributions

With finite support

- The Bernoulli distribution, which takes value 1 with probability p and value 0 with probability q = 1 − p.

- The Rademacher distribution, which takes value 1 with probability 1/2 and value −1 with probability 1/2.

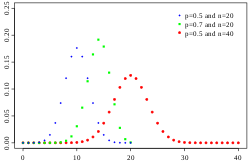

- The binomial distribution, which describes the number of successes in a series of independent Yes/No experiments all with the same probability of success.

- The beta-binomial distribution, which describes the number of successes in a series of independent Yes/No experiments with heterogeneity in the success probability.

- The degenerate distribution at x0, where X is certain to take the value x0. This does not look random, but it satisfies the definition of a random variable. It is useful because it puts deterministic variables and random variables in the same formalism.

- The discrete uniform distribution, where all elements of a finite set are equally likely. This is the theoretical distribution model for a balanced coin, an unbiased die, a casino roulette wheel, or the first card of a well-shuffled deck.

- The hypergeometric distribution, which describes the number of successes in the first m of a series of n consecutive Yes/No experiments, if the total number of successes is known. This distribution arises when there is no replacement.

- The negative hypergeometric distribution, which describes the number of attempts needed to get the nth success in a series of Yes/No experiments without replacement.

- The Poisson binomial distribution, which describes the number of successes in a series of independent Yes/No experiments with different success probabilities.

- Fisher's noncentral hypergeometric distribution

- Wallenius' noncentral hypergeometric distribution

- Benford's law, which describes the frequency of the first digit in many naturally occurring data sets.

- The ideal and robust soliton distributions.

- Zipf's law or the Zipf distribution, a discrete power-law distribution whose most famous example is the frequency of words in the English language.

- The Zipf–Mandelbrot law, a discrete power-law distribution that generalizes the Zipf distribution.
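Several of the finite-support distributions above have closed-form probability mass functions that are easy to evaluate directly. The following is a minimal sketch, assuming Python 3.8 or later (for `math.comb`); the function and parameter names are illustrative choices, not taken from any particular library. The hypergeometric parameters follow the description above: `n` experiments in total, the first `m` of them observed, and a known total number of successes.

```python
from math import comb, log10


def binomial_pmf(k, n, p):
    """P(X = k): k successes in n independent trials with success probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def hypergeometric_pmf(k, n, m, total_successes):
    """P(X = k): k successes among the first m of n trials,
    given that exactly total_successes of the n trials succeed.
    Assumes 0 <= k <= m and m - k >= 0 (no negative arguments to comb)."""
    return (
        comb(total_successes, k)
        * comb(n - total_successes, m - k)
        / comb(n, m)
    )


def benford_pmf(d):
    """P(first digit = d) for d in 1..9 under Benford's law (base 10)."""
    return log10(1 + 1 / d)


if __name__ == "__main__":
    # With n = 1 the binomial reduces to the Bernoulli distribution.
    print(binomial_pmf(1, 1, 0.3))
    # Probabilities of each leading digit, largest for d = 1.
    print([round(benford_pmf(d), 3) for d in range(1, 10)])
```

Each function returns a single probability; summing over the full support (for example `k = 0..n` for the binomial, or `d = 1..9` for Benford's law) should give 1 up to floating-point rounding.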

With infinite support

- The beta negative binomial distribution

- The Boltzmann distribution, a discrete distribution important in statistical physics which describes the probabilities of the various discrete energy levels of a system in thermal equilibrium. It has a continuous analogue.

- The Maxwell–Boltzmann distribution, a special case of the Boltzmann distribution.

- The Borel distribution

- The discrete phase-type distribution, a generalization of the geometric distribution which describes the first hit time of the absorbing state of a finite terminating Markov chain.

- The extended negative binomial distribution

- The generalized log-series distribution

- The Gauss–Kuzmin distribution

- The geometric distribution, which describes the number of attempts needed to get the first success in a series of independent Bernoulli trials, or alternatively the number of failures before the first success (i.e. one less).

- The Hermite distribution

- The logarithmic (series) distribution

- The mixed Poisson distribution

- The negative binomial distribution or Pascal distribution, a generalization of the geometric distribution to the nth success.

- The discrete compound Poisson distribution

- The parabolic fractal distribution

- The Poisson distribution, which describes the number of events occurring in a given time interval when a very large number of individually unlikely events are possible. Related to this distribution are a number of other distributions: the displaced Poisson, the hyper-Poisson, the general Poisson binomial and the Poisson type distributions.

- The Conway–Maxwell–Poisson distribution, a two-parameter extension of the Poisson distribution with an adjustable rate of decay.

- The zero-truncated Poisson distribution, for processes in which zero counts are not observed.

- The Polya–Eggenberger distribution

- The Skellam distribution, the distribution of the difference between two independent Poisson-distributed random variables.

- The skew elliptical distribution

- The Yule–Simon distribution

- The zeta distribution, which has uses in applied statistics and statistical mechanics and may be of interest to number theorists. It is the Zipf distribution for an infinite number of elements.

- The Hardy distribution, which describes the probabilities of the hole scores for a given golf player.
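A few of the infinite-support distributions above can be illustrated with short probability mass functions. The sketch below uses only the Python standard library; all function names are hypothetical, and the Skellam sum is truncated at an assumed cutoff (`terms`), so it is an approximation suitable for small rates rather than a general-purpose implementation. It shows the two geometric conventions (trials versus failures), the Poisson and zero-truncated Poisson distributions, and the Skellam distribution built directly from its definition as a difference of two independent Poisson variables.

```python
from math import exp, factorial


def geometric_pmf_trials(k, p):
    """P(first success occurs on trial k), for k = 1, 2, ..."""
    return (1 - p) ** (k - 1) * p


def geometric_pmf_failures(k, p):
    """P(exactly k failures before the first success), for k = 0, 1, ..."""
    return (1 - p) ** k * p


def poisson_pmf(k, lam):
    """P(N = k) for a Poisson variable with rate lam, for k = 0, 1, ..."""
    return exp(-lam) * lam**k / factorial(k)


def zero_truncated_poisson_pmf(k, lam):
    """P(N = k | N > 0), for k = 1, 2, ...: zero counts are never observed."""
    return poisson_pmf(k, lam) / (1 - exp(-lam))


def skellam_pmf(k, lam1, lam2, terms=60):
    """P(N1 - N2 = k) for independent Poisson N1, N2 with rates lam1, lam2.

    Sums P(N1 = j + k) * P(N2 = j) over j, truncated at `terms`
    (an assumed cutoff; adequate only when both rates are small).
    """
    start = max(0, -k)  # need j >= 0 and j + k >= 0
    return sum(
        poisson_pmf(j + k, lam1) * poisson_pmf(j, lam2)
        for j in range(start, terms)
    )
```

The two geometric conventions differ only by a shift of one: `geometric_pmf_trials(k, p)` equals `geometric_pmf_failures(k - 1, p)`. When both rates are equal, `skellam_pmf` is symmetric around zero, as expected for the difference of two identically distributed counts.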